Creating a radiologic technologist peer review program that is integrated with a PACS and managed electronically has revolutionized the way that quality assurance is handled within the radiology department at the University of Maryland Medical Center (UMMC) in Baltimore.

The university's Technologist Report Card program tracks radiologic technologists' (RT) performance at the clinical level, following critical events such as errors and omissions that may have occurred during exams. It combines this event reporting with a postexam peer review process that enables RTs to evaluate the work of their peers -- giving managers valuable insight into the skills of both judges and the judged.

The hospital has experienced good compliance with the program, as RTs find it a useful way to provide constructive criticism to their peers in a nonconfrontational environment.

Built on RadTracker

The University of Maryland Medical Center has made quality assurance in radiology a major priority, with a special focus on radiologic technologist performance. To that end, researchers at the university developed RadTracker, a software tool implemented in 2006 that enables radiologists to electronically notify both technologists and the department's modality supervisors of imaging errors and omissions relating to specific exams.

The university saw RadTracker as an immediate improvement over the paper-based event reporting system it replaced: radiologists submitted 20 times more quality control issues through the electronic system than they had on paper.

In addition, RadTracker enabled many issues to be resolved to everyone's satisfaction within 60 minutes, according to Paul Nagy, Ph.D., associate professor of radiology at UMMC, and radiologic technologist Benjamin Pierce, diagnostic radiology manager at the hospital.

But despite RadTracker's advantages, Nagy and Pierce wanted to go beyond isolated event reporting to create an ongoing system for managing image and data quality objectively, and for evaluating a technologist's knowledge of their profession. Pierce thought that the best way to evaluate and maintain technologists' skills would be to find out what their perception of a quality image was. Having the RTs evaluate each other's images would force them to pay more attention to their own technique.

This would be done by film critiques, a core component of radiography school basic training. In a film critique, a student is required to hang the films of an exam in the appropriate order; evaluate the images with respect to anatomy, pathology, and positioning; read the report; and explain what the contents of the report mean. This exercise reveals what a student knows, and everyone in the x-ray tech class benefits from it, Pierce said.

In December 2007, Pierce and Heather Damon, also a radiology manager at UMMC, proposed that the radiology department create an electronic version of film critiques. The department's Technologist Peer Review program was implemented several months later.

How it works

Every eight weeks, six technologists are assigned to review and grade 25 radiographs per week, for a total of 200 exams per technologist. The radiographs represent images acquired in outpatient clinics, in the emergency and trauma departments, from portable x-ray equipment, and from fluoroscopy systems.

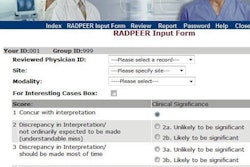

When one of these technologists logs into the PACS, a worklist of randomly selected exams is displayed. Selecting an exam opens it from the PACS, with the images shown alongside an electronic grading sheet. Each exam is graded in six categories on a scale of one to five; if an RT assigns a grade of 3 or lower, he or she is expected to type a written explanation of the rationale, including what is wrong with the exam.
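The grading rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not UMMC's software: the article does not name the six categories, so the category names below are hypothetical placeholders, as are the `GradeSheet` class and the `validate` function. The sketch also assumes that a grade of 3 or lower in any single category is what triggers the written explanation.

```python
from dataclasses import dataclass

# Hypothetical category names; the article says only that there are six.
CATEGORIES = ("anatomy", "positioning", "exposure",
              "collimation", "markers", "artifacts")

@dataclass
class GradeSheet:
    exam_id: str
    scores: dict           # category name -> integer grade from 1 to 5
    explanation: str = ""  # grader's written rationale, if any

def validate(sheet):
    """Return a list of problems; an empty list means the sheet is complete."""
    problems = []
    low = []
    for cat in CATEGORIES:
        score = sheet.scores.get(cat)
        if score is None:
            problems.append(f"missing grade for {cat}")
        elif not isinstance(score, int) or not 1 <= score <= 5:
            problems.append(f"grade for {cat} must be an integer from 1 to 5")
        elif score <= 3:
            low.append(cat)
    # Assumed rule: any grade of 3 or lower needs a written rationale.
    if low and not sheet.explanation.strip():
        problems.append("written explanation required for: " + ", ".join(low))
    return problems
```

A sheet with all fives passes with no explanation, while a sheet with a 2 for positioning is flagged until the grader types a rationale.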

Upon completion, the exam transfers to the worklist of one of four department supervisors or lead technologists, who review the original grader's assessment. The reviewers either agree or disagree with the grader's findings.
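One way to turn these agree-or-disagree decisions into a picture of each grader is a simple agreement rate. The sketch below is an assumption about how such a figure could be computed; the article does not describe the actual metric, and the function name `agreement_rates` and the input layout are hypothetical.

```python
from collections import defaultdict

def agreement_rates(reviews):
    """reviews: iterable of (grader_id, reviewer_agreed) pairs, one per exam.

    Returns {grader_id: fraction of that grader's exams the reviewer agreed with}.
    """
    totals = defaultdict(int)
    agreed = defaultdict(int)
    for grader_id, reviewer_agreed in reviews:
        totals[grader_id] += 1
        if reviewer_agreed:
            agreed[grader_id] += 1
    return {g: agreed[g] / totals[g] for g in totals}
```

A grader whose assessments are consistently confirmed would score near 1.0, which matches the situation Pierce describes of a technologist who knows what a good exam looks like.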

"When a tech evaluates 200 exams, it enables me to understand more of what he or she knows about an image rather than how well he acquires one. The evaluations provide insights about what our technologists know about anatomy, positioning, and the elements of a good image," Pierce said.

"If a tech who does not consistently produce images of excellent quality grades 100 exams, and Heather and I completely agree with their assessment, I can tell that technologist, 'You know what you are looking at, and you know the characteristics of a good exam. I am going to constructively work with you to create better quality exams yourself,' " Pierce explained.

A Technologist Report Card is created from the RadTracker entries specific to the technologist and from data mined from the Technologist Peer Review database. Supervisors review technologists' report cards monthly, a task made easier because the data are presented as graphs. Technologists who are not meeting department standards are entered into a performance improvement plan, and supervisors are able to track improvement, or lack thereof, using the tools of the Technologist Report Card.
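As a rough sketch of how two such data sources might be combined into a monthly summary, the Python below counts reported issues and averages peer grades per month for one technologist. The record layouts, the function name `build_report_card`, and the choice of metrics are all assumptions made for illustration; the article does not specify what the report card actually contains.

```python
from collections import defaultdict
from statistics import mean

def build_report_card(tech_id, radtracker_events, peer_grades):
    """Summarize one technologist's month-by-month record.

    radtracker_events: iterable of (tech_id, "YYYY-MM"), one per reported issue.
    peer_grades: iterable of (tech_id, "YYYY-MM", grade) for exams the
        technologist acquired and a peer graded.
    Returns {month: {"issues": int, "mean_peer_grade": number or None}}.
    """
    issues = defaultdict(int)
    grades = defaultdict(list)
    for tid, month in radtracker_events:
        if tid == tech_id:
            issues[month] += 1
    for tid, month, grade in peer_grades:
        if tid == tech_id:
            grades[month].append(grade)
    months = sorted(set(issues) | set(grades))
    return {
        m: {
            "issues": issues.get(m, 0),
            # None marks a month with no peer-graded exams.
            "mean_peer_grade": mean(grades[m]) if m in grades else None,
        }
        for m in months
    }
```

The per-month dictionary is the kind of structure that could feed a trend graph, so a supervisor could see whether issue counts fall and peer grades rise during a performance improvement plan.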

"When we have a technologist who is doing subpar work, having peers anonymously confirm that the exams are substandard eliminates any supervisor or radiologist bias that may be perceived," he said.

Pierce and Damon meet with each technologist upon completion of a peer review session to discuss the quality of their evaluations. The grade sheet is currently being modified to promote more feedback from the grader, so the radiology managers can build an accurate grader profile and completely understand each individual technologist's knowledge of the craft.

The radiology managers supervise more than 60 technologists and rely on e-mail to convey regular, timely feedback, both negative and positive. The Technologist Report Card program enables them to intervene more rapidly when a technologist's work is not meeting the standards of the department, so that training can be provided and corrective action taken.

"The purpose of the Technologist Report Card is to be constructive and supportive, not punitive," Pierce said. "However, there must come a point where punitive actions are taken."

Pierce emphasized that while the program's data are used in formal performance reviews, the reason it was implemented, and its greatest value, is to ensure consistent quality and ultimately reduce the number of image and data errors within the university's radiology department.

By Cynthia E. Keen

AuntMinnie.com staff writer

October 16, 2009

Copyright © 2009 AuntMinnie.com