SAN ANTONIO - Commercial PACS networks are not designed to support comprehensive quality assurance (QA) practices; it’s up to the customer to implement these procedures, and radiologists have a pivotal role to play, according to Charles Willis, Ph.D., of the University of Texas M. D. Anderson Cancer Center (MDACC) in Houston.

"(The user) bears the burden of developing and implementing QA, because (the user) suffers the consequences of its absence," said Willis, an associate professor in the department of imaging physics. He spoke Thursday at PACS 2004: Working in an Integrated Digital Healthcare Enterprise, sponsored by the University of Rochester School of Medicine and Dentistry this week in San Antonio.

A number of errors can crop up in PACS implementations. Mistakes can occur in configuring PACS, including inappropriate software settings and values, outdated or inconsistent software versions, and incompatible software and hardware combinations, Willis said. PACS devices, such as monitors, computed radiography systems, film digitizers, laser cameras, analog interfaces, and phototimers, can also be improperly calibrated.

"Anything that has an analog component to it can be calibrated and miscalibrated," Willis said.

The methodology and frequency for calibrating PACS devices have not been well established, and the consequences of miscalibration are not widely acknowledged, Willis said.

If improperly displayed, even the best electronic image looks terrible, he said. CRT monitors degrade over time, and the wrong display look-up table (LUT) can spoil a great electronic image, he said. Part 14 of the DICOM standard, which defines the Grayscale Standard Display Function (GSDF), addresses this issue.

Test patterns, notably the Society of Motion Picture and Television Engineers (SMPTE) pattern, can make display problems obvious, Willis said.

"You can’t distinguish all the problems that you might have, but it gives you a good, warm and fuzzy feeling that your monitors are OK," he said.

Other errors that can come up in PACS include design flaws -- some software and hardware features either don’t function or act in an undesired manner. In addition, the software may not support some processes that are absolutely required for clinical practice.

Users may be faced with limited connectivity, including incomplete or incompatible DICOM interpretations, and divergence from the Integrating the Healthcare Enterprise (IHE) initiative, Willis said. Software design principles may not have been adhered to, and reliability engineering principles may not have been applied.

Human factors

It’s also important to factor in the inherent limitations of human operators, Willis said.

Processes that depend on humans are a source of random errors, while automated processes contribute systematic errors.

"Human errors increase exponentially with the complexity of the system and operator interface," he said. "Quality control processes must be in place to detect and rectify errors."

Degraded performance results in quantifiable consequences, such as loss of contrast sensitivity, loss of sharpness/spatial resolution, loss of dynamic range, increase in noise, decrease in system speed, geometric distortion, and artifacts, Willis said.

Improperly identified images can also cause problems, including unavailability of the image. Incorrect exam information can affect image development, and misassociation complicates the detection of errors, he said. And with the proliferation of digital images around the enterprise, the correction process is complicated.

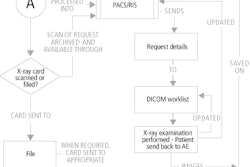

Implementing automated association methods can help, including RIS interfaces, bar code scanner augmentation, and DICOM modality worklist management. Quality assurance involves making sure that the devices are properly operated, the devices operate properly, and that the devices are properly maintained, Willis said.

The process must consider the entire imaging chain from acquisition to display, and must regard the human operator as an integral part of the system, he said. Even with PACS, bad practice still translates into bad images, Willis said. Automation can’t correct for patient motion, bad positioning, improper collimation, etc.

"Image processing is a poor substitute for proper examination technique," he said.

In a filmless environment, the technologist/supervisor must accept responsibility for appropriate delivery of all images to the physician, he said. Processes must be in place to verify that all exams performed and all images acquired reach their intended destinations; there should also be processes to correct errors when detected.
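One way to picture the delivery check Willis describes is a simple reconciliation between what the modality says it acquired and what the archive says it received. The sketch below is only an illustration under stated assumptions: the function name `reconcile_delivery`, the use of accession numbers as keys, and the per-exam image counts are hypothetical, not details from the talk or from any PACS product.

```python
def reconcile_delivery(performed, archived):
    """Compare exams performed against exams received by the archive.

    Both arguments are hypothetical inputs: dicts that map an accession
    number to an image count (acquired at the modality, received at the
    archive).
    """
    # Exams that were performed but never reached the archive.
    missing = sorted(acc for acc in performed if acc not in archived)

    # Exams that arrived with fewer images than were acquired,
    # reported as (acquired, received).
    incomplete = {
        acc: (performed[acc], archived[acc])
        for acc in performed
        if acc in archived and archived[acc] < performed[acc]
    }

    # Exams in the archive with no matching performed exam, a possible
    # sign of misidentification.
    unexpected = sorted(acc for acc in archived if acc not in performed)

    return missing, incomplete, unexpected


if __name__ == "__main__":
    performed = {"A1": 2, "A2": 4, "A3": 1}
    archived = {"A1": 2, "A2": 3, "A4": 1}
    missing, incomplete, unexpected = reconcile_delivery(performed, archived)
    print("Missing:", missing)
    print("Incomplete:", incomplete)
    print("Unexpected:", unexpected)
```

Each of the three lists would then feed whatever correction process the site has in place.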

Image-reject analysis needs to be part of a quality assurance plan, and a method should be developed for capturing rejects. Data should be collected and analyzed, with results reported to management and staff, Willis said. Training should be implemented, as indicated, with results shared with vendors.
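As a rough illustration of the analysis step, the sketch below computes an overall reject rate and a tally of rejects by reason. It assumes rejects have already been captured as a list of reason labels; the function name `summarize_rejects` and the reason labels are hypothetical and were not described in the talk.

```python
from collections import Counter


def summarize_rejects(total_exposures, reject_reasons):
    """Return (reject_rate, reasons ranked by frequency).

    total_exposures: count of all exposures in the period, rejects included.
    reject_reasons: one label per rejected image (hypothetical labels).
    """
    if total_exposures <= 0:
        raise ValueError("total_exposures must be positive")
    if len(reject_reasons) > total_exposures:
        raise ValueError("more rejects than exposures")

    # Fraction of all exposures that were rejected.
    rate = len(reject_reasons) / total_exposures

    # Reasons sorted from most to least frequent.
    ranked = Counter(reject_reasons).most_common()
    return rate, ranked


if __name__ == "__main__":
    reasons = ["positioning", "motion", "positioning", "collimation"]
    rate, ranked = summarize_rejects(200, reasons)
    print(f"Reject rate: {rate:.1%}")
    for reason, count in ranked:
        print(f"{reason}: {count}")
```

A ranked list of this kind could indicate where training is needed, for example if positioning errors dominate.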

The best type of maintenance is preventive maintenance, Willis said. Calibrations need to be performed as scheduled, and operators need to clean, inspect, and document.

"We’ve found that start-of-shift routines or checklists are helpful," he said.

Preventive maintenance should be scheduled to take place at the convenience of the clinical operation, he said. Also, software upgrades are major service events that demand re-verification of proper function, he said.

Human operators have a right to know what is expected of them in a PACS environment, and vendor applications training is not sufficient. As such, local policies and practice must be developed, communicated, documented, reinforced, and enforced, Willis said.

Developing clinical competency criteria is helpful for standardizing and documenting basic proficiency training, Willis said. Training must also be tailored for the different types of personnel that use PACS, he said.

The radiologist role

QA costs time and money. And radiologists must emphasize QA, as they control resources and priorities, Willis said.

"Radiologists set the standard: hospital staff can only produce the lowest level of quality that is acceptable to the radiologists," he said. "Radiologists must demand accountability for image quality and availability, and must enthusiastically support the QA effort."

Often, PACS service interruptions are not planned for sufficiently, Willis said. Users need to know the answers to the following:

- How is PACS affected by the loss of utility services, i.e. power, HVAC, or network?

- How do I maintain continuity of clinical operations during downtime of an individual PACS component?

- Can local components operate during downtime of a central PACS component (database, archive, gateway, RIS, or RIS interface)?

- How does PACS recover after service is restored?

Software upgrades are also a source of service interruptions, Willis said.

Special attention must be given to the image database, which is absolutely critical for continued operations and recovery, Willis said. Performing daily backups to tape is insufficient, as the most critical exams are the most recent.

And reconstruction from physical media is too slow, he said. Willis’ institution employs parallel, non-concurrent databases that operate in "near real-time."

In conclusion, Willis said that errors will always occur in PACS; QC is the key to detecting errors, while training is the key to averting them. Reliability engineering is the key to continuity of clinical operations, and disaster recovery is the key to restoring normal clinical operations.

"Quality assurance includes all the above," he said.

By Erik L. Ridley
AuntMinnie.com staff writer

March 12, 2004


Copyright © 2004 AuntMinnie.com