A team at Northwestern University in Chicago has developed an AI model that they say could serve to alert emergency physicians to life-threatening diseases on chest x-rays, according to a study published October 5 in JAMA Network Open.

In the study, six emergency medicine physicians evaluated the AI model’s performance compared to standard clinical radiology x-ray reports and found the methods nearly equal in terms of accuracy and textual quality.

“Implementation of the model in the clinical workflow could enable timely alerts to life-threatening pathology while aiding imaging interpretation and documentation,” wrote first author Jonathan Huang, a fourth-year medical student, and colleagues.

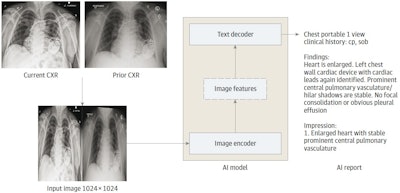

The model is one of a relatively new class of generative AI called transformer models. These multimodal models use large language models (LLMs) similar to ChatGPT to generate textual descriptions and are paired with deep learning models for image interpretation.

In this study, the group’s aim was to develop a generative AI tool for chest x-ray interpretation and retrospectively evaluate its performance in an emergency department setting.

“In light of rising imaging utilization in the ED, systems for providing prompt interpretation have become increasingly important to streamline emergency care,” the researchers wrote.

To train the model, the researchers used 900,000 chest x-ray reports that included a radiologist’s text on findings. Briefly, the AI tool is a transformer-based encoder-decoder model that takes chest radiograph images as input and generates radiology report text as output, the authors noted.

To test the model, they used a sample of 500 x-rays from their emergency department that had been interpreted by both a teleradiology service and an on-site attending radiologist between January 2022 and January 2023. Six emergency department physicians then compared these two reports, case by case, with the reports generated by the AI model, rating each on a 5-point Likert scale.

The AI model is an encoder-decoder model trained to generate a text report given a chest x-ray and the most recent comparison (anterior-posterior or posterior-anterior view only). The vision encoder weights were initialized from Vision Transformer (ViT) base and the text decoder weights were initialized from Robustly Optimized BERT Pretraining Approach (RoBERTa) base before training for 30 epochs on a data set of 900,000 chest x-rays (CXRs). cp = chest pain; sob = shortness of breath. Image courtesy of JAMA Network Open.

There were 336 normal x-rays (67.2%) and 164 abnormal x-rays (32.8%), with the most common findings being infiltrates, pulmonary edema, pleural effusions, support device presence, cardiomegaly, and pneumothorax.
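The case-mix percentages follow directly from the counts. As a quick check, this short Python sketch uses only the figures quoted above; the helper name `pct` is purely illustrative.

```python
# Counts as reported in the article.
normal = 336
abnormal = 164
total = normal + abnormal  # size of the test sample


def pct(part, whole):
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


normal_pct = pct(normal, total)
abnormal_pct = pct(abnormal, total)

print(f"{total} x-rays: {normal_pct}% normal, {abnormal_pct}% abnormal")
```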

According to the results, report type was significantly associated with ratings: the physicians gave significantly higher mean scores to AI reports (3.22) and radiologist reports (3.34) than to teleradiology reports (2.74).
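To illustrate how mean Likert scores per report type could be tabulated, here is a minimal Python sketch. The ratings in `toy_ratings` are invented for illustration and are not data from the study; the function name `mean_score_by_type` is likewise hypothetical, and the sketch does not reproduce the significance testing the researchers performed.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical toy ratings as (report_type, likert_score) pairs.
# These are NOT data from the study; they only show the bookkeeping.
toy_ratings = [
    ("ai", 4), ("ai", 3), ("ai", 3),
    ("radiologist", 4), ("radiologist", 3), ("radiologist", 4),
    ("teleradiology", 3), ("teleradiology", 2), ("teleradiology", 3),
]


def mean_score_by_type(ratings):
    """Group 1-5 Likert scores by report type and return each group's mean."""
    grouped = defaultdict(list)
    for report_type, score in ratings:
        if not 1 <= score <= 5:
            raise ValueError(f"Likert score out of range: {score}")
        grouped[report_type].append(score)
    return {report_type: mean(scores) for report_type, scores in grouped.items()}


means = mean_score_by_type(toy_ratings)
for report_type, value in means.items():
    print(f"{report_type}: {value:.2f}")
```

A comparison of means like this is only descriptive; deciding whether the differences are statistically significant requires a separate test suited to ordinal ratings from multiple raters.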

Further stratification of reports by the presence of cardiomegaly, pulmonary edema, pleural effusion, infiltrate, pneumothorax, and support devices likewise showed no difference between the report types in the probability of containing no clinically significant discrepancy, the researchers wrote.

“The generative AI model produced reports of similar clinical accuracy and textual quality to radiologist reports while providing higher textual quality than teleradiologist reports,” the group wrote.

Ultimately, timely interpretation of diagnostic x-rays is a crucial component in clinical decision-making in emergency settings, yet free-standing departments may lack dedicated radiology services and centers may not provide off-hours coverage, the researchers noted. This leaves a gap, they wrote.

“Generative AI methods, which generate data such as text and images following user direction, may bridge this gap by providing near-instant interpretations of medical imaging, supporting high case volumes without fatigue or personnel limitations,” the group concluded.
