AI could help streamline workflows for breast imagers, but it needs some fine-tuning to lower recall rates, according to a study published September 3 in the American Journal of Roentgenology.

An AI system reached near-perfect negative predictive value (NPV) in both digital mammography and digital breast tomosynthesis (DBT) in a real-world population-based study, but almost doubled the recall rate compared with radiologists, wrote a team led by Iris Chen from the University of California, Los Angeles.

“The findings support AI’s potential to aid radiologists’ workflow efficiency,” the Chen team wrote. “Yet, strategies are needed to address frequent false-positive results, particularly in the intermediate-risk category.”

Researchers continue to explore AI’s potential to make workloads more efficient for radiologists. This could help radiologists spend less time interpreting images at high volumes and lessen the risk of burnout.

Previous studies suggest that defining the AI-based intermediate-risk category as a positive result could lead to more cancers being found. However, recalling every exam in this category could also produce more false-positive results.

Chen and colleagues compared the diagnostic performance of a commercially available AI system (Transpara v1.7.1, ScreenPoint Medical) with that of radiologists. The system classified breast exams as positive at two different thresholds (elevated risk versus intermediate/elevated risk). The team focused on NPV and recall rates for its study.
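The two thresholds amount to two ways of turning a three-level risk category into a binary positive or negative call. A minimal sketch in Python, assuming hypothetical category labels and a hypothetical helper function (this is not the vendor's API):

```python
from enum import IntEnum


class Risk(IntEnum):
    """Hypothetical labels for the three AI risk categories."""
    LOW = 0
    INTERMEDIATE = 1
    ELEVATED = 2


def is_positive(category: Risk, threshold: Risk) -> bool:
    """Treat an exam as AI-positive when its category meets the threshold."""
    return category >= threshold


# Elevated-risk threshold: only elevated-risk exams count as positive.
# Intermediate/elevated threshold: intermediate-risk exams count as well.
for category in Risk:
    print(
        category.name,
        is_positive(category, Risk.ELEVATED),
        is_positive(category, Risk.INTERMEDIATE),
    )
```

Only intermediate-risk exams change from negative to positive when the threshold is lowered, which is why that category drives the difference between the two sets of results.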

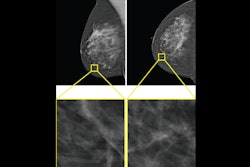

Craniocaudal (left) and mediolateral oblique (right) digital mammography images of the left breast were assessed by interpreting radiologist as BI-RADS category 1, consistent with negative result. The AI system flagged asymmetry in lateral breast on the craniocaudal view (circle) and categorized examination as intermediate risk, consistent with negative or positive result depending on threshold used for categorizing AI results. The patient was not diagnosed with breast cancer within one year after screening examination, consistent with negative outcome according to present study’s reference standard. Thus, interpretation by radiologist was true negative and by AI system was positive if defining both intermediate-risk and elevated-risk categories as positive. Annotation was not generated by the AI system but rather was recreated by present authors based on AI output coordinates. Image courtesy of the ARRS.

The study also included 11 interpreting breast radiologists with one to 40 years of post-training experience. The radiologists did not use AI in their interpretations, and the researchers did not track the number of breast imaging exams each radiologist interpreted.

The digital mammography cohort included 26,693 exams in 20,409 women with an average age of 58 years. AI classified 58.2% of exams as low risk, 27.7% as intermediate risk, and 14% as elevated risk.

The DBT cohort included 4,824 exams in 4,379 women with an average age of 61.3 years. AI classified 68.1% of exams as low risk, 19.8% as intermediate risk, and 12.1% as elevated risk.

In both groups, AI interpretation led to high NPVs for both elevated risk and intermediate/elevated risk thresholds. However, it also led to higher recall rates compared to those of the radiologists, with the intermediate/elevated risk threshold having the highest recall rates.
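The AI recall rates follow from the category shares reported for each cohort. The quick check below assumes that every AI-positive exam counts as a recall, which is consistent with the reported figures; the variable names are illustrative.

```python
# Category shares (percent of exams) as reported for each cohort.
shares = {
    "digital mammography": {"low": 58.2, "intermediate": 27.7, "elevated": 14.0},
    "DBT": {"low": 68.1, "intermediate": 19.8, "elevated": 12.1},
}

recall_rates = {}
for cohort, s in shares.items():
    recall_rates[cohort] = {
        # Elevated-risk threshold: only elevated-risk exams are recalled.
        "elevated": s["elevated"],
        # Intermediate/elevated threshold: both categories are recalled.
        "intermediate_or_elevated": round(s["intermediate"] + s["elevated"], 1),
    }

for cohort, rates in recall_rates.items():
    print(f"{cohort}: {rates['elevated']}% vs. {rates['intermediate_or_elevated']}%")
```

The sums come to 41.7% for digital mammography and 31.9% for DBT, in line with the reported 41.8% and 31.9%; the small gap reflects rounding of the published shares.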

Performance of AI system at different thresholds and radiologists without AI

| Measure | Digital mammography: Radiologists | Digital mammography: AI (elevated risk) | Digital mammography: AI (intermediate/elevated risk) | DBT: Radiologists | DBT: AI (elevated risk) | DBT: AI (intermediate/elevated risk) |
|---|---|---|---|---|---|---|
| Sensitivity | 88.6% | 74.4% | 94% | 83.8% | 78.4% | 89.2% |
| Specificity | 93.3% | 86.3% | 58.6% | 93.7% | 88.4% | 68.5% |
| Recall rate | 7.2% | 14% | 41.8% | 6.9% | 12.1% | 31.9% |
| NPV | 99.9% | 99.8% | 99.9% | 99.9% | 99.8% | 99.8% |
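NPV can stay near 99.9% even as specificity falls because cancer is rare in a screening population, so nearly all negative calls are true negatives. The sketch below applies the standard NPV formula to the digital mammography sensitivity and specificity figures above. The 0.5% cancer prevalence is an assumption for illustration, not a number reported here, and the function and variable names are hypothetical.

```python
def npv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Negative predictive value = TN / (TN + FN), per unit of population."""
    true_neg = specificity * (1 - prevalence)
    false_neg = (1 - sensitivity) * prevalence
    return true_neg / (true_neg + false_neg)


ASSUMED_PREVALENCE = 0.005  # assumption for illustration, not from the study

# Sensitivity and specificity for digital mammography, as reported.
readers = {
    "Radiologists": (0.886, 0.933),
    "AI (elevated risk)": (0.744, 0.863),
    "AI (intermediate/elevated risk)": (0.940, 0.586),
}

npvs = {name: npv(se, sp, ASSUMED_PREVALENCE) for name, (se, sp) in readers.items()}
for name, value in npvs.items():
    print(f"{name}: NPV = {value:.2%}")
```

Under that assumed prevalence, all three NPVs come out above 99.8%, even though specificity ranges from 58.6% to 93.3%. This is why NPV alone says little about how many women would be recalled unnecessarily.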

The high NPV achieved by AI and the large proportion of mammograms the AI system classified as low risk suggest that AI assistance could further streamline radiologists' work, the researchers highlighted. This could allow radiologists to turn more of their attention toward complex cases rather than negative exams.

“Such an approach could substantially improve workflow efficiency, reduce interpretation fatigue, and better allocate healthcare resources,” the study authors wrote.

They also presented two possible reasons why AI interpretation led to higher recall rates. First, the AI system could not incorporate data from prior imaging exams into its assessments. Second, it processed only the tomosynthesis images and did not have access to synthetic mammographic images.

“Large prospective studies remain needed to understand the optimal approach for radiologist-AI integration, particularly in the context of intermediate-risk results, for reducing false-positive recalls while maintaining high cancer detection rates,” the authors concluded.
