Radiologists are crying foul in the wake of dismal virtual colonoscopy results reported yesterday by researchers from the Medical University of South Carolina in Charleston. Proponents of the virtual exam said the study relied on antiquated methods and poorly trained radiologists, all but guaranteeing mediocrity.

MUSC in the scheme of things

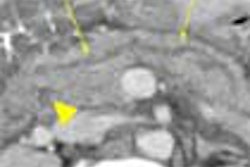

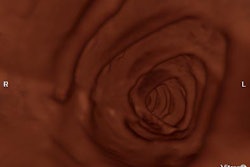

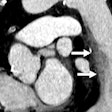

The study in question, a nine-center MUSC trial completed in 2001 but published only yesterday in the Journal of the American Medical Association, examined 600 subjects with both virtual and conventional colonoscopy. It found VC’s per-patient sensitivity to be just 39% for lesions 6 mm and larger and 55% for lesions 10 mm and larger, compared to 99% and 100% sensitivity, respectively, for conventional colonoscopy (JAMA, April 14, 2004, Vol. 291:14, pp. 1713-1719, 1772-1774).

"I certainly wouldn't recommend (virtual colonoscopy) now," lead investigator Dr. Peter Cotton, director of MUSC's digestive disease center, told USA Today. "This is a bucket of cold water to say, 'Let's back off a minute.'"

Several radiologists who spoke with AuntMinnie.com disagreed.

“The recent poor results reported in JAMA should not discourage well-trained readers of virtual colonoscopy from offering this test for colon cancer screening,” wrote Dr. Abraham Dachman, director of CT at the University of Chicago, in an e-mail to AuntMinnie.com. "Given the poor job we are doing at convincing the public to get screened for colorectal cancer, the public response to virtual colonoscopy should not be dampened, but the accuracy of the test should be properly explained.... The data summarized by Sosna et al are a more reasonable way to represent the diagnostic performance data of virtual colonoscopy” (American Journal of Roentgenology, December 2003, Vol. 181:6, pp. 1593-1598).

Sosna and colleagues’ meta-analysis found 14 studies that fulfilled their inclusion criteria (a total of 1,324 patients and 1,411 polyps). The pooled per-patient sensitivity for polyps 10 mm or larger was 88%, and 84% for polyps 6-9 mm -- results that were in line with those of the only experienced virtual colonoscopy reader in the MUSC study, Dachman noted.

A study published in December's New England Journal of Medicine was even more encouraging, he said.

In that study, Dr. Perry Pickhardt and colleagues found virtual colonoscopy’s sensitivity to be 88.7% for polyps 6 mm and larger, 93.9% for polyps 8 mm and larger, and 93.8% for lesions 10 mm and larger. In fact, conventional colonoscopy's sensitivity trended slightly lower: 92.3%, 91.5%, and 87.5%, respectively, in a cohort of 1,233 asymptomatic patients (NEJM, December 4, 2003, Vol. 349:23, pp. 2191-2200).

According to Dr. Matthew Barish, associate professor of radiology at Harvard Medical School in Boston, Pickhardt's study tried to address shortcomings that had held back virtual colonoscopy's performance in earlier trials. In contrast, he said, the MUSC study failed to account for some of VC's most basic requirements, using, for example, well-trained gastroenterologists for conventional colonoscopy while relying on trainees for virtual colonoscopy.

"It would be the equivalent of having a VC study where we went out and found untrained gastroenterologists, let them do 10 conventional colonoscopies, and then compared them to virtual colonoscopy, and then tried to publish that data," Barish said. "This is the best of the best compared to essentially the worst of the worst for virtual colonoscopy.”

According to Dr. Judy Yee, vice chair of radiology at the University of California, San Francisco, the lack of training "is a fatal error in the methodology of this study, and likely contributed to the poor performance of (virtual colonoscopy)."

Trial and error

In an editorial accompanying the MUSC study, Dr. David Ransohoff, an epidemiologist from the University of North Carolina at Chapel Hill, concurred that the MUSC study represented “what virtual colonoscopy would do” versus “what it could do.”

"(T)he differences between what virtual colonoscopy can do and what it will do if applied in ordinary-practice circumstances are so great that physicians must be cautious,” Ransohoff wrote. “There are many important steps yet to be taken in learning how to implement this new technology appropriately.”

But radiologists who spoke with AuntMinnie.com said that much of what the MUSC group missed was known long before the study was completed in 2001 -- particularly the need for adequate reader training.

“It has been repeatedly emphasized at international conferences that the minimum requirement for radiology performance might be 50 cases involving colon pathology, and that radiologists should attend training seminars and workshops where galleries of images are reviewed and the pitfalls of interpretation examined," wrote Dr. James Ehrlich, medical director of Colorado Heart & Body Imaging in Denver, in an e-mail to AuntMinnie.com. “The present study was primarily authored by a gastroenterologist ignoring this standard and any uniformity of equipment and technique -- one has to question the ultimate motivation for designing such a study that could achieve such dismal results.”

Barish echoed the need for adequate training before attempting virtual colonoscopy.

“This is not a technique that should be used by people without experience,” Barish said. “That’s why there are training centers -- at least six or seven training centers across the U.S. And those centers would never dream of training on 10 cases and then releasing people to start doing the technique.”

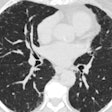

Barish also took issue with the MUSC study’s use of “very old equipment, primary 2D interpretation, limited 3D. They didn’t even have access to 3D problem-solving,” he said. “They actually did the reviewing on PACS, which is a very poor method of doing it. They didn’t use a dedicated workstation, either a primary 2D workstation or a primary 3D workstation.” Even MUSC’s apparent use of an open, or uncontrolled, bowel preparation favored conventional colonoscopy, he said, since most gastroenterologists use polyethylene glycol, which leaves too much residual fluid for optimal virtual colonoscopy.

“If you want to do virtual, then you really want to stick with some of the drier (saline) preps,” Barish said.

"In the Cotton study there was no description of the number of cases with adequate/optimal cleansing and distension," added UCSF's Yee. "It is the experience of many of us that suboptimal cleansing and/or distension severely limits the diagnostic ability of the study. There was no breakdown of the number of cases performed by site, so there is the possibility that some sites did relatively few cases and were not able to build experience."

Dr. Perry Pickhardt, associate professor of radiology at the University of Wisconsin Medical Center in Madison and principal investigator of December’s NEJM study, cited a number of shortcomings in the MUSC study’s design, including “poor 3D approach, no fecal tagging, and … 5-mm collimation on some of their studies.”

And rather than applying well-established methods of 2D primary review with 3D problem-solving, or vice versa, the MUSC researchers apparently used one or the other with little correlation between the two, he said.

Yee noted that CT technology has evolved since the study was completed in 2001. "Thinner collimation of 1.25 mm may be acquired with 16-slice MDCT, and image display platforms have improved significantly," she said.

The radiologists were trained on a minimum of 10 cases without feedback, Pickhardt said. “One center did only 10 cases. So it basically shows that when you put garbage in you get garbage out -- we’ve already learned the lessons from this study,” he said. In fact, he said, it is rather disingenuous to present the 2001 MUSC study as new, when it is actually a “delayed publication of an old study that couldn’t find a journal.”

Other considerations

For his part, Ehrlich emphasized that conventional colonoscopy remains the reference colon screening procedure, “but (it) clearly does not achieve the accuracy claimed by the Cotton study (99%) and, in fact, has less capability than CTC (VC) to routinely visualize the entire colonic mucosa,” he wrote.

Barish concurred that the Cotton study’s claimed accuracy for conventional colonoscopy was suspiciously high. “Even the study that Rex did with back-to-back colonoscopy didn’t have 100% sensitivity,” he said (American Journal of Gastroenterology, September 2003, Vol. 98:9, pp. 2000-2005).

“That’s also why I don’t believe the segmental unblinding was done to the full extent,” Barish said. “Every time a gastroenterologist went back to find a polyp in this particular study, they were not rewarded for finding a mistake. In other words, every time they missed something it would be a mistake, so how trusted could they be to go back and look for their errors?"

In his editorial accompanying the MUSC study, Ransohoff acknowledged the undercurrents of economic competition between radiologists and gastroenterologists in colon cancer screening, noting that “Gastroenterologists in practice or in training must prepare for a future with either increased demand or perhaps dramatically decreased demand because of new noninvasive tests based on radiological imaging, or on measuring DNA mutations in stool or proteins in blood."

Ransohoff also noted that there are clear benefits to be found in better collaboration between gastroenterologists and radiologists. “If positive virtual colonoscopy results can be evaluated with a same-day colonoscopy, patients can avoid a second bowel cleansing regimen.”

But Barish, and several other radiologists AuntMinnie.com spoke with, said that for one reason or another, virtual colonoscopy studies led by gastroenterologists almost always fail.

“I think the Pickhardt study (in December) sort of challenged the gastroenterologists, and lent another sort of boost to virtual colonoscopy," Barish said. “There’s a financial perspective…suggesting that they need to somehow limit or decrease the opportunities for VC -- for people’s enthusiasm for it. A few studies have been done like that, and most of them have been done by gastroenterologists.”

In his JAMA editorial, Ransohoff noted that there is plenty of opportunity for all.

“…Virtual colonoscopy will, at some point, become a clearly useful option for colorectal cancer screening,” he wrote. “This might well increase, not relieve, demand for colonoscopy because 40% of patients having virtual colonoscopy report that they would prefer standard colonoscopy for future screening…”

And, the University of Chicago's Dachman noted, “the addition of computer-aided detection will make the (virtual) exam easier for less-experienced radiologists to interpret with confidence.”

“If (Cotton et al) were a new study done with decent techniques, we’d have something to worry about,” Pickhardt said. “Bottom line is, short-term there’s going to be damage done to colorectal screening, but long-term this is just a little blip.”

By Eric Barnes

AuntMinnie.com staff writer

April 15, 2004

Related Reading

CT colonography sensitivity low, April 14, 2004

Reduced-prep VC with tagging works after failed colonoscopy, April 7, 2004

CT colonography helpful in cancer screening, March 19, 2004

Virtual colonoscopy "almost ready" for widespread use as screening tool, December 2, 2003

Meta-analysis of virtual colonoscopy studies yields promising results, March 19, 2003

Copyright © 2004 AuntMinnie.com