At teaching hospitals, resident performance can have direct clinical implications. But data tracking that performance are often scarce.

At the 2007 RSNA meeting in Chicago, Dr. Matthew Chan of Detroit's Wayne State University School of Medicine and the Detroit Medical Center presented findings from a study he and colleagues performed to evaluate how on-call radiology residents' CT interpretations compared with faculty radiologists' final reports.

"There have been many previous studies that examined residents' on-call performance," Chan said. "And the discrepancy rates (between residents and attendings) have always been low. But previous studies haven't used standardized methodology to evaluate resident performance."

The study was conducted using RADPEER, a system designed by the American College of Radiology (ACR) in 2002 that allows peer review to be performed during the routine interpretation of current images. RADPEER is based on a four-point scoring system, with 1 representing concurrence and 4 misinterpretation.

The group examined 502 emergency department CT scans that were performed at a university-affiliated trauma center in August 2006 and interpreted overnight by radiology residents. The group then compared these preliminary interpretations with faculty radiologists' final reports.

A panel of two residents and one faculty radiologist retrospectively scored discrepancies according to RADPEER criteria. Resident discrepancy rates were then calculated and compared with the previously established discrepancy rates of faculty radiologists at the same institution.

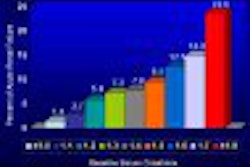

Chan and his colleagues found that:

- 0.8% (4) had discrepancies at RADPEER level 3 or 4, or "significant discrepancy"

- 4.4% (22) had discrepancies at RADPEER level 2, or "difficult case disagreement"

- 94.8% (476) had no discrepancies or minor ones with no clinical significance
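
The percentages above follow directly from the reported counts and the 502-scan total. As a minimal sketch of that arithmetic (the category labels and the `discrepancy_rates` helper are illustrative names chosen here, not part of RADPEER or the study):

```python
# Counts as reported in the article; the labels are shorthand for this sketch.
counts = {
    "significant discrepancy (RADPEER 3 or 4)": 4,
    "difficult case disagreement (RADPEER 2)": 22,
    "no or minor discrepancy": 476,
}


def discrepancy_rates(counts):
    """Return each category's share of all cases as a percentage, to one decimal."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


total = sum(counts.values())  # should match the 502 scans examined
rates = discrepancy_rates(counts)

for label, pct in rates.items():
    print(f"{label}: {pct}% ({counts[label]} of {total})")
```

The three counts sum to 502, so the categories account for every scan in the sample.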

"The residents did quite well," Chan said. "The vast majority of cases received a score of one, with only a few scoring at three or four."

And there was more good news: The group did not find significant differences in the discrepancy rates among second-, third-, and fourth-year radiology residents, nor did it find significant differences between the residents' discrepancy rates and those of the faculty radiologists.

Chan acknowledged that one of the study's limitations was the order of image interpretation: since the residents interpreted first, the attendings had the benefit of this initial interpretation. But overall, he and his team concluded that RADPEER can be adapted to keep track of resident performance as part of a continuous quality improvement effort.

"Although we retrospectively assigned RADPEER scores to resident-interpreted cases, the program could also be integrated into the daily routine of resident post-call 'read-out' sessions," Chan said.

By Kate Madden Yee, AuntMinnie.com staff writer

January 1, 2008

Copyright © 2008 AuntMinnie.com