General-purpose large language models (LLMs), such as GPT-4, can be adapted to detect and categorize multiple critical findings within individual radiology reports, using minimal data annotation, researchers have reported.

A team led by Ish Talati, MD, of Stanford University, with colleagues from the Arizona Advanced AI and Innovation (A3I) Hub and Mayo Clinic Arizona, retrospectively evaluated two "out-of-the-box" LLMs -- GPT-4 and Mistral-7B -- to see how well they might perform at classifying findings that indicate a medical emergency or require immediate action, among others. Their results were published on September 10 in the American Journal of Roentgenology.

Timely critical findings communication can be challenging due to the increasing complexity and volume of radiology reports, the authors noted. "Workflow pressures highlight the need for automated tools to assist in critical findings’ systematic identification and categorization," they said.

The study demonstrated that few-shot prompting, incorporating a small number of examples for model guidance, can aid general-purpose LLMs in adapting to the medical task of complex categorization of findings into distinct actionable categories.

To that end, Talati and colleagues evaluated GPT-4 and Mistral-7B on more than 400 radiology reports -- 252 selected from the MIMIC-III database of deidentified health data from patients in the ICU at Beth Israel Deaconess Medical Center from 2001 to 2012, and an external test set of 180 chest x-ray reports extracted from the CheXpert Plus database at Stanford Hospital.

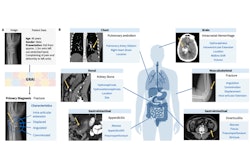

Analysis covered varying modalities (56% CT, ~30% radiography, 9% MRI, for example) and anatomic regions (mostly chest, pelvis, and head). The 252 reports were divided into a prompt engineering tuning set of 50, a holdout test set of 125, and a pool of 77 remaining reports used as examples for few-shot prompting.
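As a rough illustration of that three-way division, the sketch below splits 252 report IDs into sets of 50, 125, and 77. The article does not say how reports were assigned to each set, so the random shuffle, the seed, and the function name `split_reports` are assumptions made for illustration only.

```python
import random


def split_reports(report_ids, n_tuning=50, n_holdout=125, seed=0):
    """Shuffle report IDs and split them into tuning, holdout, and few-shot pools.

    Random assignment is an assumption; the study's actual procedure is not
    described in the article.
    """
    ids = list(report_ids)
    if len(ids) < n_tuning + n_holdout:
        raise ValueError("not enough reports for the requested split")
    random.Random(seed).shuffle(ids)
    tuning = ids[:n_tuning]
    holdout = ids[n_tuning:n_tuning + n_holdout]
    example_pool = ids[n_tuning + n_holdout:]  # remaining reports for few-shot examples
    return tuning, holdout, example_pool


tuning, holdout, example_pool = split_reports(range(252))
print(len(tuning), len(holdout), len(example_pool))  # 50 125 77
```

Keeping the few-shot example pool separate from the holdout set means no report the model is tested on also appears in its prompt.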

Manual reviews of the reports, performed separately by a board-certified radiologist and with software, classified findings by consensus into one of three categories:

- True critical finding (new, worsening, or increasing in severity since prior imaging)

- Known/expected critical finding (a critical finding that is known and unchanged, improving, or decreasing in severity since prior imaging)

- Equivocal critical finding (an observation that is suspicious for a critical finding but that is not definitively present based on the report)

The models analyzed the submitted report and provided structured output containing multiple fields, listing model-identified critical findings within each of the three categories, according to the group. Evaluation included automated text similarity metrics (BLEU-1, ROUGE-F1, G-Eval) and manual performance metrics (precision, recall) in the three categories.
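A minimal sketch of how few-shot prompting with structured output might look for the three categories above. The JSON key names, the prompt wording, and the functions `build_prompt` and `parse_output` are hypothetical, and the sample report text is invented for illustration; none of it is taken from the study, and no model API is called.

```python
import json

# Hypothetical field names for the three categories described in the article.
CATEGORIES = ("true_critical", "known_expected_critical", "equivocal_critical")


def build_prompt(examples, report):
    """Build a few-shot prompt from (report_text, findings_dict) example pairs."""
    parts = [
        "List the critical findings in the radiology report as JSON with keys: "
        + ", ".join(CATEGORIES)
        + ". Use an empty list when a category has none."
    ]
    for example_report, findings in examples:
        parts.append(f"Report:\n{example_report}\nOutput:\n{json.dumps(findings)}")
    # The new report goes last, leaving the output for the model to complete.
    parts.append(f"Report:\n{report}\nOutput:")
    return "\n\n".join(parts)


def parse_output(raw):
    """Parse a model reply into one list of findings per category."""
    data = json.loads(raw)
    return {c: [str(f) for f in data.get(c, [])] for c in CATEGORIES}


# Invented example text, for illustration only.
examples = [(
    "New large right pneumothorax.",
    {"true_critical": ["large right pneumothorax"],
     "known_expected_critical": [],
     "equivocal_critical": []},
)]
prompt = build_prompt(examples, "Possible small subdural hematoma.")

# A hand-written stand-in for a model reply.
reply = '{"true_critical": [], "equivocal_critical": ["possible small subdural hematoma"]}'
parsed = parse_output(reply)
print(parsed["equivocal_critical"])
```

Filling in missing categories with empty lists keeps every parsed reply in the same shape, which simplifies per-category scoring later.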

Precision and recall comparison for LLMs tracking true critical findings

| Type of test set and classification | GPT-4 | Mistral-7B |
| --- | --- | --- |
| **Precision** | | |
| Holdout test set, true critical findings | 90.1% | 75.6% |
| Holdout test set, known/expected critical findings | 80.9% | 34.1% |
| Holdout test set, equivocal critical findings | 80.5% | 41.3% |
| External test set, true critical findings | 82.6% | 75% |
| External test set, known/expected critical findings | 76.9% | 33.3% |
| External test set, equivocal critical findings | 70.8% | 34% |
| **Recall** | | |
| Holdout test set, true critical findings | 86.9% | 77.4% |
| Holdout test set, known/expected critical findings | 85% | 70% |
| Holdout test set, equivocal critical findings | 94.3% | 74.3% |
| External test set, true critical findings | 98.3% | 93.1% |
| External test set, known/expected critical findings | 71.4% | 92.9% |
| External test set, equivocal critical findings | 85% | 80% |
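For readers less familiar with the two metrics, the sketch below shows how precision and recall could be computed for one category from per-report sets of findings. Matching findings by exact label is a simplifying assumption (the study scored these manually), and the function name and sample labels are illustrative, not data from the paper.

```python
def precision_recall(pairs):
    """Compute precision and recall over (predicted_set, reference_set) pairs.

    Each pair holds the findings a model listed for one report and the
    findings the reviewers listed for the same report, in a single category.
    """
    tp = fp = fn = 0
    for predicted, reference in pairs:
        tp += len(predicted & reference)   # findings the model got right
        fp += len(predicted - reference)   # findings the model added
        fn += len(reference - predicted)   # findings the model missed
    precision = tp / (tp + fp) if tp + fp else float("nan")
    recall = tp / (tp + fn) if tp + fn else float("nan")
    return precision, recall


# Invented labels for two reports, for illustration only.
pairs = [
    ({"pneumothorax", "pulmonary embolism"}, {"pneumothorax"}),
    ({"intracranial hemorrhage"}, {"intracranial hemorrhage", "midline shift"}),
]
p, r = precision_recall(pairs)
print(f"precision={p:.3f} recall={r:.3f}")  # precision=0.667 recall=0.667
```

Low precision with high recall, as in some of the Mistral-7B rows, corresponds to a model that misses few findings but also lists many that reviewers did not.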

"GPT-4, when optimized with just a small number of in-context examples, may offer new capabilities compared to prior approaches in terms of nuanced context-dependent classifications," Talati and colleagues wrote. "This capability is crucial in radiology, where identification of findings warranting referring clinician alerts requires differentiation of whether the finding is new or already known."

Though the approach is promising, further refinement and additional technical development are needed before clinical implementation or other real-world applications, the group noted. The group also highlighted a role for electronic health record (EHR) integration to inform more nuanced categorization in future implementations.
