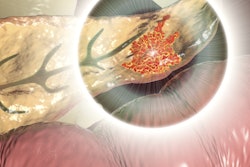

ChatGPT-4 can accurately classify pancreatic cysts on MRI and CT images, according to a study published July 16 in the Journal of the American College of Surgeons.

A team from Memorial Sloan Kettering Cancer Center in New York City found that the large language model (LLM) was "equivalent to human performance in identifying and classifying nine clinical variables used to monitor pancreatic cyst progression" -- a result that could improve patient care.

"The question I get asked most often is, 'What is the chance that this cyst is going to develop into cancer?'" study co-author Kevin C. Soares, MD, said in a statement released by the American College of Surgeons, publisher of the journal. "We now have an efficient way to look at the MRI and CT scans of thousands of patients and give [them] a better answer. This approach goes a long way to reduce anxiety and help patients feel more confident about their treatment decisions."

Pancreatic cysts are common and require ongoing surveillance, since some develop into cancer and require surgery, the researchers explained, but "manual curation of radiographic features in pancreatic cyst registries for data abstraction and longitudinal evaluation is time-consuming and limits widespread implementation."

AI could help with the task, the authors noted. To investigate its potential, the group -- led by first author Ankur Choubey, MD -- conducted a proof-of-concept study that used ChatGPT-4 to evaluate data from 3,198 MRI and CT scans of 991 adults under surveillance for pancreatic cysts, comparing the LLM's performance to radiologist readers.

Choubey and colleagues tasked the LLM with identifying nine elements used to monitor cyst progression: cyst size, main pancreatic duct size, number of lesions, main pancreatic duct dilation of 5 mm or more, branch duct dilation, presence of a solid component, calcific lesion, pancreatic atrophy, and pancreatitis.

Overall, the researchers found ChatGPT-4 showed "near-perfect accuracy" when compared with radiologist chart review. They also reported the following:

- Among categorical variables (such as number of lesions and main pancreatic duct dilation), the LLM's accuracy ranged from 97% for solid component lesions to 99% for calcific lesions.

- Among continuous variables, accuracy was 92% for cyst size and 97% for main pancreatic duct size.
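To make the accuracy figures above concrete, here is a minimal Python sketch of how per-variable agreement between LLM-extracted values and a radiologist reference could be tallied. It is an illustration only, not the study's method: the function name `per_variable_accuracy`, the field names, the `tolerance_mm` parameter, and the four sample records are all hypothetical, and the article does not say how a "match" was defined for continuous measurements.

```python
def per_variable_accuracy(llm_records, reference_records, tolerance_mm=0.0):
    """Return {variable: fraction of scans where the LLM value matches the reference}.

    Assumption (not from the study): categorical values must match exactly,
    and numeric values match when they differ by at most tolerance_mm.
    """
    matches = {}
    totals = {}
    for llm, ref in zip(llm_records, reference_records):
        for variable, ref_value in ref.items():
            llm_value = llm.get(variable)
            ref_is_number = isinstance(ref_value, (int, float)) and not isinstance(ref_value, bool)
            if ref_is_number:
                llm_is_number = isinstance(llm_value, (int, float)) and not isinstance(llm_value, bool)
                agree = llm_is_number and abs(llm_value - ref_value) <= tolerance_mm
            else:
                agree = llm_value == ref_value
            totals[variable] = totals.get(variable, 0) + 1
            matches[variable] = matches.get(variable, 0) + int(agree)
    return {variable: matches[variable] / totals[variable] for variable in totals}


# Hypothetical sample data: four scans, two of the nine variables.
reference = [
    {"cyst_size_mm": 12.0, "solid_component": False},
    {"cyst_size_mm": 25.0, "solid_component": True},
    {"cyst_size_mm": 8.0, "solid_component": False},
    {"cyst_size_mm": 30.0, "solid_component": False},
]
llm = [
    {"cyst_size_mm": 12.0, "solid_component": False},
    {"cyst_size_mm": 25.0, "solid_component": True},
    {"cyst_size_mm": 9.0, "solid_component": False},  # disagrees by 1 mm
    {"cyst_size_mm": 30.0, "solid_component": False},
]

print(per_variable_accuracy(llm, reference))
```

With exact matching, the sample data gives 75% agreement on cyst size and 100% on solid component; allowing a 1 mm tolerance would raise cyst size to 100%, which shows how much the reported figure for a continuous variable can depend on the matching rule.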

"ChatGPT-4 is a much more efficient approach, is cost-effective, and allows researchers to focus on data analysis and quality assurance rather than the process of reviewing chart after chart," Soares said. "Our study established that this AI approach was essentially equally as accurate as the manual approach, which is the gold standard."

The authors cautioned that the study used only one AI source, so its results are limited to the data that was used. More research is needed, they wrote.

"Future application of this work may potentiate the development of artificial intelligence-based surveillance models," the group concluded.
