ChatGPT can write coherent, fact-based scientific articles, right? Think again, suggests a U.K. study published April 14 in Skeletal Radiology.

A team led by Dr. Sisith Ariyaratne from the Royal Orthopedic Hospital in Birmingham found that although the artificial intelligence (AI) language tool may be able to write articles that seem convincing to the untrained eye, four of the five radiology articles the group assessed were significantly inaccurate, and all five contained fictitious references.

"While the language and structure of the papers were convincing, the content was often misleading or outright incorrect," Ariyaratne and colleagues wrote. "This raises serious concerns about the potential role of ChatGPT in its current state in scientific research and publication."

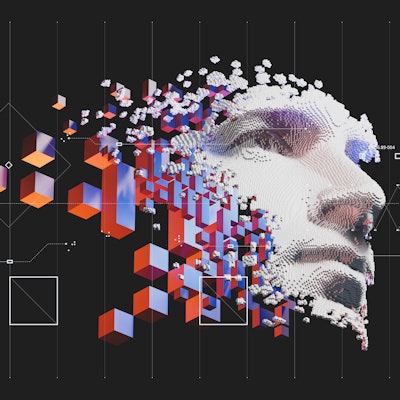

ChatGPT uses machine-learning algorithms to produce text that mimics human language. In health and medicine, researchers have explored the tool's viability, sparking debate about its potential benefits and drawbacks, including its accuracy.

Ariyaratne and colleagues set out to compare the accuracy and quality of five ChatGPT-generated academic articles with those of articles written by human authors. Two fellowship-trained musculoskeletal radiologists reviewed the ChatGPT-generated articles and graded each on a scale of 1 to 5, where 1 indicated an inaccurate article and 5 indicated an accurate one.

The researchers found that four of the five articles written by ChatGPT were significantly inaccurate and contained fictitious references. They also reported that one paper, although well written with a good introduction and discussion, still used fictitious references.

The authors wrote that while large language models such as ChatGPT may improve in the future, for now the tool may contribute to the spread of misinformation.

"Its close resemblance to authentic articles is concerning, as they could appear authentic to untrained individuals and, with its free accessibility on the internet, be an adjunct to propagation of scientific misinformation," they wrote.

Still, ChatGPT is not without potential benefits in science writing, the team noted. The researchers wrote that the tool's ability to quickly process and analyze large volumes of data could be useful in certain fields of study, and that the format of ChatGPT-generated articles could serve as a draft template for writing an expanded version of an article. However, human experts should verify any ChatGPT-generated article.

"Future research should continue to investigate the role of ChatGPT in scientific publication and explore ways to mitigate its limitations and maximize its potential benefits while also maintaining the ethical standards and quality of academic research," they concluded.