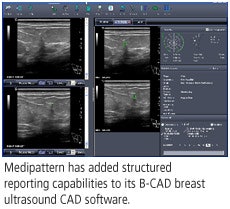

(Booth 5875) Medipattern of Toronto will highlight recent developments in its B-CAD breast ultrasound computer-aided detection (CAD) software, including structured reporting capabilities.

Sonographers now have the ability to create an entirely digital worksheet that is sent to a PACS and included in an electronic record with images. As a result, the radiologist reads information from the worksheet on the same workstation used to view images, the company said.

Medipattern said that the software can help bring sites that currently rely on sonographers' handwritten worksheet notes into a digital workflow, and that it is an improvement over entering information manually and then scanning it into the PACS.

Sonographers also can view prior exams and reports in B-CAD, and can take patient notes, describe images, and make measurements in a digital format. Radiologists can view current and prior images and reports, note details on the current exam, score lesion severity, and finalize reporting.

The new versions will begin shipping in the U.S. and internationally in the fourth quarter of 2008. The software has regulatory approval in the U.S., Canada, and China.