Can computer-aided detection (CAD) software developed with deep-learning technology perform better than mammographers? Yes, it can, at least for the task of differentiating between benign and malignant calcifications, according to a team of researchers from California.

In a series of studies presented at ECR 2017 earlier this month and at RSNA 2016, researchers led by Dr. Alyssa Watanabe of the University of Southern California (USC) Keck School of Medicine shared how deep learning can yield higher sensitivity and specificity for both breast masses and calcifications, compared with traditional mammography CAD techniques, even identifying cancer in some cases years before radiologists did.

They also reported that the deep learning-trained CAD software could differentiate benign and malignant breast calcifications better than radiologists could on their own, offering the promise of sharply reducing the number of negative biopsies.

One thing's for sure: Artificial intelligence (AI) is going to change the future of mammography, Watanabe said in her talk at ECR 2017.

"This deep-learning CAD [software] is already superior to the radiologist in classification of malignant and benign calcifications," she said.

Deep learning

A type of machine learning, deep learning is the most popular form of AI and is used today in speech recognition technology, self-driving cars, and even by Netflix to recommend your next movie, Watanabe said. In radiology applications, the machine learns to recognize different features on images after biopsy-proven cases are entered into the algorithm. It can then predict probability for malignancy, she said.

The research group at USC has been assessing the performance of qCAD, a quantitative CAD software application developed by CAD software firm CureMetrix using deep learning. The group also includes Dr. William Bradley, PhD, from the University of California, San Diego School of Medicine, as well as employees from CureMetrix.

The CAD software uses a "random forest" technique, in which multiple deep-learning classifiers and codes based on astrophysics equations are assembled as an ensemble of decision trees. The mammogram data are cycled multiple times through the "forest" so that all of the decision trees are encountered, Watanabe said.

After processing the mammograms, the CAD software's mathematical classifiers predict the probability of malignancy for the calcifications. The software summarizes these mathematical predictions as a Q score; higher Q scores indicate a greater likelihood of malignancy.
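As a rough illustration of how an ensemble of decision trees can turn many individual votes into a single malignancy score, here is a minimal sketch in Python. It is not the qCAD implementation: the one-split trees ("stumps"), the two numeric features, the toy training cases, and the names `fit_stump`, `fit_forest`, and `ensemble_score` are all assumptions made for illustration, and the deep-learning and physics-based classifiers described above are left out.

```python
import random

# Toy training set (hypothetical): each case is (features, label),
# where label 1 = biopsy-proven malignant and 0 = biopsy-proven benign.
CASES = [
    ((1.0, 2.0), 0), ((2.0, 1.5), 0), ((1.5, 3.0), 0), ((3.0, 2.5), 0),
    ((6.0, 7.0), 1), ((7.5, 6.5), 1), ((8.0, 8.0), 1), ((6.5, 7.5), 1),
]

def fit_stump(samples, feature):
    """Find the threshold on one feature that misclassifies the fewest samples.

    The stump votes "malignant" when the feature value is >= the threshold.
    """
    best_errors, best_threshold = None, None
    for x, _ in samples:
        threshold = x[feature]
        errors = sum((xi[feature] >= threshold) != (yi == 1) for xi, yi in samples)
        if best_errors is None or errors < best_errors:
            best_errors, best_threshold = errors, threshold
    return feature, best_threshold

def fit_forest(samples, n_trees=25, seed=0):
    """Train each stump on a bootstrap resample and one randomly chosen feature."""
    rng = random.Random(seed)
    n_features = len(samples[0][0])
    forest = []
    for _ in range(n_trees):
        bootstrap = [rng.choice(samples) for _ in samples]
        forest.append(fit_stump(bootstrap, rng.randrange(n_features)))
    return forest

def ensemble_score(forest, x):
    """Fraction of stumps voting "malignant"; higher means more suspicious."""
    votes = sum(x[feature] >= threshold for feature, threshold in forest)
    return votes / len(forest)

forest = fit_forest(CASES)
print(ensemble_score(forest, (9.0, 9.0)))  # case above every training value
print(ensemble_score(forest, (0.0, 0.0)))  # case below every training value
```

Averaging the votes is what makes the output a graded score rather than a yes/no answer, which is the property the Q score relies on: a case that more trees consider suspicious receives a higher value.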

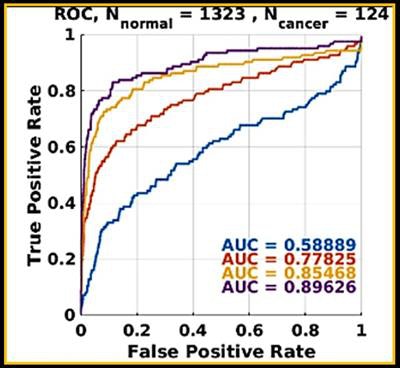

Before and after training the software with deep learning on 187 biopsy-proven cases, the researchers tested the CAD algorithm on 7,232 digital 2D screening mammograms and generated receiver operating characteristic (ROC) curves to assess its performance.

Prior to being trained with deep learning, the CAD algorithm had an area under the curve (AUC) of 0.884 for differentiating benign from malignant microcalcifications (an AUC of 1 indicates perfect accuracy). Adding deep learning increased the AUC to 0.94, and subsequent refinements to the code that incorporated physics-based methods have since raised the AUC to 0.968, according to the group.
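For readers unfamiliar with the metric, the AUC can be read as the probability that a randomly chosen malignant case receives a higher score than a randomly chosen benign one. The sketch below computes it that way in Python; the function name `auc` and the four example scores are hypothetical and are not data from the study.

```python
def auc(scores, labels):
    """Area under the ROC curve via pairwise comparison.

    Equals the probability that a randomly chosen malignant case scores
    higher than a randomly chosen benign case (ties count as half).
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

# Hypothetical scores for four cases (1 = malignant, 0 = benign).
example_scores = [0.10, 0.40, 0.35, 0.80]
example_labels = [0, 0, 1, 1]
print(auc(example_scores, example_labels))  # 0.75
```

In this toy example, three of the four malignant/benign pairs are ranked correctly, so the AUC is 0.75. By the same reading, an AUC of 0.968 would mean roughly 97 of every 100 such pairs are ranked correctly.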

That's "quite outstanding and outperforms the CAD that's currently commercially available [based on published data submitted to the U.S. Food and Drug Administration]," Watanabe said.

By enabling the objective assessment of any changes seen on sequential mammograms, the software's quantitative scoring may also enhance radiologists' accuracy, she said.

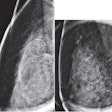

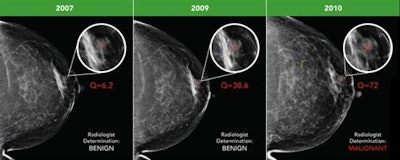

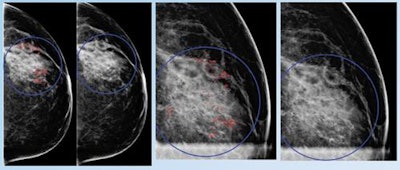

Early breast cancer was flagged by the CAD software three years prior to biopsy and showed a progressive increase in quantitative score over the three years. All images courtesy of Dr. Alyssa Watanabe.

In other results, the researchers found that progressive deep-learning training continued to improve the performance of the CAD software over time for breast masses.

Progressive machine learning also improved the software's accuracy for breast masses, as demonstrated by increasingly higher areas under the ROC curve after training.

"As more and more cases of biopsy-proven breast cancers and benign lesions are entered into the data bank, the CAD 'learned' to distinguish between these lesions with greater and greater accuracy," the authors wrote in their poster at ECR 2017. "The potential to enter massive amounts of ground truth cases could lead to greater and greater accuracy in flagging cancers and not flagging benign lesions such as fat necrosis."

Avoiding benign biopsies

While almost 2% of screening mammograms result in biopsy, up to 80% of those biopsies are benign. Decreasing the number of negative breast biopsies would benefit patients and be cost-effective; $4 billion is spent each year in the U.S. on mammography false positives, Watanabe said.

To see if the deep learning-trained CAD software could help avoid some of these unnecessary biopsies, the researchers used an enriched dataset of 391 mammograms with biopsy-proven calcifications (302 benign and 89 malignant) provided by two institutions: a community-based imaging practice and an academic radiology department. They then compared the CAD software's performance with the biopsy recommendations previously provided by the fellowship-trained breast imaging radiologists at the two sites.

At the first institution, the positive predictive value of breast biopsy could be increased from 32% to 56% with the use of CAD, she said. Furthermore, up to 63% of benign breast biopsies could potentially have been avoided if the CAD software's predictions were used.
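The two figures quoted for the first institution are consistent with each other, as a short calculation shows. The sketch below assumes that the CAD software keeps every malignant case (100% sensitivity) and removes only benign biopsies; the function name `ppv_after_avoiding_benign` is hypothetical.

```python
def ppv_after_avoiding_benign(ppv_before, benign_avoided_fraction):
    """Positive predictive value of biopsy after some benign biopsies are avoided.

    Assumes every malignant case is still biopsied, so only the count of
    benign (false-positive) biopsies shrinks.
    """
    true_positives = ppv_before            # share of biopsies that were malignant
    false_positives = 1.0 - ppv_before     # share of biopsies that were benign
    remaining_false_positives = false_positives * (1.0 - benign_avoided_fraction)
    return true_positives / (true_positives + remaining_false_positives)

new_ppv = ppv_after_avoiding_benign(ppv_before=0.32, benign_avoided_fraction=0.63)
print(f"{new_ppv:.0%}")  # 56%
```

Starting from a positive predictive value of 32%, avoiding 63% of the benign biopsies leaves 0.32 / (0.32 + 0.68 × 0.37) ≈ 0.56, matching the 56% reported.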

Performance of CAD software vs. radiologists by false-positive rates

| Institution | Radiologists | CAD software (at 100% sensitivity threshold for cancer detection) | Potential reduction in breast biopsies from use of CAD software |
| --- | --- | --- | --- |
| Academic radiology department | 80% | 35% | 57% |
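The "100% sensitivity threshold" in the table refers to a score cutoff low enough that no cancer is missed. One way to picture it, sketched below in Python with hypothetical scores and hypothetical function names, is to set the cutoff at the lowest score among the malignant cases and then count how many benign cases fall beneath it. This is an illustration of the idea, not the procedure the researchers used.

```python
def threshold_at_full_sensitivity(scores, labels):
    """Highest cutoff that still flags every malignant case (score >= cutoff)."""
    return min(s for s, y in zip(scores, labels) if y == 1)

def avoidable_benign_fraction(scores, labels):
    """Share of benign cases scoring below the full-sensitivity cutoff."""
    cutoff = threshold_at_full_sensitivity(scores, labels)
    benign_scores = [s for s, y in zip(scores, labels) if y == 0]
    spared = sum(s < cutoff for s in benign_scores)
    return spared / len(benign_scores)

# Hypothetical scores for seven biopsied cases (1 = malignant, 0 = benign).
toy_scores = [0.10, 0.20, 0.55, 0.70, 0.40, 0.90, 0.65]
toy_labels = [0, 0, 0, 0, 1, 1, 1]

print(threshold_at_full_sensitivity(toy_scores, toy_labels))  # 0.4
print(avoidable_benign_fraction(toy_scores, toy_labels))      # 0.5
```

Note that a cutoff chosen and evaluated on the same cases tends to look better than it would on new patients, so a figure obtained this way is best treated as an upper bound.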

"Over half of the breast biopsies that were recommended and performed by the academic radiologists could have been eliminated if the CAD had been utilized for the predictions," Watanabe said. "So, in other words, this CAD [software] is outperforming academic radiologists in recommendations for biopsying calcifications."

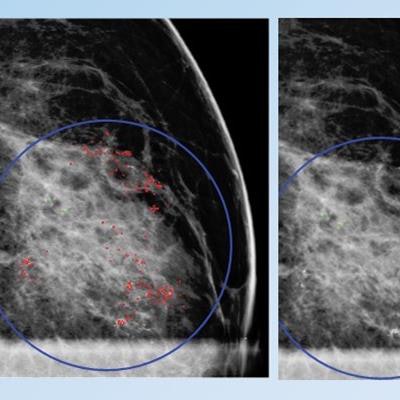

False positive for radiologist and true negative for qCAD. Fine linear branching and rim calcifications measuring 7 cm with regional distribution in the middle lateral region: path-proven fat necrosis.

With deep-learning training, the CAD software can recognize and eliminate benign lesions, she said.

"This dramatically reduces most false markings, which are a nuisance and distraction for mammographers," she said.