OSAKA - Spurred by the need for more advanced views of the human body and fueled by rapidly growing computing power, visualization and virtual reality in medicine are entering a period of accelerated advancement.

"We're in a very exciting generation in medical imaging," said Richard Robb, Ph.D., from the Mayo Clinic in Rochester, MN. "We've got very exciting opportunities to contribute to the well-being of humans around the world because of the advances in medical imaging we have available to us."

But even as image processing advances beyond traditional 2D and 3D visualization, there is plenty of unfinished business left to work on, including unmet needs in daily radiology practice. Segmentation and image fusion need work, to name two areas.

Robb offered a primer on image processing at the Computer Assisted Radiology and Surgery (CARS) meeting, which included later talks by Robb and his co-moderator Naoki Suzuki from the Jikei University School of Medicine in Tokyo. Some advanced imaging research covered in these discussions will be the focus of future articles.

Dealing with data

Modern biomedical imaging involves three stages: image acquisition, image processing, and application of those images -- all with the fundamental goal of helping patients, said Robb, who is a professor of computer science and biomedicine and director of biomedical imaging at the Mayo Clinic College of Medicine.

Acquiring image data involves various data sources, different geometric configurations, and reconstruction from projections. The images then undergo a processing phase, though in radiology the raw image data are sometimes still passed directly to the physician.

As image processing has advanced to 3D, 4D, and beyond, however, processing has become more useful and more important. The processing phase includes enhancing images, segmentation of regions of interest, fusing multimodality images, and rendering them in novel ways.

"Importantly, the images become measurements," Robb said. "They may be subjective measurements, mental measurements, but more and more they're becoming actual quantitative measurements about what's going on with the patients. Those images and measurements can then be used to guide interventional procedures and to intervene effectively."

What's a dimension?

For the people who design processing methods, progress always involves a feedback and feed-forward loop that fosters further evolution, Robb said. The number of imaging dimensions is one way to track this evolution.

- The 2D standard, on the x- and y-axes, is still widely used in medical imaging.

- 3D, which adds depth to include the x-, y-, and z-axes, came about with CT and provides true spatial anatomy.

- The 4D standard (x, y, z, t), combining space and time, is now emerging thanks to the development of fast scanners.

- On the horizon is 5D and beyond (x, y, z, t, f), which combines space, time, and any variety of functions, such as temperature, that can be measured together.
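
To make the progression concrete, here is a minimal Python sketch that treats each category as a named set of axes and counts the values it stores. The axis sizes are hypothetical, chosen only to show how every added dimension multiplies the data volume; real matrix sizes vary by scanner and protocol.

```python
from math import prod

# Hypothetical axis sizes for illustration only; not taken from any scanner.
LAYOUTS = {
    "2D": {"x": 512, "y": 512},
    "3D": {"x": 512, "y": 512, "z": 300},
    "4D": {"x": 512, "y": 512, "z": 300, "t": 20},
    "5D": {"x": 512, "y": 512, "z": 300, "t": 20, "f": 3},
}


def sample_count(layout):
    """Total number of stored values: the product of all axis lengths."""
    return prod(layout.values())


def flat_index(layout, coords):
    """Row-major offset of one sample; the first axis listed varies slowest."""
    offset = 0
    for axis, size in layout.items():
        i = coords[axis]
        if not 0 <= i < size:
            raise IndexError(f"{axis}={i} is outside 0..{size - 1}")
        offset = offset * size + i
    return offset


for name, layout in LAYOUTS.items():
    print(name, sample_count(layout))
```

Each extra axis multiplies the sample count by its length, which is one reason fast scanners and fast computers had to arrive before 4D and 5D imaging became practical.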

"These (additional) functions may not be true dimensions in the mathematical sense," Robb said. "But in the imaging sense, I think that as we add independent variables or variables associated through space or time or both, in the sense of the image, we can think of that as added dimensionality where we have some functionality."

Thus, dimensions in the 5D model might include temperature, pressure, elasticity, or any other phenomenon that describes a process in the Fourier space. Several additional functions might also be added if they can be simultaneously measured or registered in space or time.

When additional dimensions cannot be captured simultaneously, they are more properly categorized as "hyperspace" or N-dimensional images (x, y, z, t, f1, …, fn), Robb said, cautioning that the definitions are imperfect:

- Different dimensions may not be synchronous (e.g., related images over time).

- Change is both a confounding problem and an important dimension.

- Functions may not always be entirely independent of one another.

What has evolved in medical imaging is a wonderful range of spatial and functional dimension space in medical images, he said. CT and ultrasound systems allow us to look at anatomy and physiology, MRI looks at anatomy in elegant ways that are different from the other modalities, and nuclear medicine has opened a window on functional and molecular processes. The ultimate goal may well be chemistry -- what is happening chemically in each cell, tissue layer, and molecule is critically important, Robb said.

In the final analysis, imaging represents a continuum that can all be made available to us: anatomy, physiology, chemistry and molecular processes, he said.

Segmentation not there yet

The most compelling work going on in imaging today is in image enhancement and processing, Robb said. And image segmentation is the biggest unsolved problem.

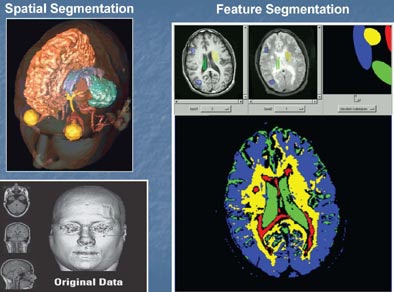

To be sure, there are "some segmentation successes in some modalities for some organs and some algorithms," he said, but there is no single algorithm that offers a complete solution to the segmentation problem. In particular, automated segmentation would be useful; everyone wants a computer smart enough to segment out regions of interest automatically, but a solution is not at hand. Current areas of research include spatial segmentation and feature segmentation, meaning tissue classification.
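
Because no single algorithm solves segmentation, the following shows only one classic building block: a minimal Python sketch of seeded region growing, a simple form of spatial segmentation that collects pixels connected to a seed point whose intensities fall inside a chosen window. The 2D grid, 4-connectivity, and intensity window are assumptions for illustration, not a method presented at CARS.

```python
from collections import deque


def region_grow(image, seed, low, high):
    """Boolean mask of pixels 4-connected to `seed` with low <= value <= high.

    image: list of rows of intensities (hypothetical 2D input).
    seed: (row, col) starting pixel chosen by the user.
    """
    rows, cols = len(image), len(image[0])
    mask = [[False] * cols for _ in range(rows)]
    r0, c0 = seed
    if not low <= image[r0][c0] <= high:
        return mask  # the seed itself is outside the window: empty region

    mask[r0][c0] = True
    queue = deque([(r0, c0)])
    while queue:  # breadth-first flood fill from the seed
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols and not mask[nr][nc]
                    and low <= image[nr][nc] <= high):
                mask[nr][nc] = True
                queue.append((nr, nc))
    return mask
```

A pixel with an in-window value that is not connected to the seed stays excluded, which is exactly where such simple rules break down on real anatomy with touching structures of similar intensity.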

Better solved is the problem of image registration and image fusion, Robb said. But there's still room for improvement in the accuracy of image registration, both intramodality and cross-modality.

Spatial and feature segmentation are two useful forms of volume segmentation. Images courtesy of Richard Robb, Ph.D., and Mayo Clinic College of Medicine.

Virtual-to-physical image registration, such as mapping images onto the patient, enables the use of preoperative imaging information in an interventional procedure. Intramodality registration, such as combining T1 and T2 MRI images, is another focus of researchers.
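
Registration with fiducial markers is one common route from preoperative images to the patient. The minimal sketch below assumes paired 2D landmark coordinates and a purely rigid model (rotation plus translation) and solves for the least-squares transform in closed form; real systems work in 3D and must cope with marker localization error, so treat this only as an illustration of the idea.

```python
import math


def rigid_register_2d(source, target):
    """Least-squares rotation angle and translation mapping source landmarks onto target landmarks."""
    n = len(source)
    if n != len(target) or n < 2:
        raise ValueError("need at least two paired landmarks")
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    tx = sum(q[0] for q in target) / n
    ty = sum(q[1] for q in target) / n

    # Accumulate cross and dot products of the centered point pairs.
    num = den = 0.0
    for (px, py), (qx, qy) in zip(source, target):
        ax, ay = px - sx, py - sy
        bx, by = qx - tx, qy - ty
        num += ax * by - ay * bx
        den += ax * bx + ay * by
    theta = math.atan2(num, den)

    # Translation carries the rotated source centroid onto the target centroid.
    c, s = math.cos(theta), math.sin(theta)
    shift = (tx - (c * sx - s * sy), ty - (s * sx + c * sy))
    return theta, shift


def apply_rigid(theta, shift, point):
    """Rotate `point` by theta (radians) about the origin, then translate by `shift`."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = point
    return (c * x - s * y + shift[0], s * x + c * y + shift[1])
```

Feeding the landmarks through `apply_rigid` with a known angle and shift and then calling `rigid_register_2d` recovers that transform, a quick self-check before using measured fiducials.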

Facing fusion

In his presentation, Robb also discussed volume image fusion. "I separate volume image fusion from the registration problem, although registration is a prerequisite for fusion," he said. "Basically, we mean fusing structure to structure -- that is, one kind of anatomy to another kind of anatomy -- (for example) CT of the bone and MRI of the brain, registering those and fusing them to see them together in a useful way."

Volume fusion. Images courtesy of Richard Robb, Ph.D., and Mayo Clinic College of Medicine.

Structure-to-function is another kind of volume image fusion, combining anatomy and physiology, such as MRI and PET, he said.

Robb and his colleagues define image fusion as "the integration of multiple scalar-voxel images into a single vector-voxel image, involving correction of any position, orientation, scaling or sampling differences among separate images, thus producing a coherent 'hyperimage' potentially more useful for quantitative classification and analysis, than any or all component images combined."
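
The definition implies a data structure: once the component images have been registered and resampled onto one grid, each voxel carries a vector instead of a scalar. The sketch below uses a hypothetical `ScalarVolume` container with a flat, row-major data list and simply refuses to fuse volumes whose grids differ, leaving the correction of position, orientation, scaling, and sampling to the registration step.

```python
from dataclasses import dataclass


@dataclass
class ScalarVolume:
    """Hypothetical container: one scalar per voxel, stored flat in row-major order."""
    shape: tuple    # (nz, ny, nx)
    spacing: tuple  # voxel size in mm, (dz, dy, dx)
    data: list      # len(data) == nz * ny * nx


def fuse_vector_voxels(*volumes):
    """Zip co-registered scalar volumes into a list of per-voxel tuples."""
    if not volumes:
        raise ValueError("need at least one volume")
    ref = volumes[0]
    n = ref.shape[0] * ref.shape[1] * ref.shape[2]
    for vol in volumes:
        # Fusion assumes registration and resampling have already happened.
        if vol.shape != ref.shape or vol.spacing != ref.spacing:
            raise ValueError("volumes must share one grid; register and resample first")
        if len(vol.data) != n:
            raise ValueError("data length does not match shape")
    return [tuple(values) for values in zip(*(vol.data for vol in volumes))]
```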

This definition offers the opportunity to fuse images, but how are they best displayed? Take a multimodality image of the brain: CT plus T1 and T2 MRI, fused with a rigid registration algorithm. If you assign red to the CT, blue to the T1, and green to the T2 in a single RGB-rendered image, you get a vector image with separate but usable information from each modality, Robb said.
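
The color assignment can be sketched in a few lines. This minimal Python version assumes the three volumes are already registered and flattened to equal-length lists, and it linearly rescales each modality to 0..255 on its own before packing the channels, using the mapping described above (CT to red, T2 to green, T1 to blue). Per-channel min-max scaling is an assumption made for brevity; a real display would more likely apply a clinical window to each modality.

```python
def rescale_0_255(values):
    """Linearly map one modality's values onto 0..255 (min-max scaling, an assumption)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)  # a flat channel carries no contrast
    return [round(255 * (v - lo) / (hi - lo)) for v in values]


def fuse_rgb(ct, t1, t2):
    """Pack registered CT, T1 and T2 values into (R, G, B): CT->R, T2->G, T1->B."""
    if not len(ct) == len(t1) == len(t2):
        raise ValueError("volumes must be registered to the same voxel grid")
    return list(zip(rescale_0_255(ct), rescale_0_255(t2), rescale_0_255(t1)))
```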

"At each point in space in the tissue in the brain we have three measures: We have the number from the CT, we have the number from the T1, and we have the number from the T2," he said. "All three of those together tell us something about that little piece of tissue, and therein lies the power of the vector-voxel image, as we have more information about each pixel if the registration is accurate and reproducible than we have with any one of these measures alone. And that means that we might be able to much better classify the tissues, differentiate abnormality from normality, differentiate grade of pathology, and so forth."
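
Robb's point about classification can be shown with a deliberately simple classifier. The sketch below assigns each vector voxel to the nearest class centroid in (CT, T1, T2) space; the class names and centroid values are invented for illustration and do not describe real tissue, and nearest-centroid is chosen only because it is short, not because it is the method Robb described.

```python
import math

# Hypothetical centroids in (CT, T1, T2) space; the numbers are made up.
CENTROIDS = {
    "class_a": (40.0, 10.0, 80.0),
    "class_b": (40.0, 60.0, 20.0),
}


def classify(voxel, centroids=CENTROIDS):
    """Return the label whose centroid is closest (Euclidean) to the vector voxel."""
    return min(centroids, key=lambda label: math.dist(voxel, centroids[label]))


# Both classes share the same CT value, so CT alone cannot separate them;
# the T1 and T2 components decide the label.
print(classify((41.0, 55.0, 25.0)))
```

In practice the channels would need comparable scaling, or a learned distance, so that one modality's units do not dominate the result.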

Thus, the whole is more than the sum of its parts, and images are most useful when assessed quantitatively. But beyond three dimensions things get a lot harder, which is why image fusion is one of the great computer science problems of the day, he said.

At their core, images are measurements, and it is as measurements that they are most useful, Robb said. Certainly they can be interpreted subjectively very well and for many reasons, but quantitative analysis is being realized more and more. The initial promise of CT was quantitative, he said: for the first time the images had Hounsfield unit (HU) values attached to the tissues. That promise is still not fully realized, and realizing it should be the goal.
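
The Hounsfield scale itself is a linear rescaling of the measured linear attenuation coefficient μ relative to water and air, HU = 1000 × (μ − μ_water) / (μ_water − μ_air), which pins water at 0 HU and air at −1000 HU. A minimal sketch follows; the attenuation values passed in are placeholders, since real coefficients depend on the beam energy.

```python
def to_hounsfield(mu, mu_water, mu_air=0.0):
    """Convert a linear attenuation coefficient to Hounsfield units.

    mu_air defaults to 0.0, a common approximation since air barely attenuates.
    """
    if mu_water == mu_air:
        raise ValueError("mu_water and mu_air must differ")
    return 1000.0 * (mu - mu_water) / (mu_water - mu_air)


def from_hounsfield(hu, mu_water, mu_air=0.0):
    """Inverse mapping: express an HU value as a linear attenuation coefficient."""
    return mu_water + hu * (mu_water - mu_air) / 1000.0
```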

Robb also discussed some important imaging terms, such as modeling. Statistical, spatial, and deformation modeling have become very important, particularly in developing interventional guidance techniques, he said.

Image filtering and enhancement have important roles in imaging as well, enabling the viewer to see elements within the image data that would not otherwise be seen: for example, enhancing pulmonary structures in CT data with adaptive histogram equalization (AHE), or correcting MR field bias with convolution filters. Filtering is not a linear process; it is a display process and therefore irreversible, Robb said.
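
AHE is a local variant of histogram equalization, which remaps gray levels through the image's cumulative histogram so that they spread across the full display range. The sketch below implements only the global version on a flat list of integer gray levels; an adaptive scheme would apply the same kind of mapping within local neighborhoods, and variants such as contrast-limited AHE also cap the amplification. Those refinements are omitted here.

```python
def equalize(pixels, levels=256):
    """Global histogram equalization of integer gray levels in [0, levels)."""
    hist = [0] * levels
    for p in pixels:
        if not 0 <= p < levels:
            raise ValueError(f"gray level {p} outside 0..{levels - 1}")
        hist[p] += 1

    # Cumulative distribution function of the gray levels.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    n = len(pixels)
    cdf_min = next((c for c in cdf if c > 0), 0)
    if n == cdf_min:  # empty image or a single gray level: nothing to stretch
        return list(pixels)

    # Lookup table: map each level's CDF value onto 0..levels-1.
    lut = [round((c - cdf_min) * (levels - 1) / (n - cdf_min)) for c in cdf]
    return [lut[p] for p in pixels]
```

Four adjacent gray levels such as 50 through 53 are spread across the whole 0..255 range, which is the kind of contrast stretch that makes faint structures visible. The original values cannot be recovered from the output, consistent with treating such filtering as a display step.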

Image filtering and enhancement. Images courtesy of Richard Robb, Ph.D., and Mayo Clinic College of Medicine.

Volume image rendering is a problem that is largely solved, he said. Still needing improvement in this area, though, are fusion applications that render fused images interpretable. And in diffusion tensor imaging, for example, viewing methods better than tractography need to be developed.

"We are producing clinically very useful images with our volume rendering algorithms and they are very fast," Robb said. With the right algorithms and today's powerful PCs, volume rendering can be achieved without image accelerators.

"Tissue maps are reproducible across a spectrum of images, and that's where some of the important research in realistic tissue rendering is taking place today," he said.

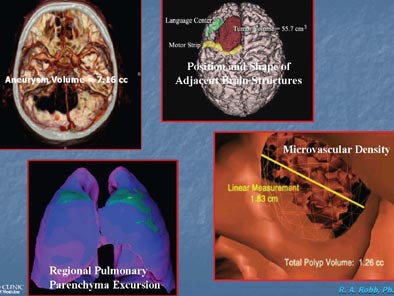

Quantitative analysis can include measuring volumes and adjacency, finding where critical structures are located relative to pathological structures, and using color-coded parametric maps to identify change, for example over the entire respiratory cycle. It can also mean examining the blood supply of a polyp in virtual colonoscopy to better assess the need for its removal.
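
Two of those measurements reduce to simple arithmetic once a segmentation exists. Assuming a binary mask and known voxel spacing in millimeters (both hypothetical inputs here), volume is the voxel count times the voxel volume, and the proximity of a critical structure to a lesion can be taken as the smallest distance between their voxels.

```python
import math


def volume_ml(mask, spacing_mm):
    """Volume of a binary mask: voxel count times voxel volume (1 mL = 1000 mm^3).

    mask: flat iterable of 0/1 or bool voxels; spacing_mm: (dz, dy, dx).
    """
    dz, dy, dx = spacing_mm
    return sum(1 for v in mask if v) * dz * dy * dx / 1000.0


def min_distance_mm(voxels_a, voxels_b, spacing_mm):
    """Smallest center-to-center distance between two voxel sets, in mm.

    voxels_a, voxels_b: iterables of (z, y, x) integer indices.
    Brute force over all pairs: fine for a sketch, slow for real volumes.
    """
    def to_mm(idx):
        return [i * s for i, s in zip(idx, spacing_mm)]

    points_b = [to_mm(b) for b in voxels_b]
    return min(math.dist(to_mm(a), pb) for a in voxels_a for pb in points_b)
```

Anisotropic spacing matters: the same index offset can mean very different physical distances along different axes, which is why both functions take the spacing explicitly.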

Quantitative volume analysis. Images courtesy of Richard Robb, Ph.D., and Mayo Clinic College of Medicine.

Volume image modeling means patient-specific modeling, Robb said. "We have the power now because of fast scans, fast rendering, fast reconstruction, reasonable segmentation and registration algorithms to make patient-specific models ... that are geometrically, technically, and functionally accurate. They're in the computer and they can tell us something about anatomy, pathology, and physiology."

The goal of modeling is to go from voxels to polygons, for example from a solid voxel representation to surface or solid models that are patient-specific and generally require less data than the original acquisitions. If carefully built, these models can be extremely accurate and useful tools for looking at the interior or the exterior of structures, and they are ideal for training and education.
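
Going from voxels to polygons can be sketched at its crudest level: emit one square face wherever a filled voxel borders an empty one. This minimal Python version assumes a binary segmentation given as integer (x, y, z) voxel indices with unit voxel size. It yields a blocky surface; smoother and more accurate models are usually produced with methods such as marching cubes followed by mesh simplification, which are not shown.

```python
# Six face-neighbor offsets in (x, y, z).
NEIGHBORS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def face_corners(voxel, direction):
    """Four corners of the unit-cube face of `voxel` that points along `direction`."""
    axis = next(i for i in range(3) if direction[i] != 0)
    base = list(voxel)
    if direction[axis] > 0:
        base[axis] += 1  # the positive-side face sits one unit further along this axis
    u, v = [i for i in range(3) if i != axis]
    corners = []
    for du, dv in ((0, 0), (1, 0), (1, 1), (0, 1)):
        p = list(base)
        p[u] += du
        p[v] += dv
        corners.append(tuple(p))
    return tuple(corners)


def boundary_quads(voxels):
    """One quad per voxel face that borders an empty neighbor.

    Corner winding is not normalized for outward-facing normals in this sketch.
    """
    occupied = set(voxels)
    quads = []
    for x, y, z in occupied:
        for dx, dy, dz in NEIGHBORS:
            if (x + dx, y + dy, z + dz) not in occupied:
                quads.append(face_corners((x, y, z), (dx, dy, dz)))
    return quads
```

Because faces shared by two filled voxels are skipped, the quad count also gives a rough surface-area estimate (quads times face area), although the staircase surface overestimates the area of smooth, oblique boundaries.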

Parametric displays use shape, colors, and textures to distinguish tissue properties. Bone mineral density of the femoral bone is higher in the 29-year-old man (right) compared to the 95-year-old man (left). Images courtesy of Richard Robb, Ph.D., and Mayo Clinic College of Medicine.

"If we have models, we have surfaces and we can map onto those surfaces other kinds of useful information about structure or function in a quantitative way through parametric displays," he said. Structure-feature fusion models add function to anatomy through time.

By Eric Barnes

AuntMinnie.com staff writer

July 4, 2006


Copyright © 2006 AuntMinnie.com