An image processing technique forged in Japan could someday make wireless capsule endoscopy (CE) more useful by analyzing and cobbling together the somewhat unpredictable sequence of small intestine images generated by the tiny capsule endoscopy cameras. The method isn't nearly ready for clinical use, but the first experiments have already conquered several technical hurdles.

Because the small intestine is so difficult to evaluate noninvasively, there was hope when capsule endoscopy was introduced in 2001 that the modality would become a useful adjunctive tool for evaluating small-intestine disease.

And capsule endoscopy is useful, although its images are notoriously time-consuming and error-prone to read: physicians must review hours of footage captured by a tiny camera with a limited field-of-view.

There is no question that capsule endoscopy finds significant disease easily and noninvasively, but it needs improvement. There's no predicting which way the camera will point as it moves through the digestive tract via peristalsis, for example. The technology also misses lesions when the camera doesn't happen to aim itself toward them as it passes through the small intestine.

And there's no easy way to connect the seemingly random snapshots, which are acquired at about two frames per second. That is the problem addressed by a research team whose study was presented at the 2009 Computer Assisted Radiology and Surgery (CARS) meeting in Berlin.

Because of the small intestine's complex shape and the camera's limited field-of-view, only a small portion of the gastrointestinal tract is visible at any one time, which makes visual inspection difficult for the physician, said Dr. Khayrul Bashar in his talk.

Bashar, along with Kensaku Mori and colleagues from Nagoya University in Japan, found that stitching endoscopic images together into mosaics can expose mucosal surfaces more accurately for visual inspection.

"If we can generate a mosaic image of the gastrointestinal tract, then it may be feasible for specialists and doctors to diagnose disease" more quickly and accurately, Bashar said in his talk.

|

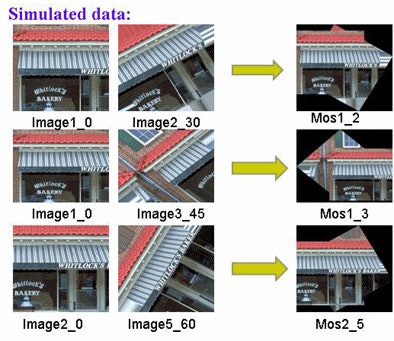

| Simulated results using images of buildings illustrate the mosaic process in well-delineated structures. |

Choosing a workable motion model was the first challenge. Difficulties included moderate-to-large overlaps between consecutive image frames, a lack of stable high-level or low-level features, and nonuniform illumination and blurring artifacts, Bashar said.

A number of registration methods are in use, including intensity-based, frequency-based, and feature-based techniques, but many are poorly suited to wireless capsule endoscopy because consecutive images differ so much, he said.

"We cannot apply the feature-based registration techniques because there are no stable features, not even low-level features," Bashar said. "We also have problems of blurring because the capsule is moving, and also the tissue is contracting and expanding, so it's a difficult job to do the registration."

Consecutive frames are related mainly by translation and rotation, and they overlap by more than 40%, Bashar said. Because these two effects dominate in capsule endoscopy images, the team chose a Euclidean (rigid) transformation, which combines a rotation with a translation, as the motion model.
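To make the motion model concrete, here is a minimal sketch of a Euclidean transformation in the common 3 × 3 homogeneous-coordinate form. The matrix convention (rotate first, then translate) and the helper names `euclidean_matrix` and `transform_points` are assumptions for illustration; the talk did not specify how the team parameterized the model.

```python
import numpy as np

def euclidean_matrix(theta_deg, tx, ty):
    """Hypothetical helper: 3x3 homogeneous rigid transform (rotate, then translate)."""
    t = np.deg2rad(theta_deg)
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, -s, tx],
                     [s,  c, ty],
                     [0.0, 0.0, 1.0]])

def transform_points(matrix, points):
    """Apply a 3x3 homogeneous transform to an (N, 2) array of (x, y) points."""
    pts = np.asarray(points, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])  # append w = 1
    mapped = homog @ matrix.T
    return mapped[:, :2]

# Usage: rotate (1, 0) by 90 degrees, then shift it by (10, 5).
M = euclidean_matrix(90, 10, 5)
print(transform_points(M, [[1, 0]]))  # approximately [[10, 6]]
```

Only three parameters (one angle, two offsets) describe each frame pair, and distances between points are preserved, which is why this model cannot capture the tissue contraction Bashar described.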

Given the organ's constant motion, the overlap between frames, and the lack of stable features, the group settled on a Fourier phase correlation approach to estimate the transformation parameters. These techniques are then applied to establish geometric correspondence between the images, Bashar explained.

The Fourier shift theorem holds that translating a function changes only the phase of its Fourier transform, not its magnitude. Thus, if two images f1(x, y) and f2(x, y) are related by a translation, their phase difference is equal to the phase of the cross-power spectrum.
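In conventional notation (a standard textbook statement, not a slide from the talk), with F1 and F2 the Fourier transforms of f1 and f2 and (x0, y0) the shift:

$$
f_2(x, y) = f_1(x - x_0,\, y - y_0) \quad\Longrightarrow\quad F_2(u, v) = F_1(u, v)\, e^{-j 2\pi (u x_0 + v y_0)}
$$

$$
R(u, v) = \frac{F_2(u, v)\, F_1^{*}(u, v)}{\left| F_2(u, v)\, F_1^{*}(u, v) \right|} = e^{-j 2\pi (u x_0 + v y_0)}, \qquad \mathcal{F}^{-1}\{R\}(x, y) = \delta(x - x_0,\, y - y_0)
$$

The normalized cross-power spectrum R keeps only the phase difference, so its inverse transform is an impulse located at the shift (x0, y0).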

This property makes the technique well suited to determining the alignment and rotation of images, because it can detect even small differences between two images, Bashar said. In a pair of images where "the second image is shifted horizontally from the first image, if you apply the Fourier transform there is a peak exactly at the point of translation," that is, at the offset between the two images, and the location of that peak guides the registration.
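The translation step can be sketched with NumPy's FFT routines. This is a minimal textbook phase correlation, assuming grayscale frames of equal size and integer, circular shifts; `phase_correlation` is a hypothetical name, and the Nagoya group's own implementation was not described at this level of detail.

```python
import numpy as np

def phase_correlation(img1, img2, eps=1e-12):
    """Estimate the integer (dy, dx) shift that maps img1 onto img2.

    Returns the shift and the height of the correlation peak, which is close
    to 1.0 for an exact circular shift and lower for weaker matches.
    """
    f1 = np.fft.fft2(img1)
    f2 = np.fft.fft2(img2)
    cross = f2 * np.conj(f1)
    r = cross / (np.abs(cross) + eps)   # normalized cross-power spectrum: phase only
    corr = np.real(np.fft.ifft2(r))     # ideally a single sharp peak at the shift
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Indices past the midpoint wrap around and stand for negative shifts.
    shift = tuple(int(p) - n if p > n // 2 else int(p) for p, n in zip(peak, corr.shape))
    return shift, float(corr[peak])

# Usage: shift a random test frame 5 rows down and 3 columns left, then recover it.
rng = np.random.default_rng(0)
frame = rng.random((64, 64))
moved = np.roll(frame, shift=(5, -3), axis=(0, 1))
shift, height = phase_correlation(frame, moved)
print(shift, round(height, 3))
```

Real capsule frames are not circular shifts of one another, so in practice the peak is lower and broader; the small `eps` only guards against division by zero.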

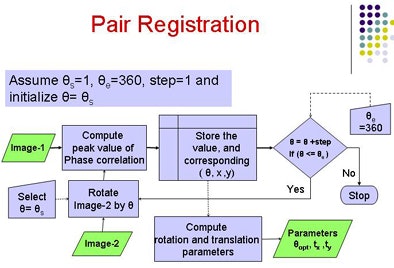

When an image pair differs by both a translation and a rotation, one image can be rotated with respect to the other and the phase correlation computed repeatedly to find the optimal rotation and translation parameters together. The team used a simplified procedure based on research by Probhakara et al. (2006) to estimate the phase correlation parameters.
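A brute-force version of this rotation search can be sketched by rotating one frame through a list of candidate angles and keeping the angle whose phase-correlation peak is strongest. This is an illustration under stated assumptions, not the simplified procedure from Probhakara et al. that the team used: the nearest-neighbour rotation, the peak-height criterion, and all function names are hypothetical choices.

```python
import numpy as np

def phase_correlation(img1, img2, eps=1e-12):
    """Return ((dy, dx), peak height) for the circular shift mapping img1 onto img2."""
    cross = np.fft.fft2(img2) * np.conj(np.fft.fft2(img1))
    corr = np.real(np.fft.ifft2(cross / (np.abs(cross) + eps)))
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    shift = tuple(int(p) - n if p > n // 2 else int(p) for p, n in zip(peak, corr.shape))
    return shift, float(corr[peak])

def rotate_nearest(img, angle_deg):
    """Rotate about the image centre with nearest-neighbour sampling; empty corners become 0."""
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = np.deg2rad(angle_deg)
    yy, xx = np.mgrid[0:h, 0:w]
    # Inverse mapping: for every output pixel, look up the source pixel it came from.
    src_x = np.cos(t) * (xx - cx) + np.sin(t) * (yy - cy) + cx
    src_y = -np.sin(t) * (xx - cx) + np.cos(t) * (yy - cy) + cy
    sx, sy = np.rint(src_x).astype(int), np.rint(src_y).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.zeros_like(img)
    out[valid] = img[sy[valid], sx[valid]]
    return out

def estimate_rotation_translation(img1, img2, angles_deg):
    """Rotate img1 by each candidate angle and keep the strongest correlation peak."""
    best = None
    for angle in angles_deg:
        shift, height = phase_correlation(rotate_nearest(img1, angle), img2)
        if best is None or height > best[2]:
            best = (angle, shift, height)
    return best  # (angle in degrees, (dy, dx), peak height)

# Usage: rotate a random frame by 90 degrees, shift it, then search four angles.
rng = np.random.default_rng(1)
frame = rng.random((32, 32))
target = np.roll(rotate_nearest(frame, 90), shift=(3, -2), axis=(0, 1))
print(estimate_rotation_translation(frame, target, [0, 90, 180, 270]))
```

A finer grid of candidate angles improves accuracy at the cost of one FFT pair per angle. At arbitrary angles the zero-filled corners and nearest-neighbour resampling also lower the peak, so the search compares peaks against each other rather than expecting a value of 1.0.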

|

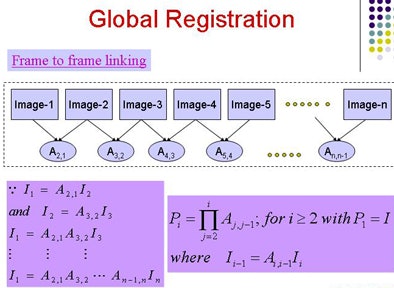

| Fourier transform-based registration includes pairwise registration and global registration. Pairwise registration (above) computes the translation parameters for various rotations and optimization, then computes the transformation matrix. Global registration (below) links the combined images into a single panorama. |

|

The next step uses global registration to line up all of the images in a dataset in a common coordinate system. A pairwise blending technique is then used to composite the sequence of frames and compute the transformation matrix.
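Placing every frame in a common coordinate system amounts to composing the pairwise matrices. Assuming each pairwise result is a 3 × 3 rigid transform that maps frame k+1 into frame k (the talk did not state a convention), the chaining can be sketched as follows; `chain_to_global` and `euclidean_matrix` are hypothetical names.

```python
import numpy as np

def euclidean_matrix(theta_deg, tx, ty):
    """3x3 homogeneous rigid transform: rotate by theta_deg, then translate by (tx, ty)."""
    t = np.deg2rad(theta_deg)
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, -s, tx], [s, c, ty], [0.0, 0.0, 1.0]])

def chain_to_global(pairwise):
    """Compose pairwise transforms into transforms relative to frame 0.

    Assumed convention: pairwise[k] maps coordinates in frame k+1 into frame k,
    so frame k+1 maps into frame 0 through global[k] @ pairwise[k].
    """
    global_tfms = [np.eye(3)]          # the first frame is the reference
    for m in pairwise:
        global_tfms.append(global_tfms[-1] @ m)
    return global_tfms

# Usage: two 100-pixel sideways steps, then a 90-degree turn with no translation.
steps = [euclidean_matrix(0, 100, 0), euclidean_matrix(0, 100, 0), euclidean_matrix(90, 0, 0)]
G = chain_to_global(steps)
point_in_frame3 = np.array([1.0, 0.0, 1.0])
print(G[3] @ point_in_frame3)  # approximately [200, 1, 1]
```

Because each global matrix is built on the previous one, an error in any single pairwise estimate carries into every later frame, so small errors accumulate along the sequence.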

A stitch in time

Finally, a frame-to-frame linking process is used to produce a globally consistent mosaic, Bashar explained. The steps are:

- Using the first image as the reference

- Computing the global transformation matrix for the current frame, following frame-to-frame alignment

- Determining the target size for alignment

- Aligning the image to its target by mapping its pixels onto the reference coordinate system

- Superimposing or blending the overlapping regions

- Repeating the process for subsequent images
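The linking steps above can be sketched in a few lines of NumPy. This assumes a global 3 × 3 transform is already known for every frame (identity for the first, reference frame), uses nearest-neighbour pixel mapping, and substitutes plain averaging for the blending step; `build_mosaic` is a hypothetical name, not code from the study.

```python
import numpy as np

def build_mosaic(frames, global_tfms):
    """Paste frames onto one canvas and average wherever they overlap.

    frames: list of 2-D grayscale arrays; global_tfms: 3x3 matrices mapping
    (x, y, 1) in each frame into the reference (first) frame's coordinates.
    """
    # Target size: bounding box of every transformed frame corner.
    corners = []
    for img, m in zip(frames, global_tfms):
        h, w = img.shape
        pts = np.array([[0, 0, 1], [w - 1, 0, 1], [0, h - 1, 1], [w - 1, h - 1, 1]], float)
        corners.append((pts @ m.T)[:, :2])
    corners = np.vstack(corners)
    x0, y0 = np.floor(corners.min(axis=0)).astype(int)
    x1, y1 = np.ceil(corners.max(axis=0)).astype(int)
    height, width = y1 - y0 + 1, x1 - x0 + 1

    total = np.zeros((height, width))
    count = np.zeros((height, width))
    yy, xx = np.mgrid[0:height, 0:width]
    canvas_pts = np.stack([xx.ravel() + x0, yy.ravel() + y0, np.ones(xx.size)])
    for img, m in zip(frames, global_tfms):
        h, w = img.shape
        # Inverse mapping: where does each canvas pixel come from in this frame?
        src = np.linalg.inv(m) @ canvas_pts
        sx = np.rint(src[0]).astype(int).reshape(height, width)
        sy = np.rint(src[1]).astype(int).reshape(height, width)
        inside = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
        total[inside] += img[sy[inside], sx[inside]]
        count[inside] += 1
    # Simple blending: average overlapping pixels; uncovered pixels stay 0.
    return np.where(count > 0, total / np.maximum(count, 1), 0.0), count

# Usage: two flat 4 x 6 frames, the second shifted 4 pixels to the right.
frames = [np.full((4, 6), 1.0), np.full((4, 6), 3.0)]
tfms = [np.eye(3), np.array([[1.0, 0, 4], [0, 1, 0], [0, 0, 1]])]
mosaic, coverage = build_mosaic(frames, tfms)
print(mosaic.shape)  # (4, 10)
```

Mapping from the canvas back into each frame, rather than pushing frame pixels forward, guarantees that every canvas pixel inside a frame's footprint receives a value, with no holes after rotation.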

"The purpose of stitching is just to overlay the images onto a bigger canvas," he said. "Redundancy of the pixels can be reduced and it looks very pleasant."

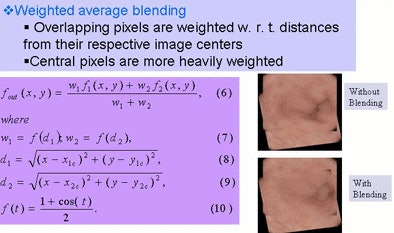

Shortcomings remain, especially at the image boundaries. "It's not smooth enough because of the registration error caused by the incompatible motion model presumption and also the diameter of the images," Bashar said.

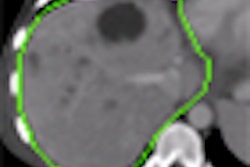

Pairwise blending is used to composite a sequence of frames and compute the transformation matrix, minimizing seams found at the edges of the coregistered images.

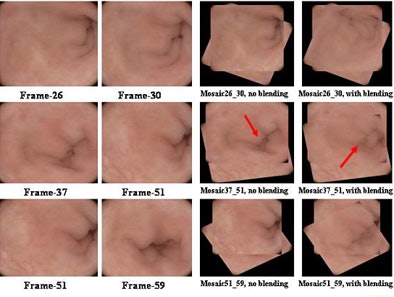

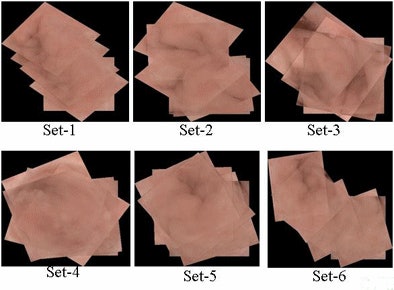

In the study, the techniques were applied to six capsule endoscopy video datasets provided by Olympus (Tokyo), each consisting of six frames. Each image measured 288 x 288 pixels and was converted to grayscale before the Fourier transform was applied.
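The article does not say how the color frames were reduced to grayscale. A common choice is a weighted sum of the red, green, and blue channels; the sketch below assumes the ITU-R BT.601 luma weights, and `to_grayscale` is a hypothetical helper.

```python
import numpy as np

def to_grayscale(rgb):
    """Convert an (H, W, 3) RGB frame to grayscale with ITU-R BT.601 luma weights.

    The weights are an assumption; the study did not state its conversion.
    """
    weights = np.array([0.299, 0.587, 0.114])
    return rgb[..., :3].astype(float) @ weights

# Usage: a 288 x 288 frame with a uniform red channel.
frame = np.zeros((288, 288, 3), dtype=np.uint8)
frame[..., 0] = 200
gray = to_grayscale(frame)
print(gray.shape, gray[0, 0])
```

Working on a single channel halves nothing about the registration logic but means each frame needs only one 2-D FFT instead of three.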

The results show that blending reduced the seams at image borders, and the experimental study demonstrated the feasibility of combining and stitching together capsule endoscopy images, Bashar said.

In summary, registration parameters assuming rigid-body motion were estimated using Fourier phase correlation and frame-to-frame linking, and a pairwise blending technique was then applied to reduce visible seams in the generated mosaic and create a flat panorama, he said.
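The article does not say which weighting the pairwise blending used. As a hedged illustration, here is linear feathering, one common choice, for two frames that overlap along a vertical band of columns; `feather_blend` is a hypothetical helper, and the purely horizontal overlap is a simplifying assumption.

```python
import numpy as np

def feather_blend(left, right, overlap):
    """Join two equal-height images whose last/first `overlap` columns coincide.

    Inside the overlap the weight shifts linearly from the left image to the
    right one, which hides the hard edge that plain pasting would leave.
    """
    h, wl = left.shape
    _, wr = right.shape
    out = np.zeros((h, wl + wr - overlap))
    out[:, :wl - overlap] = left[:, :wl - overlap]   # left-only region
    out[:, wl:] = right[:, overlap:]                 # right-only region
    alpha = np.linspace(0.0, 1.0, overlap)           # 0 = all left, 1 = all right
    out[:, wl - overlap:wl] = (1 - alpha) * left[:, wl - overlap:] + alpha * right[:, :overlap]
    return out

# Usage: a dark frame and a bright frame sharing three columns.
left = np.ones((2, 5))
right = np.full((2, 5), 3.0)
print(feather_blend(left, right, overlap=3)[0])
```

Across the shared columns the intensity ramps from 1 through 2 to 3 instead of jumping from 1 to 3, which is the kind of seam reduction the study reported.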

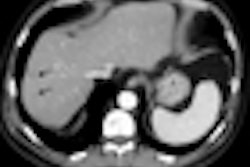

Final results with real capsule endoscopy data show images aligned and stitched before and after blending is applied (above) and image processing results for the six datasets (below).

"The results are still very preliminary, although promising," Bashar said. As for limitations, the current motion model includes only basic geometric deformations, and global registration parameters are not accurate enough. "We need to adopt a more complex motion model and see what happens," he said.

The frame-to-frame linking method also introduces some errors, Bashar said.

Next steps include integrating a nonrigid registration technique to minimize local misregistration and developing a standard deconvolution technique, Bashar said.

A more sophisticated blending method will also be explored to remove blurring effects and compensate for exposure differences, he said.

By Eric Barnes

AuntMinnie.com staff writer

August 31, 2009


Copyright © 2009 AuntMinnie.com