An artificial intelligence (AI) deep-learning algorithm performs comparably to radiologists when it comes to interpreting wrist x-rays, according to a study published online March 9 in the European Journal of Radiology.

The findings suggest that AI could offer an effective way to pinpoint wrist fractures, wrote a team led by Dr. Christian Blüthgen of the University Hospital of Zurich in Switzerland.

"The deep-learning system was able to detect and localize wrist fractures with a performance comparable to radiologists, using only a small dataset for training," the group wrote.

Wrist fractures are quite common across a range of patient ages, and x-ray is the fastest, most cost-effective imaging modality for initial assessment. Deep-learning algorithms have been shown to improve the detection of fractures in upper and lower extremities, and Blüthgen's group investigated whether they could also improve detection of fractures of the wrist.

The team trained a deep-learning system consisting of two models on 524 wrist x-rays that had been performed between April and July 2017. Of these, 166 showed fractures (31.7%). The group then tested the system using an internal test set of 100 x-rays (42 with fractures) and an external test set of 200 x-rays (100 with fractures); the external set came from MURA, a dataset of 40,561 musculoskeletal radiographs collected by Stanford University.
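
The fracture rates in the three image sets differ, which matters when comparing results across them. The following minimal sketch recomputes those rates from the counts reported above; the dictionary layout and the function name `prevalence` are illustrative choices, not anything taken from the study.

```python
# Fracture counts and set sizes as reported in the article:
# (radiographs with a fracture, total radiographs).
datasets = {
    "training": (166, 524),
    "internal test": (42, 100),
    "external test": (100, 200),
}


def prevalence(positives: int, total: int) -> float:
    """Return the fraction of radiographs that show a fracture."""
    return positives / total


for name, (positives, total) in datasets.items():
    print(f"{name}: {prevalence(positives, total):.1%}")
```

Run as written, this reproduces the 31.7% figure for the training set and shows that fractures made up 42.0% of the internal test set and 50.0% of the external one.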

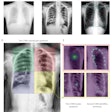

Three radiologist readers (two attendings, one resident) also interpreted the x-rays, indicating the location of any fractures by marking regions of interest. The deep-learning system used scoring between 0 ("intact") and 1 ("defect") and created heat maps to identify fractures. The investigators then assessed the performance of the deep-learning system using the area under the receiver operating characteristic curve (AUC), sensitivity, and specificity.
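
To make these three measures concrete, here is a minimal sketch of how they can be computed from scores between 0 and 1. The labels and scores are made-up toy values, not data from the study, and the 0.5 cutoff is an assumption, since the article does not state which threshold the investigators used.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(
    labels: Sequence[int], scores: Sequence[float], threshold: float = 0.5
) -> Tuple[float, float]:
    """Call a fracture when score >= threshold (assumed cutoff), then
    return (sensitivity, specificity) against the reference labels."""
    pairs = list(zip(labels, scores))
    tp = sum(1 for y, s in pairs if y == 1 and s >= threshold)
    fn = sum(1 for y, s in pairs if y == 1 and s < threshold)
    tn = sum(1 for y, s in pairs if y == 0 and s < threshold)
    fp = sum(1 for y, s in pairs if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


def auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a randomly chosen fracture image
    scores higher than a randomly chosen intact one (ties count half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg
    )
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: 1 = fracture, 0 = intact.
labels = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.92, 0.81, 0.40, 0.10, 0.35, 0.62, 0.05, 0.77]

sens, spec = sensitivity_specificity(labels, scores)
print(f"sensitivity={sens:.2f} specificity={spec:.2f} AUC={auc(labels, scores):.4f}")
```

Sensitivity and specificity depend on the chosen cutoff, whereas AUC summarizes performance across all cutoffs, which is presumably why AUC is reported only for the deep-learning system and not for the human readers, who give yes-or-no calls.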

The investigators found that the deep-learning system showed excellent performance on the internal test set, but its performance decreased on the external set, perhaps due to "overfitting," that is, the tendency of these algorithms to work better with images on which they were trained, Blüthgen told AuntMinnie.com via email. The radiologist readers performed comparably to the system on the internal set and better than it on the external set.

Performance of deep learning for wrist fractures on x-rays

| Performance measures | 3 radiologist readers | Deep-learning system (2 models) |
| --- | --- | --- |
| Internal test set | | |
| AUC | N/A | 0.95 - 0.96 |
| Sensitivity | 86% - 90% | 81% - 90% |
| Specificity | 62% - 97% | 97% - 100% |
| External test set | | |
| AUC | N/A | 0.87 - 0.89 |
| Sensitivity | 98% | 80% - 82% |
| Specificity | 76% - 94% | 78% - 86% |
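
The size of the drop between test sets can be bounded from the AUC ranges in the table. This is a rough sketch only: the article does not say which of the two models produced which end of each range, so the code reports the smallest and largest drop consistent with the published figures. The function name `drop_range` is a hypothetical helper.

```python
from typing import Tuple

# AUC ranges for the deep-learning system, copied from the table.
internal_auc = (0.95, 0.96)
external_auc = (0.87, 0.89)


def drop_range(
    internal: Tuple[float, float], external: Tuple[float, float]
) -> Tuple[float, float]:
    """Return the (smallest, largest) possible decrease in AUC when
    moving from the internal to the external test set."""
    smallest = internal[0] - external[1]
    largest = internal[1] - external[0]
    return smallest, largest


smallest, largest = drop_range(internal_auc, external_auc)
print(f"AUC decrease: {smallest:.2f} to {largest:.2f}")
```

Under these assumptions, the AUC fell by somewhere between 0.06 and 0.09 on the external images.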

The performance of the deep-learning system was comparable to that of the radiologist readers, but more work needs to be done to assess its generalizability, Blüthgen said.

"To date, a lot of data-driven medical imaging studies have not incorporated an external validation dataset when reporting the diagnostic performance of their systems," he said. "As our study shows, a high diagnostic performance on one dataset does not necessarily guarantee a good generalizability. We consider this an important finding that should be taken into account in comparable studies in the future."