In PET imaging, image quality is tied to the amount of radiotracer injected. But what if artificial intelligence (AI) could enable PET to be performed using only 1% of current dose levels, shortening exam times, decreasing radiation exposure, lowering costs, and alleviating radiotracer shortages?

Researchers from Stanford University may have taken a step toward that goal. They trained a deep-learning algorithm to process ultralow-dose PET image data and create synthetic images that approximate PET scans acquired with a standard radiotracer dose. The synthetic images had image quality comparable to that of standard full-dose exams, according to lead author Enhao Gong.

"We can push the [radiotracer] dose reduction for PET to even more than 100x (99% dose reduction) and even 200x (99.5% dose reduction) as tested on FDG-PET/MRI neuro datasets," Gong said.

He presented the research, which received a student travel stipend award, at the recent RSNA 2017 meeting in Chicago.

Addressing PET's limitations

While PET has demonstrated great clinical value in oncology and is the gold standard for imaging neurological conditions such as Alzheimer's disease, this powerful molecular imaging modality has limitations. It is time-consuming and expensive: an amyloid radiotracer for Alzheimer's disease can cost more than $2,000 a dose, and even more if paid for out of pocket, according to Gong. In addition, patients often have to wait weeks for a PET scan because of a shortage of the tracers needed for these exams.

What's more, the long scanning times involved in PET studies can lead to more motion artifacts, which blur PET images and hinder quantitative measurement and accurate delineation of lesions and tumors, he said.

"Therefore, we want to significantly enhance image quality with low-dose statistics (i.e., less use of radiotracer and/or faster acquisition), which can transform PET into a modality like low-dose CT that is faster, cheaper, safer, and more accessible to patients," Gong told AuntMinnie.com.

Training an AI model

To help achieve this goal, the Stanford researchers developed a deep-learning model, a convolutional encoder-decoder residual network, to process ultralow-dose PET images. Given a noisy low-dose PET image patch as input, the network performs patch-to-patch regression and outputs a high-quality patch that approximates the corresponding patch from a full-dose PET study. The model then assembles the full image by combining the output patches and averaging where they overlap, producing a synthetic image with image quality comparable to that of the full-dose image, according to the researchers.
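The patch-based pipeline can be sketched in a few functions. This is a minimal illustration, not the Stanford implementation: the function names, the 32-pixel patch size, and the 16-pixel stride are hypothetical, and `model` is a placeholder for the trained encoder-decoder network, which is not reproduced here.

```python
import numpy as np


def _window_starts(length, patch, stride):
    """Start offsets for a sliding window that covers [0, length) completely."""
    starts = list(range(0, length - patch + 1, stride))
    if starts[-1] != length - patch:
        starts.append(length - patch)  # add a final window so the border is covered
    return starts


def extract_patches(image, patch=32, stride=16):
    """Cut a 2D slice into overlapping square patches and record each patch's corner.

    Assumes both image dimensions are at least `patch` pixels.
    """
    patches, corners = [], []
    for r in _window_starts(image.shape[0], patch, stride):
        for c in _window_starts(image.shape[1], patch, stride):
            patches.append(image[r:r + patch, c:c + patch])
            corners.append((r, c))
    return patches, corners


def assemble_patches(patches, corners, shape):
    """Paste patches back into a slice, averaging pixels covered by several patches."""
    total = np.zeros(shape, dtype=float)
    hits = np.zeros(shape, dtype=float)
    for p, (r, c) in zip(patches, corners):
        h, w = p.shape
        total[r:r + h, c:c + w] += p
        hits[r:r + h, c:c + w] += 1.0
    return total / hits


def enhance_slice(low_dose, model, patch=32, stride=16):
    """Apply a patch-to-patch model to every patch, then merge the outputs."""
    patches, corners = extract_patches(low_dose, patch, stride)
    restored = [model(p) for p in patches]  # hypothetical trained network goes here
    return assemble_patches(restored, corners, low_dose.shape)


if __name__ == "__main__":
    # Identity "model" as a stand-in: merging identical overlaps reproduces the input.
    low = np.random.default_rng(0).random((70, 50))
    print(np.allclose(enhance_slice(low, lambda p: p), low))
```

Averaging overlapping outputs is one simple way to merge patches; because each border pixel is an average of several predictions, it tends to soften seams between neighboring patches.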

The group trained and evaluated the algorithm using brain PET/MR datasets from seven patients with recurrent glioblastoma. All patients received a full FDG dose of 10 mCi. Full-dose images reconstructed with 3D ordered-subset expectation maximization (OSEM), using two iterations and 28 subsets, served as the gold standard for the study. Low-dose PET images were created by removing counts from the list-mode data at various dose-reduction factors and then reconstructed, according to the authors.
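One way to simulate a lower-dose scan from list-mode data is to thin the recorded events, keeping each one with probability 1/R for a dose-reduction factor R. The source says only that counts were removed; the random, independent thinning below, the function name `thin_listmode`, and the use of a plain array as the event list are assumptions for illustration.

```python
import numpy as np


def thin_listmode(events, dose_reduction_factor, seed=None):
    """Simulate a reduced-dose acquisition by randomly discarding list-mode events.

    Assumption: each coincidence event is kept independently with probability
    1 / dose_reduction_factor, so a 200x reduction keeps about 0.5% of counts.
    """
    if dose_reduction_factor < 1:
        raise ValueError("dose_reduction_factor must be >= 1")
    rng = np.random.default_rng(seed)
    keep = rng.random(len(events)) < 1.0 / dose_reduction_factor
    return events[keep]  # boolean masking preserves the original event order
```

For example, thinning one million events with `thin_listmode(events, 200, seed=0)` leaves roughly 5,000 of them; the thinned list would then be passed to the reconstruction step.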

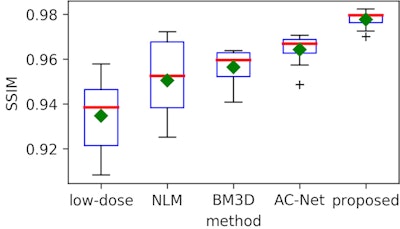

The deep-learning network was trained on a subset of slices from three of the seven patients, using approximately 10,000 patches and data augmentation consisting of two image flips and four image rotations. It was then tested on all slices from the seven patients that had not been used for training. The results were compared with the reference standard, as well as with other previously proposed low-dose PET enhancement algorithms.
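The flip-and-rotation augmentation can be sketched as below. The source does not say how the flips and rotations were combined; this sketch assumes two flip states (none and left-right) times four rotations (0, 90, 180, and 270 degrees), giving eight variants per training pair. The function name `augment_pair` is hypothetical.

```python
import numpy as np


def augment_pair(low_dose, full_dose):
    """Return 8 aligned (input, target) variants: 2 flip states x 4 rotations.

    The same geometric transform is applied to both images so the low-dose
    input and its full-dose target stay pixel-aligned for regression training.
    """
    pairs = []
    for flip in (False, True):
        lo = np.fliplr(low_dose) if flip else low_dose
        hi = np.fliplr(full_dose) if flip else full_dose
        for k in range(4):  # rotate by k * 90 degrees
            pairs.append((np.rot90(lo, k), np.rot90(hi, k)))
    return pairs
```

Applying each transform to the input and target together matters for a regression task: transforming only the input would teach the network to predict a misaligned image.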

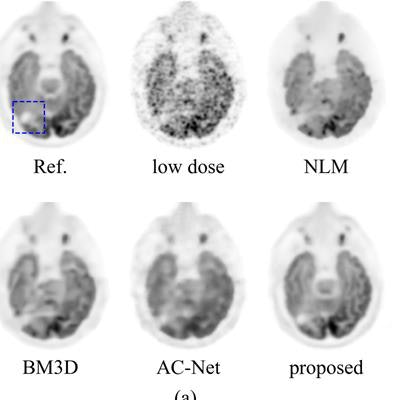

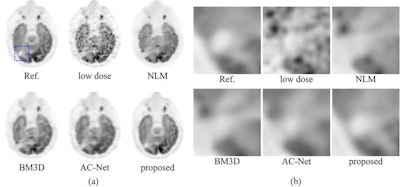

Comparison of the Stanford deep-learning method with existing low-dose PET enhancement algorithms. The full-dose reference PET image is shown on the upper left, followed by the 200x lower-dose image and the results of different image denoising/restoration methods: non-local means (NLM) denoising, block-matching and 3D filtering (BM3D) denoising, a previously proposed deep autocontext convolutional neural network (AC-Net), and the proposed Stanford model. The Stanford researchers believe that visual comparison of both the entire image (a) and the zoomed-in regions (b) demonstrates their algorithm's superior restoration of image quality. All images courtesy of Enhao Gong.

The algorithm produced image quality comparable to that of conventional studies at radiotracer dose reductions of more than 99% for PET, and as much as 99.5% for brain FDG-PET/MRI, according to the researchers.
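The dose-reduction factors quoted in this article translate into percentage reductions as follows, where $R$ is the factor by which the standard dose is divided:

$$
\text{dose reduction} = \left(1 - \frac{1}{R}\right) \times 100\%
$$

$$
R = 4 \Rightarrow 75\%, \qquad R = 5 \Rightarrow 80\%, \qquad R = 100 \Rightarrow 99\%, \qquad R = 200 \Rightarrow 99.5\%
$$

Going from 100x to 200x adds only half a percentage point of reduction, but it halves the remaining dose again, from 1% to 0.5% of the standard dose.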

"We show further improvement [in dose reduction] by exploiting multimodality information from simultaneous PET/MRI acquisition and using the advanced deep-learning models," he said.

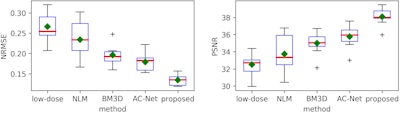

A quantitatively superior method

After performing quantitative image analysis, the researchers determined that, compared with the original ultralow-dose images, their method reduced root-mean-square error (RMSE) by more than 50%, gained 7 dB in peak signal-to-noise ratio (PSNR), and increased the structural similarity (SSIM) index, with all metrics measured against the reference full-dose image.
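RMSE and PSNR can be computed as in the minimal sketch below. The function names are hypothetical, and the sketch assumes PSNR uses the reference image's maximum as the peak value, which the source does not specify. With the peak held fixed, the PSNR gain equals $20\log_{10}(\text{RMSE}_\text{before}/\text{RMSE}_\text{after})$: halving RMSE adds about 6.02 dB, and a 7 dB gain corresponds to RMSE falling to roughly 45% of its original value, consistent with a reduction of more than 50%.

```python
import numpy as np


def rmse(image, reference):
    """Root-mean-square error between an image and the full-dose reference."""
    diff = np.asarray(image, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def psnr(image, reference, peak=None):
    """Peak signal-to-noise ratio in dB; peak defaults to the reference maximum (assumption)."""
    if peak is None:
        peak = float(np.max(reference))
    return 20.0 * np.log10(peak / rmse(image, reference))


def psnr_gain_from_rmse(rmse_before, rmse_after):
    """PSNR improvement in dB implied by an RMSE change, assuming a fixed peak."""
    return 20.0 * np.log10(rmse_before / rmse_after)
```

SSIM is omitted here because it is computed over local windows; established implementations exist in common image-processing libraries.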

What's more, the algorithm achieved a contrast-to-noise ratio (CNR), an image quality measure, similar to that of the reference full-dose image, the researchers said.
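CNR has several definitions in the literature. One common form divides the difference between the mean signal in a region of interest (ROI) and the mean background signal by the background standard deviation. The sketch below uses that form as an assumption, since the source does not say which definition was used; the function name and masks are hypothetical.

```python
import numpy as np


def cnr(image, roi_mask, background_mask):
    """Contrast-to-noise ratio: |mean(ROI) - mean(background)| / std(background).

    Uses the population standard deviation (NumPy's default, ddof=0).
    """
    roi = image[roi_mask]
    background = image[background_mask]
    return float(abs(roi.mean() - background.mean()) / background.std())
```

Comparing this value between an enhanced image and the full-dose reference, using the same ROI and background masks, is one way to check whether lesion contrast survives the denoising.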

Gong acknowledged that existing algorithms can denoise images and remove artifacts to improve medical image quality. However, these earlier methods have been shown to reduce dose by only about 75% at most, he said. Furthermore, most of them rely on iterative reconstruction and are time-consuming and difficult to tune.

In contrast, the researchers' deep-learning method is non-iterative and takes less than 100 msec per image, Gong said.

He said their method also works on whole-body PET/CT exams, although more studies are needed to determine how much radiotracer dose reduction can be achieved. So far, they have explored an 80% dose reduction.

The algorithm is currently being evaluated in clinical studies in support of an application for U.S. Food and Drug Administration approval, he said. Gong and colleague Dr. Greg Zaharchuk from Stanford Radiology are licensing the technology from Stanford to commercialize it with an AI startup called Subtle Medical.

Future work

The researchers have also developed a deep-learning algorithm that can significantly improve image quality and reconstruction for MRI and other imaging modalities, Gong said. This approach uses a state-of-the-art network architecture and a generative adversarial network (GAN), a deep-learning approach that has been used to produce fake photographs, he said.
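The adversarial idea behind a GAN is usually written as a minimax objective. In the standard formulation, a generator $G$ (here, an enhancement network mapping an input image $x$ to an enhanced image) competes with a discriminator $D$ that tries to distinguish generated images from reference images $y$. Image-enhancement work commonly adds a pixel-wise fidelity term weighted by $\lambda$ to keep the output close to the reference; that extra term, the $\ell_1$ norm, and $\lambda$ are assumptions here, since the source does not give the loss the Stanford group used.

$$
\min_G \max_D \; \mathbb{E}_{y}\big[\log D(y)\big] + \mathbb{E}_{x}\big[\log\big(1 - D(G(x))\big)\big] + \lambda\, \mathbb{E}_{x,y}\big[\lVert y - G(x) \rVert_1\big]
$$

The adversarial terms push $G(x)$ toward images that look realistic, while the fidelity term penalizes departures from the reference, which is one way to enhance an image without inventing structure that is not there.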

"Instead of generating realistic faces [for fake photos], we used the idea and developed a deep-learning algorithm to intelligently enhance the image while preserving realistic and important structural/functional information," Gong said. "The deep-learning algorithm is based on a multiscale convolutional neural network that predicts the enhanced images -- instead of just a label -- from input images."