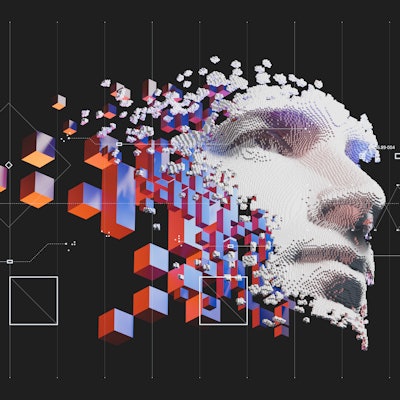

Artificial intelligence (AI) models can recognize a patient's racial identity on medical images, even though radiologists can't, according to a new study that could have significant implications for how AI is trained and adopted in radiology.

In a preprint paper published July 21 on arXiv.org, a group of researchers assembled by Dr. Judy Gichoya of Emory University in Atlanta shared results from dozens of experiments performed at multiple institutions around the world that found extremely high accuracies -- up to an area under the curve (AUC) of 0.99 -- for deep-learning models in recognizing a patient's self-described racial identity from radiology images.

The models demonstrated consistently high performance across multiple imaging modalities and anatomical locations -- including chest x-rays, mammography, limb radiographs, cervical spine radiographs, and chest CT data -- on a variety of different patient populations and even on very low-quality images. But the researchers were unable to ascertain how the algorithms learn to predict racial identity.

Although the study has not yet been peer-reviewed, its findings are troubling. AI's ability to predict self-reported race isn't in itself the most important issue, according to the researchers.

"However, our findings that AI can trivially predict self-reported race -- even from corrupted, cropped, and noised medical images -- in a setting where clinical experts cannot, creates an enormous risk for all model deployments in medical imaging: If an AI model secretly used its knowledge of self-reported race to misclassify all Black patients, radiologists would not be able to tell using the same data the model has access to," the authors wrote.

As this capability was easily learned, it's also likely present in many image analysis models, "providing a direct vector for the reproduction or exacerbation of the racial disparities that already exist in medical practice," the researchers said.

Extensive training, validation

To assess the capability of deep-learning algorithms to discover race from radiology images, the researchers first developed convolutional neural networks (CNNs) using three large chest x-ray datasets (MIMIC-CXR, CheXpert, and Emory-CXR) with external validation. They also trained detection models for non-chest-x-ray images from multiple body locations to find out if the model performance was limited to chest x-rays. In addition, the authors investigated whether deep-learning models could learn to identify racial identity when trained to perform other tasks, such as pathology detection and patient reidentification.

Each of the datasets included Black/African American and white labels; some datasets also included Asian labels. Hispanic/Latino labels were only available in some datasets and were coded heterogeneously; as a result, patients with these labels were excluded from the analysis.

Highly accurate predictions

In internal validation, the deep-learning algorithms for chest x-rays produced AUCs ranging from 0.95 to 0.99. They also achieved AUCs ranging from 0.96 to 0.98 in external validation.

Algorithms developed to detect race on other modalities also performed well, yielding AUCs of, for example, 0.87 on external validation on chest CT studies, 0.91 for limb x-rays, 0.84 for mammography, and 0.92 for cervical spine x-rays.
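For readers less familiar with the metric, an AUC can be read as the probability that a model gives a randomly chosen case from one class a higher score than a randomly chosen case from the other; 0.5 is chance and 1.0 is perfect separation. The following is a minimal two-class sketch of that definition. The function name `auc` and the scores are hypothetical illustrations, not data or code from the study.

```python
def auc(scores_pos, scores_neg):
    """Probability that a random positive case outscores a random negative one.

    Ties count as half a win. This is the pairwise (Mann-Whitney) form of AUC.
    """
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical model scores for two groups (NOT from the study).
group_a = [0.92, 0.85, 0.77, 0.60]
group_b = [0.10, 0.35, 0.65, 0.20]

print(auc(group_a, group_b))  # 15 of 16 pairs are ranked correctly -> 0.9375
```

On this reading, an AUC of 0.99 means the model ranks the pair correctly about 99 times out of 100.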

"Every radiologist I have told about these results is absolutely flabbergasted, because despite all of our expertise, none of us would have believed in a million years that x-rays and CT scans contain such strong information about racial identity," according to a blog post by co-author Dr. Luke Oakden-Rayner of the University of Adelaide in Australia.

In an effort to find the underlying mechanisms or image features utilized by the algorithms in their racial identity predictions, the researchers investigated several hypotheses, including differences in physical characteristics, disease distribution, location-specific or tissue-specific phenotype or anatomic differences, or cumulative effects of societal bias and environmental stress. However, all of these confounders had very low predictive performance on their own.

Surprising results

The study results are both surprising and very bad for efforts to ensure patient safety, equity, and generalizability of radiology AI algorithms, Oakden-Rayner said.

"The features the AI makes use of appear to occur across the entire image spectrum and are not regionally localized, which will severely limit our ability to stop AI systems from doing this," he wrote.

Incredibly, the model was able to predict racial identity on an image that had the low-frequency information removed to the point that a human couldn't even tell the image was still an x-ray, he said.
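To give a sense of what removing low-frequency information means, here is a rough sketch of one simple high-pass filter: subtract each pixel's local average so that only fine detail remains. The article does not describe how the researchers filtered their images, so this is a stand-in technique; the function `high_pass`, the `radius` parameter, and the tiny example image are all hypothetical.

```python
def high_pass(image, radius=1):
    """Subtract a box-blur (local mean) from each pixel of a 2D list.

    Smooth, slowly varying brightness (low frequencies) is removed;
    sharp local changes (high frequencies) are kept.
    """
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            total, count = 0.0, 0
            # Average over the neighborhood, ignoring positions outside the image.
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += image[yy][xx]
                        count += 1
            row.append(image[y][x] - total / count)
        out.append(row)
    return out


# A uniformly gray image has no fine detail, so nothing survives the filter.
flat = [[5.0] * 4 for _ in range(4)]
# A single bright pixel is fine detail, so it survives.
spike = [[0.0, 0.0, 0.0], [0.0, 9.0, 0.0], [0.0, 0.0, 0.0]]

print(high_pass(flat)[0][0])   # 0.0
print(high_pass(spike)[1][1])  # 8.0
```

Applied aggressively to a radiograph, a filter of this kind strips out the broad shapes a human relies on to recognize anatomy, which is why the filtered images no longer look like x-rays.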

In other findings, the experiments revealed that although the distribution of diseases in several datasets was essentially non-predictive of racial identity, models trained for other tasks, such as disease detection, learn to identify patient race almost as well as models optimized for that purpose, according to Oakden-Rayner. AI seems to easily learn racial identity information from medical images -- even when the task seems unrelated, he noted.

"We can't isolate how it does this, and we humans can't recognize when AI is doing it unless we collect demographic information (which is rarely readily available to clinical radiologists)," he wrote.

That's bad, but even worse is that many algorithms on the market for chest x-ray and chest CT images were trained using the same datasets used in this study, he said.

"Our findings indicate that future medical imaging AI work should emphasize explicit model performance audits based on racial identity, sex, and age, and that medical imaging datasets should include the self-reported race of patients where possible to allow for further investigation and research into the human-hidden but model-decipherable information that these images appear to contain related to racial identity," the authors wrote.
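In practice, a performance audit of the kind the authors recommend could start by breaking a model's results down by demographic group instead of reporting a single overall figure. The sketch below computes sensitivity (the share of true disease cases the model catches) for each group. The function name, the record layout, and the data are hypothetical and only illustrate the idea; they are not the authors' audit procedure.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return {group: sensitivity} from (group, truth, prediction) records.

    truth and prediction are 1 for disease present, 0 for absent.
    Groups with no positive cases are omitted.
    """
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, truth, pred in records:
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}


# Hypothetical audit records (NOT from the study): (group, truth, prediction).
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 1), ("B", 1, 0), ("B", 0, 0),
]

print(sensitivity_by_group(records))  # {'A': 0.75, 'B': 0.5}
```

A gap like the one in this toy example would be invisible in a pooled sensitivity figure, which is the kind of hidden disparity such audits are meant to surface. The same breakdown could be repeated for sex and age, provided the dataset records them.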

The paper has been submitted to a medical journal but has not yet been peer-reviewed. In the meantime, the researchers said they are hoping for additional feedback on improving the study.