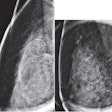

Will artificial intelligence (AI) lead radiologists to begin overcalling potential abnormalities during image interpretation? Not necessarily, if a recent study that assessed the impact of computer-aided detection (CAD) software on patient recall rates in screening mammography is any indication.

Researchers from the Medical College of Wisconsin retrospectively reviewed three years of screening mammogram interpretations by four radiologists who read at two sites: a community practice that used CAD and a hospital that did not. The aggregate recall rate for the four radiologists was slightly higher at the site with CAD, but the difference did not reach statistical significance.

"These data suggest that CAD technology did not contribute to an elevated recall [rate] through the power of suggestion," co-authors Dr. Colin Hansen and Dr. Melissa Wein wrote in their educational exhibit at the recent American Roentgen Ray Society (ARRS) annual meeting in Washington, DC.

Impact on behavior

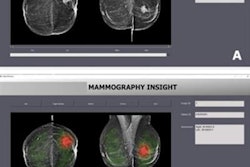

AI applications are becoming increasingly common in radiologists' professional lives, and this includes CAD software that supplements the interpretation of screening mammograms, according to the authors.

"The best AI technologies assist radiologists in decision-making by providing objective data to arrive at more accurate and timely diagnoses," they wrote.

Once a radiology practice decides to adopt AI technology, however, it can be difficult to assess whether the technology is positively or negatively affecting radiologist behavior. To gain some insight into how AI can influence radiologist decision-making, the researchers sought to determine whether the same radiologists would have different recall rates depending on whether they used mammography CAD software. The research opportunity arose after their group began covering an outside community mammography practice in 2013 that used CAD software (iCAD); their hospital practice did not use CAD at the time, Wein said.

"It seemed like an easy measure to take a look at in our practice to see if CAD might be increasing our recall rates," she told AuntMinnie.com. "If that were the case, a discussion of how we were using or being influenced by the CAD software would have been warranted, as unnecessary recalls can ultimately cause harm to our patients."

The researchers retrospectively acquired data from a Mammography Quality Standards Act (MQSA) database over a three-year period for a group of four radiologists who read screening mammograms at both locations. They then calculated the total number of screening mammograms acquired at each site, as well as the number of screening studies given a BI-RADS category 0 result, indicating the patient would be recalled for further evaluation.

"I thought that perhaps the 'power of suggestion' with CAD might result in increased callback rates at the community site -- especially since we had previously not been using CAD at our hospital site," Wein said. "So the software was a new addition for all of us reading at both sites."

No effect on callbacks

The aggregate recall rate was slightly higher at the practice using CAD than in the non-CAD location, but this difference was not statistically significant, according to the researchers:

Overall patient recall rate by use of CAD

| | CAD site | Non-CAD site |
|---|---|---|
| Screening mammograms | 4,874 | 7,946 |
| BI-RADS category 0 | 545 | 876 |
| Recall rate | 11.8% | 11% |
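The recall rate in the table is the number of BI-RADS category 0 exams divided by the number of screening mammograms. The article does not say which statistical test the researchers used, so the sketch below assumes a pooled two-proportion z-test, a common way to compare two rates, and applies it to the table's raw counts. Computed from those counts, 545/4,874 comes to about 11.2% rather than the 11.8% listed for the CAD site; the sketch works only from the counts. The helper names `recall_rate` and `two_proportion_z_test` are illustrative, not from the study.

```python
import math


def recall_rate(recalled: int, screened: int) -> float:
    """Fraction of screening exams given a BI-RADS category 0 (recall) result."""
    return recalled / screened


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test (an assumed choice of test).

    Returns the z statistic and the two-sided p-value.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # recall rate if both sites shared one rate
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value for a standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Counts from the table: (BI-RADS category 0 results, screening mammograms)
cad_recalls, cad_exams = 545, 4874
non_cad_recalls, non_cad_exams = 876, 7946

cad_rate = recall_rate(cad_recalls, cad_exams)
non_cad_rate = recall_rate(non_cad_recalls, non_cad_exams)
z, p = two_proportion_z_test(cad_recalls, cad_exams, non_cad_recalls, non_cad_exams)

print(f"CAD site recall rate:     {cad_rate:.1%}")
print(f"Non-CAD site recall rate: {non_cad_rate:.1%}")
print(f"z = {z:.2f}, two-sided p = {p:.2f}")
```

Under this assumed test, z is small (roughly 0.28) and the two-sided p-value is about 0.78, well above the conventional 0.05 threshold, which is consistent with the researchers' finding that the difference was not statistically significant.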

"Interestingly, one [radiologist] had nearly identical recall rates at both sites, while one had higher recall rates at the CAD site, and the other had higher recall rates at the non-CAD site," Wein said. "The [radiologist] who read the fewest CAD cases at the new CAD site during this period had the highest recall rate using CAD, so perhaps this suggests there is an initial learning curve when using CAD software. Or that different [radiologists] will respond differently to CAD and its suggestions."

AI should assist radiologists in identifying potential pathology while not causing them to overcall potential abnormalities, Wein noted.

"CAD should not add an inordinate amount of additional time to or confusion about our interpretations, and any software that results in significant additional unneeded work should be re-evaluated, fine-tuned, or scrapped entirely," she said.

Future research projects could include evaluating historical data to assess whether the use of CAD assists the group in detecting earlier-stage cancers, according to the researchers.

"We have now adopted CAD at our hospital site as well, and evaluation of a larger pool of MQSA [data] will provide interesting insight regarding if CAD adds value to our interpretations," Wein said. "Additionally, we could look at false-negative rates now that we have adopted CAD across our practice; is it possible that [radiologists] might become overdependent on CAD and miss potential pathology if it is not picked up by CAD? Finding the best ways to design and modify artificial intelligence to optimize radiologists' effectiveness is the ultimate goal."