Surgeons can reliably use Microsoft's Kinect motion-sensing gaming device to manipulate MR images on operating room (OR) workstations, according to research from the Purdue University Department of Industrial Engineering.

A team led by doctoral student Mithun George Jacob concluded that gesture interaction and surgeon behavior analysis can facilitate accurate navigation and manipulation of MR images, opening the door to replacing traditional keyboard- and mouse-based interfaces.

"The main finding is that it is possible to accurately recognize the user's (or a surgeon's in an OR) intention to perform a gesture by observing environmental cues (context) with high accuracy," the authors wrote.

Imaging devices are accessible in the OR today, but they use traditional interfaces that can compromise sterility. While nurses or assistants can operate the keyboard on the surgeon's behalf, that approach can be cumbersome, inefficient, and frustrating, according to the researchers (Journal of the American Medical Informatics Association, December 18, 2012).

"This is a problem when the user (surgeon) is already performing a cognitively demanding task," they wrote.

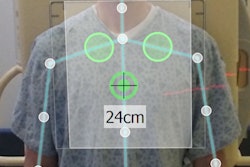

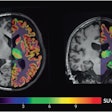

In a three-step project, the researchers first developed an MR image browser application and conducted an ethnographic study with 10 surgeons from Purdue's College of Veterinary Medicine to gather a set of gestures that would be natural for clinicians and surgeons. Ten gestures were ultimately selected for use by the browser.

Next, the team developed gesture recognition software that used the OpenNI software library to track skeletal joints using Kinect. System evaluation was then performed via three experiments.

In the first experiment, the researchers collected a dataset of 4,750 observations from two users. Gesture recognition was evaluated in a second experiment using a dataset of 1,000 gestures performed by 10 users.

The final experiment involved users performing a specific browsing and manipulation task using hand gestures with the image browser.

In the first experiment, the researchers found that their system was accurate in recognizing the gestures, with a mean recognition accuracy of 97.9% and a false-positive rate of 1.36%.
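For readers unfamiliar with the two metrics, the following is a minimal sketch of how recognition accuracy and false-positive rate might be computed from labeled trials. The gesture names, the `none` label, and both function names are hypothetical, and the definitions used here (accuracy over intended gestures, false positives over non-gesture trials) are one common convention; the study's own evaluation may define them differently.

```python
from typing import Sequence

# Hypothetical label meaning "the user did not intend any gesture".
NO_GESTURE = "none"


def recognition_accuracy(true_labels: Sequence[str],
                         predicted_labels: Sequence[str]) -> float:
    """Fraction of intended gestures that were assigned the correct label."""
    pairs = [(t, p) for t, p in zip(true_labels, predicted_labels)
             if t != NO_GESTURE]
    if not pairs:
        raise ValueError("no gesture trials to score")
    return sum(t == p for t, p in pairs) / len(pairs)


def false_positive_rate(true_labels: Sequence[str],
                        predicted_labels: Sequence[str]) -> float:
    """Fraction of non-gesture trials in which some gesture was reported."""
    pairs = [(t, p) for t, p in zip(true_labels, predicted_labels)
             if t == NO_GESTURE]
    if not pairs:
        raise ValueError("no non-gesture trials to score")
    return sum(p != NO_GESTURE for _, p in pairs) / len(pairs)


# Illustrative data only; these are not observations from the study.
true_labels = ["zoom_in", "rotate", "none", "none",
               "zoom_in", "none", "rotate", "none"]
predicted = ["zoom_in", "rotate", "none", "zoom_in",
             "rotate", "none", "rotate", "none"]

accuracy = recognition_accuracy(true_labels, predicted)
fpr = false_positive_rate(true_labels, predicted)
print(f"accuracy={accuracy:.2%}, false-positive rate={fpr:.2%}")
```

Reporting the two numbers separately matters in an OR setting: a system can label deliberate gestures well and still be disruptive if it frequently reacts to movements that were never meant as commands.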

The second experiment showed a gesture recognition accuracy of 97.2%, while the third experiment showed that the system could correctly determine user intent 98.7% of the time. Mean gesture recognition accuracy was 92.6% with context and 93.6% without context.
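The idea of using context to decide whether a movement is an intended command can be illustrated with a small sketch. Everything here is an assumption made for illustration: the cue names, the integer weights, the threshold, and the function names are hypothetical and do not represent the cues or the model the Purdue team used.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ContextCues:
    """Hypothetical environmental cues observed alongside a hand movement."""
    facing_display: bool    # user is oriented toward the image display
    hand_raised: bool       # hand is lifted into an assumed interaction zone
    talking_to_staff: bool  # user appears to be addressing someone else


def intends_gesture(cues: ContextCues, threshold: int = 6) -> bool:
    """Score the cues with made-up integer weights and compare to a threshold."""
    score = 0
    if cues.facing_display:
        score += 5
    if cues.hand_raised:
        score += 4
    if cues.talking_to_staff:
        score -= 4
    return score >= threshold


def handle_frame(cues: ContextCues,
                 recognized_gesture: str) -> Optional[str]:
    """Return the gesture to execute, or None if the movement is ignored."""
    if not intends_gesture(cues):
        return None
    return recognized_gesture


# A deliberate command: facing the display with a raised hand.
print(handle_frame(ContextCues(True, True, False), "rotate"))
# The same motion while talking to staff is treated as unintentional.
print(handle_frame(ContextCues(True, True, True), "rotate"))
```

Gating the recognizer this way separates two questions, whether the user meant to issue a command and which command it was, which is why the article reports intent determination and gesture recognition as distinct results.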

It's imperative to collect training data from more users, the authors noted, as people perform gestures differently and exhibit intent to use the system in different ways.