Deep-learning algorithms based on grayscale and color Doppler ultrasound images may predict ovarian malignancies with performance comparable to human experts, according to Chinese research published April 12 in Radiology.

A team led by Dr. Hui Chen and Bo-Wen Yang from Shanghai Jiao Tong University also found that its algorithms could differentiate between malignant and benign tumors with high sensitivity and specificity.

"Our results suggest that targeted deep-learning algorithms could assist practitioners of ultrasound, particularly those with less experience, to achieve a performance comparable to experts," Chen and colleagues wrote.

Ovarian cancer is the second most common cause of cancer-related death worldwide among women. Because the disease has a five-year survival rate of less than 45%, early and accurate detection of ovarian tumors is important for guiding subsequent treatment.

Ultrasound is typically the first-line imaging method for these tumors, and the researchers highlighted its convenience and low cost compared with other imaging modalities. To help classify such tumors, the American College of Radiology developed the Ovarian-Adnexal Reporting and Data System (O-RADS), a set of guidelines that helps experts with risk stratification and management.

In recent years, radiologists have explored deep-learning algorithms for their potential to learn different tumor features. However, data on ovarian tumors are limited, and existing studies have mainly been based on single-modality ultrasound images, even though ovarian cancer imaging typically uses multiple types of ultrasound images, including grayscale, color Doppler, and power Doppler images.

Chen et al developed two deep-learning algorithms to automatically classify ovarian tumors as benign or malignant using grayscale and color Doppler ultrasound images. The group also set out to compare the performance of its algorithms with that of O-RADS and subjective expert assessment.

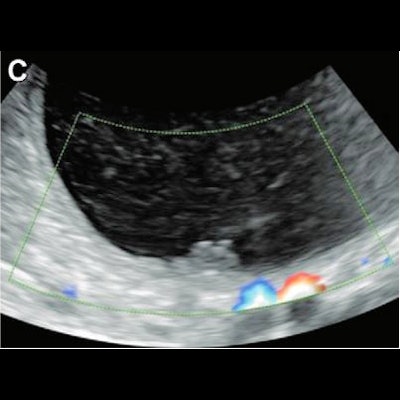

Ultrasound images in a 36-year-old woman show a unilocular cyst with a solid component in the right ovary, diagnosed pathologically as a serous cystadenofibroma. (A) Grayscale ultrasound shows the maximal size plane of the mass. (B) Grayscale ultrasound shows the maximal size of the solid component. (C) Color Doppler ultrasound shows the solid component (color score 1). The lesion was misdiagnosed using the Ovarian-Adnexal Reporting and Data System (O-RADS), while both deep-learning models and the expert assessment classified it correctly. Images courtesy of the RSNA.

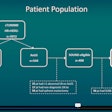

The team looked at data from 422 women with an average age of 46.4 years who underwent grayscale and color Doppler ultrasound between January and November 2019. A total of 304 benign and 118 malignant tumors were included. The women were divided into a training and validation data set (337 women) and a test data set (85 women).

The two algorithms were a "decision fusion" model and a "feature fusion" model. Both used ultrasound features based on the standardized terms, definitions, and measurements of the International Ovarian Tumor Analysis (IOTA) group.
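
The study's exact architectures are not described here, so the following is only a rough sketch of the two general fusion strategies, not the authors' models. Decision fusion trains a separate classifier per image type and combines their final malignancy probabilities (simple averaging is assumed here); feature fusion concatenates the features from all image types and scores them once with a single classifier. The functions `decision_fusion` and `feature_fusion`, the linear classifier heads, and all feature values and weights are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Map a raw score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def linear_prob(features: list[float], weights: list[float], bias: float) -> float:
    """Hypothetical linear classifier head returning P(malignant)."""
    if len(features) != len(weights):
        raise ValueError("features and weights must have the same length")
    return sigmoid(sum(f * w for f, w in zip(features, weights)) + bias)


def decision_fusion(per_image_features, per_image_heads):
    """Decision fusion (assumed rule: averaging): each image type has its
    own classifier, and only their output probabilities are combined."""
    probs = [
        linear_prob(feats, weights, bias)
        for feats, (weights, bias) in zip(per_image_features, per_image_heads)
    ]
    return sum(probs) / len(probs)


def feature_fusion(per_image_features, fused_weights, fused_bias):
    """Feature fusion: features from all image types are concatenated
    into one vector and scored by a single classifier."""
    fused = [f for feats in per_image_features for f in feats]
    return linear_prob(fused, fused_weights, fused_bias)


# Hypothetical 2-value feature vectors for three image types of one lesion:
# whole-lesion grayscale, solid-component grayscale, color Doppler.
features = [[0.8, 0.1], [0.6, 0.4], [0.2, 0.9]]
heads = [([1.0, -0.5], 0.0), ([0.5, 0.5], -0.2), ([0.3, 1.2], -0.5)]

p_decision = decision_fusion(features, heads)
p_feature = feature_fusion(features, [1.0, -0.5, 0.5, 0.5, 0.3, 1.2], -0.7)
print(f"decision fusion: {p_decision:.3f}, feature fusion: {p_feature:.3f}")
```

The practical difference is where the image types meet: in decision fusion each classifier sees only one image type, whereas in feature fusion a single classifier learns its weights jointly across all of them.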

Performance of deep-learning models using ultrasound compared with O-RADS and experts

| Metric | O-RADS | Experts | "Decision" AI model | "Feature" AI model |
|---|---|---|---|---|
| Area under the curve | 0.92 | 0.97 | 0.90 | 0.93 |
| Sensitivity | 92% | 96% | 92% | 92% |
| Specificity | 89% | 87% | 80% | 85% |
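
For readers less familiar with these metrics: sensitivity is the share of malignant tumors correctly flagged, specificity is the share of benign tumors correctly cleared, and the area under the ROC curve (AUC) equals the probability that a randomly chosen malignant case receives a higher score than a randomly chosen benign case, with ties counting as one half. The minimal sketch below computes all three from labels and model scores; the labels, scores, and the 0.5 decision threshold are invented for illustration and are not data from the study.

```python
def sensitivity_specificity(labels, scores, threshold=0.5):
    """labels: 1 = malignant, 0 = benign. A score >= threshold is called malignant."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one malignant and one benign case")
    return tp / (tp + fn), tn / (tn + fp)


def auc(labels, scores):
    """AUC as the fraction of (malignant, benign) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one malignant and one benign case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Invented example: 4 malignant and 6 benign lesions.
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.95, 0.80, 0.62, 0.40, 0.55, 0.30, 0.20, 0.15, 0.10, 0.05]
sens, spec = sensitivity_specificity(labels, scores)
print(f"sensitivity={sens:.2f} specificity={spec:.2f} AUC={auc(labels, scores):.2f}")
```

Sensitivity and specificity depend on the chosen threshold, while AUC summarizes ranking performance across all thresholds. This is why two methods can share the same sensitivity, as the O-RADS and "Decision" model columns do, yet differ in AUC and specificity.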

Previous studies suggest that certain grayscale and color Doppler features are the most important predictors of a malignant ovarian mass. Models used in previous studies, however, were usually trained on a single image category, such as grayscale ultrasound images of an entire lesion.

The Chen team emphasized that their deep-learning "feature" model, which combined grayscale images of the whole lesion, grayscale images of the solid component, and color Doppler images, had a higher area under the curve than models trained on each image type individually.

The study authors wrote that their models could be further developed to assess lesions found within a screening population. However, they called for multicenter assessments to further develop and validate the models, as well as large prospective studies and "a more reliable method" to better combine image features with nonvisible information.