An artificial intelligence (AI) algorithm can identify and characterize suspicious bone lesions on whole-body bone scintigraphy exams in cancer patients, according to research published online September 4 in BMC Medical Imaging.

A team of researchers led by Yemei Liu of Sichuan University in Chengdu, China, trained and tested a deep-learning model using nearly 15,000 bone lesions on scintigraphy exams from more than 3,000 patients. In testing, their convolutional neural network (CNN) yielded an average accuracy of 81.2% for differentiating benign from malignant bone lesions.

"Even though the AI model is not always correct, it could serve as an effective auxiliary tool for diagnosis and guidance in patients with suspicious bone metastatic lesions in daily clinical practice," the authors wrote.

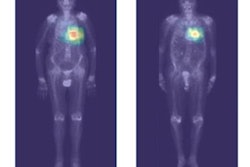

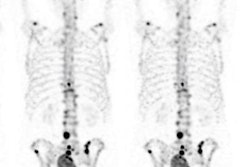

Despite the emergence of SPECT/CT, whole-body bone scintigraphy remains a standard method for evaluating the presence and extent of bone metastasis. However, the modality relies on reader experience for accurate interpretation and suffers from unsatisfactory interobserver diagnostic agreement, according to the researchers.

As a result, they sought to develop a deep-learning algorithm to detect and characterize suspicious bone lesions in cancer patients. The researchers gathered a dataset of technetium-99m methylene diphosphonate (MDP) whole-body bone scintigraphy exams from 3,352 cancer patients with a total of 14,972 bone lesions. These lesions were annotated manually by physicians as either benign or malignant.

The investigators then trained and tested a 2D CNN using five-fold cross-validation and also compared its performance with three other CNN architectures. Overall, the algorithm achieved moderate results for differentiating benign from malignant lesions.
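For readers unfamiliar with five-fold cross-validation, the following is a minimal sketch of how a set of lesions could be divided into five folds, with each fold serving once as the test set. It is an illustration only: the function name `k_fold_splits`, the random seed, and the choice to split at the lesion level are assumptions, as the article does not say whether the researchers split by lesion or by patient.

```python
import random


def k_fold_splits(n_items, k=5, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation.

    Hypothetical helper for illustration; not the study's actual code.
    """
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)

    # Spread items as evenly as possible: the first (n_items % k) folds
    # receive one extra item each.
    base, extra = divmod(n_items, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        start += size
        yield train_idx, test_idx


# 14,972 is the lesion count reported in the article.
for fold_number, (train_idx, test_idx) in enumerate(k_fold_splits(14972), start=1):
    print(f"fold {fold_number}: train={len(train_idx)} test={len(test_idx)}")
```

In a setup like this, a model would be trained five times, and the reported figures would typically be averages across the five test folds.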

| AI performance for differentiating bone lesions on whole-body bone scintigraphy | |
| --- | --- |
| Sensitivity | 81.3% |
| Specificity | 81.1% |
| Accuracy | 81.2% |

Those results were higher than those of the three other CNNs tested in the study: VGG16 (81.1%), InceptionV3 (80.6%), and DenseNet169 (76.7%).
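Sensitivity, specificity, and accuracy are all derived from the same four counts in a confusion matrix. The sketch below shows the standard definitions, treating "malignant" as the positive class. The function name `binary_metrics` and the counts passed to it are made up for illustration and are not taken from the study.

```python
def binary_metrics(tp, fp, tn, fn):
    """Compute sensitivity, specificity, and accuracy from confusion-matrix counts.

    tp: malignant lesions correctly called malignant
    fn: malignant lesions incorrectly called benign
    tn: benign lesions correctly called benign
    fp: benign lesions incorrectly called malignant
    """
    return {
        "sensitivity": tp / (tp + fn),                 # share of malignant lesions caught
        "specificity": tn / (tn + fp),                 # share of benign lesions cleared
        "accuracy": (tp + tn) / (tp + fp + tn + fn),   # share of all lesions classified correctly
    }


# Hypothetical counts, chosen only to make the arithmetic easy to follow.
example = binary_metrics(tp=80, fp=10, tn=90, fn=20)
for name, value in example.items():
    print(f"{name}: {value:.1%}")
```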

Delving further into the results, the researchers found that the model yielded an area under the curve (AUC) of 0.847 in the subgroup of patients with three or fewer lesions, 0.838 in the subgroup with four to six lesions, and 0.862 in the subgroup with more than six lesions.

By type of cancer, the algorithm produced an AUC of 0.87 for lesions in lung cancer patients, 0.899 for breast cancer patients, and 0.900 for prostate cancer patients.
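A subgroup analysis of this kind amounts to computing the AUC separately within each group of lesions. The sketch below illustrates the idea using the rank-based definition of AUC: the probability that a randomly chosen malignant lesion receives a higher score than a randomly chosen benign one, with ties counted as half. The helper names, the record layout, and the toy scores are all assumptions for illustration; none of the numbers come from the study.

```python
from collections import defaultdict


def roc_auc(labels, scores):
    """AUC as the fraction of (malignant, benign) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def lesion_count_bin(n_lesions):
    """Map a patient's lesion count to the three subgroups described in the article."""
    if n_lesions <= 3:
        return "<=3"
    if n_lesions <= 6:
        return "4-6"
    return ">6"


def auc_by_group(records, group_of):
    """records: iterable of (group_value, label, score); returns {group: AUC}."""
    grouped = defaultdict(lambda: ([], []))
    for value, label, score in records:
        labels, scores = grouped[group_of(value)]
        labels.append(label)
        scores.append(score)
    return {g: roc_auc(labels, scores) for g, (labels, scores) in grouped.items()}


# Toy records: (lesions in patient, 1 = malignant / 0 = benign, model score).
toy_records = [
    (2, 1, 0.9), (1, 0, 0.2), (3, 1, 0.4), (2, 0, 0.6),
    (5, 1, 0.8), (4, 0, 0.3), (6, 1, 0.7), (5, 0, 0.1),
    (9, 1, 0.5), (8, 0, 0.5), (7, 1, 0.9), (10, 0, 0.3),
]
print(auc_by_group(toy_records, lesion_count_bin))
```

The same `auc_by_group` helper could be applied to cancer type by passing the cancer type as the first field of each record and an identity function as `group_of`.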

Although the model doesn't provide perfect performance, it could still be used to aid nuclear medicine physicians in analyzing bone lesions and interpreting exams, especially for patients who aren't able to receive timely SPECT/CT exams, according to the study authors.