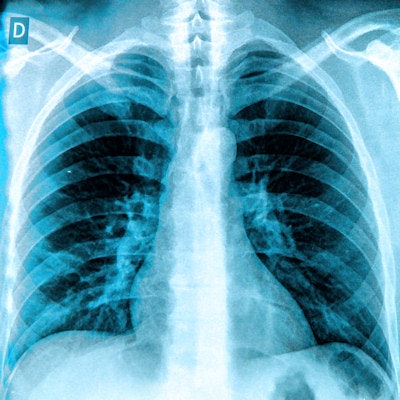

The performance of artificial intelligence (AI) algorithms for classifying chest x-rays dropped when the algorithms were applied to patient populations that differed from those on which they were trained, according to research published online March 5 in the Journal of Digital Imaging.

A team of researchers from Warren Alpert Medical School and Rhode Island Hospital in Providence trained two types of deep-learning algorithms on one of two chest radiograph datasets and then tested each model on the other, unseen dataset. On this external dataset, the models' accuracy, measured as area under the curve (AUC), was up to 5.2% lower than that of the models trained on that dataset.

As a result, institutions considering adopting AI algorithms should first validate their performance on local datasets, according to the team led by Ian Pan of Warren Alpert Medical School.

"We strongly recommend that algorithms be subject to an initial trial period where performance is first evaluated behind the scenes on local data before clinical deployment," the authors wrote. "A thorough understanding of differences between the training data and the implementation site's data is essential for proper clinical implementation of deep-learning models."
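As a rough illustration of what such a behind-the-scenes check might involve, the sketch below computes an AUC from locally collected studies and compares it with a vendor-reported figure. It is not the authors' method. It assumes that AUC means area under the receiver operating characteristic curve, and the function names, the toy data, and the 5% tolerance are all hypothetical choices made for the example.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as the probability that a random abnormal study
    outscores a random normal one (ties count as half).

    labels: 1 = abnormal, 0 = normal. scores: model outputs.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one normal and one abnormal study")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def passes_local_check(local_auc: float, reported_auc: float,
                       max_relative_drop: float = 0.05) -> bool:
    """Hypothetical acceptance rule: the local AUC may fall at most
    max_relative_drop (as a fraction) below the reported AUC."""
    return local_auc >= reported_auc * (1.0 - max_relative_drop)


# Toy shadow-mode log (made-up values): radiologist label and model score.
labels = [0, 0, 1, 1, 0, 1]
scores = [0.10, 0.40, 0.35, 0.80, 0.20, 0.90]

local_auc = roc_auc(labels, scores)
print(f"local AUC = {local_auc:.3f}")  # 8 of 9 pairs ranked correctly -> 0.889
```

The pairwise comparison is quadratic in the number of studies, which is fine for a sketch. A real evaluation would use a tested library routine and a sample large enough to give a meaningful confidence interval.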

The researchers sought to evaluate the effectiveness of convolutional neural networks (CNNs) for detecting abnormalities on chest radiographs and to investigate the generalizability of their models on data from independent sources. They used the DenseNet and MobileNetV2 CNN architectures to train, validate, and test models on one of two datasets: the U.S. National Institutes of Health (NIH) ChestX-ray14 and the Rhode Island Hospital (RIH) chest radiograph datasets.

AI accuracy for classifying radiographs as normal vs. abnormal

| | Performance on NIH dataset | Performance on RIH dataset |
| --- | --- | --- |
| NIH-DenseNet AUC | 0.900 | 0.924 |
| RIH-DenseNet AUC | 0.855 | 0.960 |
| Difference in performance on external dataset | -5% | -3.7% |
| NIH-MobileNetV2 AUC | 0.893 | 0.917 |
| RIH-MobileNetV2 AUC | 0.847 | 0.951 |
| Difference in performance on external dataset | -5.2% | -3.6% |

All performance differences were statistically significant (p < 0.05). According to the researchers, the results show that CNNs trained on one dataset of chest radiographs remain effective on independently collected chest radiographs, but with a slight drop in performance.
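The difference rows in the table above appear to be relative changes in AUC within each test dataset: the externally trained model's AUC is compared with the AUC of the model trained on that same dataset. That reading is inferred from the numbers rather than stated in the article. A short sketch that recomputes the figures under this assumption, with variable and function names chosen only for the example:

```python
# AUC values copied from the table, keyed by (training dataset, test dataset).
AUC = {
    "DenseNet": {
        ("NIH", "NIH"): 0.900, ("NIH", "RIH"): 0.924,
        ("RIH", "NIH"): 0.855, ("RIH", "RIH"): 0.960,
    },
    "MobileNetV2": {
        ("NIH", "NIH"): 0.893, ("NIH", "RIH"): 0.917,
        ("RIH", "NIH"): 0.847, ("RIH", "RIH"): 0.951,
    },
}


def relative_change_pct(internal_auc: float, external_auc: float) -> float:
    """Percent change of the externally trained model's AUC relative to
    the AUC of the model trained on the same dataset it is tested on."""
    return 100.0 * (external_auc - internal_auc) / internal_auc


drops = {}
for arch, results in AUC.items():
    for test_set, other in (("NIH", "RIH"), ("RIH", "NIH")):
        internal = results[(test_set, test_set)]  # trained and tested on test_set
        external = results[(other, test_set)]     # trained elsewhere, tested on test_set
        drops[(arch, test_set)] = relative_change_pct(internal, external)
        print(f"{arch} tested on {test_set}: {drops[(arch, test_set)]:.2f}%")
```

Under this assumption the recomputed values agree with the table to within rounding, including the largest drop of about 5.2% for MobileNetV2 on the NIH dataset.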

"This gives us confidence that CNNs are learning appropriate, generalizable features during the training process but whose predictions are biased to the training data," the authors wrote.

The researchers also noted that the mobile CNN architectures were effective for detecting abnormalities in chest radiographs.

"This opens the door to low-power CNN applications that can be directly embedded into the imaging apparatus itself to streamline the radiology AI workflow," the team added. "These results also suggest that the computational requirements of larger, deeper networks may not justify the performance gain for certain medical imaging classification tasks, particularly in settings with limited resources."