Augmented reality (AR) technologies may be on the verge of delivering a breakthrough in image-guided surgical procedure planning and navigation, according to researchers from Oregon Health and Science University (OHSU).

In a multi-institutional study, researchers led by radiology resident Dr. Ningcheng "Peter" Li of OHSU's Dotter Interventional Institute found that Microsoft's HoloLens 2 enabled users to register holographic 3D image models onto the body within seconds, paving the way for clinical adoption of these techniques.

"Our ultimate objective is to achieve real-time, automatic, and accurate holographic model registration for intraprocedural navigation and guidance," said Li, who presented the award-winning research at the recent American Roentgen Ray Society (ARRS) annual meeting.

3D cross-sectional imaging datasets such as CT and MRI have traditionally been displayed on 2D screens rather than visualized in true 3D environments, according to Li. Emerging technologies such as 3D printing and augmented reality are changing that dynamic, however.

"True 3D spatial navigation and visualization have been shown to improve anatomical learning, 3D spatial interpretation, and preoperative planning," Li said.

AR technologies have recently been piloted across multiple specialties, including neurosurgery, urology, and interventional radiology. However, clinical integration of AR has lagged behind research development, he said.

"This is mostly due to a lack of a good imaging registration process, which is crucial for eventual intraprocedural navigational guidance," he said.

Another obstacle was the limited capabilities of prior-generation augmented reality headsets. In late 2019, however, Microsoft introduced version 2 of its HoloLens, which brought enhancements such as a more ergonomic headset with a larger field of view, higher processing power, and articulated hand tracking. These design changes improved 3D spatial navigation and manipulation capabilities, according to Li.

In their study, the researchers sought to evaluate the time and accuracy of registering a holographic 3D model onto the real-world environment using HoloLens 2. Testing was performed by three attending radiologists, three trainees, and three medical students at two tertiary academic centers: OHSU and the Hospital of the University of Pennsylvania.

All participants performed three consecutive registration attempts with each of three manual methods: a one-handed gesture, a two-handed gesture, and an Xbox controller-mediated approach. These were compared with an automatic, marker-based registration method. The goal was to align a 3D holographic model to within 1 cm along each of the CT grid's x, y, and z planes.

Importantly, manual registration times on the HoloLens 2 decreased by more than 80% compared with times previously achieved with the HoloLens 1, according to Li.

However, the automatic method still produced the fastest mean registration time:

- Automatic registration: 5.3 seconds

- Two-handed gesture: 24.3 seconds

- One-handed gesture: 27.7 seconds

- Xbox controller: 72.8 seconds

Delving deeper into the data, the researchers noticed a significant improvement in manual registration times over the three consecutive attempts. For many participants, the third manual registration attempt required less than 10 seconds, a speed approaching that of the automatic registration method. There were also no significant differences in registration time or accuracy among participants of different experience levels.

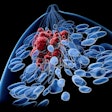

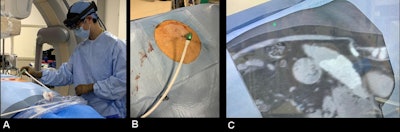

AR-assisted hepatic lesion microwave ablation using the Microsoft HoloLens 2 headset and Medivis SurgicalAR visualization system at OHSU. (A) Dr. Brian Park wearing the Microsoft HoloLens 2 headset during the procedure. (B) The real-world view through the operator's eyes. (C) The real-world view overlaid with an AR 3D hologram generated from a preprocedural CT scan. All images and caption courtesy of Dr. Ningcheng "Peter" Li.

The authors acknowledged several limitations of their study, including its evaluation of only stationary targets and its assessment of accuracy on a 2D plane. In addition, background lighting and other environmental variables were not controlled for, Li said. The automatic method was also limited by its reliance on nonsterile image markers, and there was a significant lag between visualization of the CT grid and successful registration with the HoloLens.

To reduce the registration lag, the researchers have since developed custom code that enables the HoloLens 2 to recognize two 2-cm printed sterile markers bearing simple QR codes. These markers enable near real-time tracking, Li said.

"Imagine using that for holographic model registration on a patient for procedure planning and guidance," he said.

Augmented reality may represent another breakthrough in procedural visualization, Li said.

"I truly believe that if we could revolutionize the way we visualize, we can revolutionize the way we intervene," Li said.