Nearly one out of every four PACS sold today is a replacement for an existing PACS. Just as people entering second marriages bring baggage along with them, so, too, do replacement PACS.

That baggage is the studies that reside in the existing archive. These prior studies need to be transferred to the new archive if possible, so they can be accessed by the radiologists for comparison. Unfortunately, this is often much easier said than done.

Many issues relating to data migration need to be considered. First and foremost is the way the data are stored. In an ideal situation, image data are stored uncompressed in the DICOM Part 10 file format, which includes both image and header information. Unfortunately, ideal situations are typically few and far between, especially when dealing with data that are five or more years old.

Several years ago, many companies also used lossy compression in their archives. Even today, nearly all PACS vendors still offer lossy compression as an archive storage option, although storage costs have dropped so significantly that the need to compress has been all but eliminated.

The use of lossy compression isn't bad in itself; the problem is that most lossy compression years ago was highly proprietary (i.e., unique to each vendor) and didn't support the standards-based JPEG 2000 compression algorithms in use today. Even with standards-based compression available, a few vendors still use proprietary lossy compression. This means that a simple data migration isn't possible without the vendor's decompression subroutine.

Storing images using lossy compression has yet another downside. Once an image has been lossy-compressed, it cannot be decompressed and recompressed without further degradation, such as pixel blocking, which is the creation of artifacts that were not part of the original image. This occurs even with the newer standards-based JPEG 2000 compression, although its wavelet-based artifacts tend to show up as blurring or ringing rather than distinct blocks.

One major advantage of using JPEG 2000, though, is that a separate proprietary decompression subroutine isn't required, since most vendors that support JPEG 2000 have the decompression subroutines built into their software, much as they do with standard JPEG images.

While the images are the largest part of data migration to consider, they are also the easiest to address. Two other areas also need attention: creating a searchable database and migrating meta tags.

In the initial archive, the database is usually created from information transferred from the radiology information system (RIS) to the imaging modality and from the modality to the PACS. The RIS helps create some of the DICOM header information that, when combined with information generated from the modality, creates the full DICOM header. This in turn is transferred over to the archive along with the image information.

When doing data migration, you can perform a reconciliation against the RIS if desired. While doing this adds a considerable degree of accuracy, it also significantly slows down the migration and almost doubles the migration cost as well. It is usually unnecessary to reconcile against the RIS if the data came from the RIS initially.

A key variable here is which DICOM elements the vendor supports. DICOM identifies mandatory data elements that need to be supported (study date, user ID, modality, patient name, medical record number, date of birth, etc.) and optional fields (accession numbers, phone numbers, etc.), and these are recorded in the DICOM header.

It's unnecessary to have a detailed understanding of DICOM, but it is important to understand that not every vendor captures and stores DICOM header information in the same manner or provides exactly the same information. The existing vendor may store certain data in the DICOM header that will not be transferred to the new system if the new vendor doesn't support them. This is why it is so crucial to share DICOM conformance statements and to know exactly what each vendor supports.
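To make the conformance-statement comparison concrete, here is a minimal Python sketch. It assumes you have already transcribed, by hand, which header elements each vendor stores; the two supported-element sets below are hypothetical. The tags and keywords are standard DICOM attributes, and a simple set difference shows what would be left behind.

```python
# Standard DICOM attribute tags (group, element) and their keywords,
# covering some of the header elements mentioned above.
DICOM_TAGS = {
    (0x0008, 0x0020): "StudyDate",
    (0x0008, 0x0050): "AccessionNumber",
    (0x0008, 0x0060): "Modality",
    (0x0010, 0x0010): "PatientName",
    (0x0010, 0x0020): "PatientID",          # medical record number
    (0x0010, 0x0030): "PatientBirthDate",
    (0x0010, 0x2154): "PatientTelephoneNumbers",
}


def unsupported_elements(old_vendor: set, new_vendor: set) -> list:
    """List elements the existing PACS stores but the new PACS does not
    support, formatted as '(gggg,eeee) Keyword' and sorted by tag."""
    missing = sorted(old_vendor - new_vendor)
    return [f"({g:04X},{e:04X}) {DICOM_TAGS.get((g, e), 'Unknown')}"
            for g, e in missing]


# Hypothetical example: the new vendor does not keep accession or phone numbers.
old_pacs = set(DICOM_TAGS)
new_pacs = old_pacs - {(0x0008, 0x0050), (0x0010, 0x2154)}
print(unsupported_elements(old_pacs, new_pacs))
# ['(0008,0050) AccessionNumber', '(0010,2154) PatientTelephoneNumbers']
```

In practice the comparison is done against the vendors' published conformance statements; the code only formalizes the "what gets dropped" question.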

Meta tags deal with things like annotations, key image notes (KIN), grayscale softcopy presentation states (GSPS), and other related areas. Virtually every PACS vendor stores these in a proprietary fashion today, and transferring them to the new archive is either impossible or exceedingly difficult.

This will no doubt change with the adoption of IHE (Integrating the Healthcare Enterprise) standards that address these areas, but until then it's a fertile area for software conversion development. Luckily, annotation is used sparingly, and key image selection is typically reserved for MR and CT and, even then, used on a very limited basis.

Timing is another issue that needs to be addressed. Most data migration is notoriously slow, running at about 100,000 to 150,000 studies per month. If you factor in the time it takes for data cleaning and/or data reconciliation, that number can easily be halved. You also need to factor in the time available to do the migration.

Migration also places a load on archive retrievals and on the network, so both play a role in data migration time. This can extend the migration time frame even further if the migration can be done only during low-volume reading hours (usually 6 p.m. to 8 a.m.). If a facility does 100,000 studies a year, data migration can easily take three to six months, perhaps even longer.
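The arithmetic behind these estimates can be sketched in a few lines of Python. This assumes the quoted monthly throughput applies when migration runs around the clock, that cleanup or reconciliation scales the rate by a fixed factor, and that restricting migration to an evening window scales it in proportion to the hours allowed; real throughput rarely behaves that linearly.

```python
def migration_months(total_studies: int,
                     studies_per_month: float,
                     cleanup_factor: float = 1.0,
                     window_hours_per_day: float = 24.0) -> float:
    """Estimate calendar months needed to migrate `total_studies`.

    studies_per_month: throughput if migration ran 24 hours a day (assumption).
    cleanup_factor: fraction of throughput left after data cleaning or
        reconciliation; 0.5 models the "halved" case.
    window_hours_per_day: hours per day migration may run,
        e.g. 14 for a 6 p.m. to 8 a.m. window.
    """
    if studies_per_month <= 0 or not 0 < cleanup_factor <= 1:
        raise ValueError("studies_per_month must be positive and "
                         "cleanup_factor must be in (0, 1]")
    if not 0 < window_hours_per_day <= 24:
        raise ValueError("window_hours_per_day must be in (0, 24]")
    effective_rate = studies_per_month * cleanup_factor * (window_hours_per_day / 24)
    return total_studies / effective_rate


# One year of studies at 100,000/month, halved by cleanup, evenings only.
print(round(migration_months(100_000, 100_000,
                             cleanup_factor=0.5, window_hours_per_day=14), 1))  # 3.4
```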

In addition, the replacement PACS archive manager needs to be in place to create the PACS database. The company doing the PACS data migration extracts the DICOM header information, which in turn allows the new PACS provider to create the database. Obviously, the more information you can provide, the better the search parameters in the database will be.
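Here is a minimal sketch of building a searchable database from extracted header fields, using Python's built-in sqlite3 module. The input dictionaries stand in for the output of a header-extraction step, and the table layout, UIDs, and patient data are made up for illustration; a real PACS database schema is vendor-specific. Note that a field missing from the header is simply NULL and cannot be searched on, which is why more header information means better search parameters.

```python
import sqlite3


def build_study_index(headers):
    """Build an in-memory, searchable study index from extracted header fields.

    `headers` is an iterable of dicts keyed by DICOM keywords (hypothetical
    output of a header-extraction step). Missing keys are stored as NULL.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE studies ("
        " study_uid TEXT PRIMARY KEY, patient_id TEXT, patient_name TEXT,"
        " study_date TEXT, modality TEXT, accession TEXT)"
    )
    conn.executemany(
        "INSERT INTO studies VALUES (?, ?, ?, ?, ?, ?)",
        [
            (h.get("StudyInstanceUID"), h.get("PatientID"), h.get("PatientName"),
             h.get("StudyDate"), h.get("Modality"), h.get("AccessionNumber"))
            for h in headers
        ],
    )
    conn.execute("CREATE INDEX idx_patient ON studies (patient_id)")
    return conn


# Hypothetical extracted headers for one patient's two studies.
headers = [
    {"StudyInstanceUID": "1.2.3.1", "PatientID": "MRN001",
     "PatientName": "DOE^JANE", "StudyDate": "20070115", "Modality": "CT"},
    {"StudyInstanceUID": "1.2.3.2", "PatientID": "MRN001",
     "PatientName": "DOE^JANE", "StudyDate": "20050302", "Modality": "MR",
     "AccessionNumber": "A100"},
]
conn = build_study_index(headers)
rows = conn.execute(
    "SELECT study_date, modality FROM studies"
    " WHERE patient_id = ? ORDER BY study_date DESC", ("MRN001",)
).fetchall()
print(rows)  # [('20070115', 'CT'), ('20050302', 'MR')]
```

The same approach is what makes it possible, in principle, to rebuild a lost database from properly stored DICOM headers.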

Because of the time it takes to do a data migration, you typically want to migrate in descending order (from the most recent studies to the oldest). That way, if the migration takes longer than expected, you have the most current studies available.
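Descending-order migration amounts to sorting the work queue by study date, newest first. In this sketch, studies are hypothetical (UID, date) pairs with dates in the DICOM DA format (YYYYMMDD); putting studies with a missing or invalid date at the end of the queue is an assumption, not a rule.

```python
from datetime import date


def migration_order(studies):
    """Return studies sorted newest first.

    Each study is a (study_uid, study_date) pair, with study_date in the
    DICOM DA format YYYYMMDD. Studies whose date is missing or invalid are
    queued last (an assumption; they may need manual review).
    """
    def sort_key(study):
        _, raw = study
        try:
            parsed = date(int(raw[:4]), int(raw[4:6]), int(raw[6:8]))
        except (TypeError, ValueError):
            return (1, 0)                 # undated: after all dated studies
        return (0, -parsed.toordinal())   # dated: newest first

    return sorted(studies, key=sort_key)


queue = [("1.2.3.1", "20050302"), ("1.2.3.2", None),
         ("1.2.3.3", "20070115"), ("1.2.3.4", "20061130")]
print([uid for uid, _ in migration_order(queue)])
# ['1.2.3.3', '1.2.3.4', '1.2.3.1', '1.2.3.2']
```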

Basic data migration typically costs between $0.25 and $0.45 per study, and can be significantly higher depending on the options selected, the volume and condition of the data being migrated, the migration source, and other variables.
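Applying the quoted per-study range to a study count gives a quick budget bracket. The 500,000-study volume below is hypothetical (for example, five years at 100,000 studies a year), and the result covers basic migration only.

```python
def migration_cost_range(num_studies: int,
                         low_per_study: float = 0.25,
                         high_per_study: float = 0.45) -> tuple:
    """Return (low, high) basic migration cost in dollars.

    Defaults are the per-study range quoted above; options, data condition,
    and migration source can push the real figure well above the high end.
    """
    if num_studies < 0:
        raise ValueError("num_studies must be non-negative")
    return (round(num_studies * low_per_study, 2),
            round(num_studies * high_per_study, 2))


print(migration_cost_range(500_000))  # (125000.0, 225000.0)
```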

Archiving models

Archiving today takes three different forms: direct-attached storage (DAS), network-attached storage (NAS), and storage area network (SAN). Some vendors store everything on spinning disks (DAS), while the majority use external archives (NAS and SAN) as their permanent archive.

DAS are storage repositories directly attached to servers or workstations, and are also known as spinning disks or hard drives. DAS are typically clustered (devices linked together) and require at least two devices: a server and a storage bay. DAS has capacity limitations and can still "crash" even with redundancy built in.

DAS solutions also do not allow for the flexible use of storage resources throughout a company or for remote backup of disks that are physically located in your host machine. DAS also consumes a lot of resources to maintain an acceptable uptime level (99.99% or higher).
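It helps to translate an uptime percentage into allowable downtime. A short sketch, assuming a 365-day year and counting all downtime, planned or not, against the target:

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; ignores leap years


def allowed_downtime_minutes(uptime_percent: float) -> float:
    """Total downtime per year permitted by an uptime target."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return MINUTES_PER_YEAR * (1 - uptime_percent / 100)


print(round(allowed_downtime_minutes(99.99), 1))       # 52.6 minutes a year
print(round(allowed_downtime_minutes(99.9) / 60, 1))   # 8.8 hours a year
```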

NAS are file-based devices that "own" the data on them, and they are typically connected to a Windows-based computer. NAS devices are specialized servers dedicated to storage that connect to other devices through Ethernet adapters. Simple to use, NAS is best suited to file-based data, such as Word documents and spreadsheets, while SAN is better suited for large amounts of data such as database files and images.

A NAS is typically one device (a server with extended-capacity storage, optimized for network sharing) that serves data to clients using file-sharing protocols, such as NFS or CIFS, over TCP/IP. NAS is less expensive than SAN (roughly one-third the cost), easier to configure, and uses existing network resources. Small implementations of a few nodes are also relatively simple to configure.

The downside of NAS is that, as more NAS nodes are added, the complexity of interconnecting them requires increasing support effort. For this reason, NAS tends to require support resources in similar proportions as DAS, although the scalability, recoverability, security, performance, availability, and manageability that NAS delivers are far superior to what is available in DAS environments.

A SAN is a block-based device in which the host, rather than the storage device, owns the data. The host is in charge of partitioning, formatting, and accessing the logical unit numbers (LUNs). You can access a SAN via two protocols: iSCSI (TCP/IP) and/or Fibre Channel (FC).

SAN is the most expensive of the three options, almost three times the cost of NAS, but has the benefit of fast transfer times that are critical for large studies such as multislice CT and digital mammography. SANs are usually the topology of choice for high-speed, large-volume data transfers; however, file-based applications without this requirement may be more effectively implemented and managed on NAS topologies. NAS is able to leverage the existing IP network, thereby avoiding one of the major SAN investments, Fibre Channel.

SAN and NAS solutions have recently benefited from various techniques for maximizing use of the storage media, making them much more cost-efficient than traditional DAS solutions. SAN and NAS have also evolved to be complementary rather than competing solutions. NAS solutions that back up file-based storage are often the front end of SANs, which back up the NAS data to a remote disaster recovery site and also back up block-level storage from database applications.

When we talk about "block-based data," we are referring to the access mechanism used by applications that reach their data through the Small Computer System Interface (SCSI) protocol, which operating systems use to "talk" to the disk drives where the data are stored. The SCSI protocol transmits data in "blocks," unlike IP, which transmits data in "packets." So block-based data are the data stored on disk drives in their native format.

File-based data are also stored on disk drives, but access to file-based data is normally done over an Ethernet network rather than a storage network. NAS uses file access protocols over TCP/IP (the underlying network transmission protocol) to "talk" to individual "files" stored on disk drives. File-based data are the actual individual files stored on the drives.

There is much more overhead associated with accessing individual files than with raw block transfers of data to disk. This is why high-performance databases almost always access data using "block" access rather than "file" access. To simplify, associate "file" access with NAS devices and "block" access with SAN devices.
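The difference between the two access styles can be illustrated with a toy model in Python. This is not SCSI, NFS, or CIFS; it is a hypothetical in-memory "disk" addressed only by block number, with a simple file layer on top. The file layer has to consult its own table before touching any blocks, which is the kind of extra work that makes file access costlier than raw block access. The 512-byte block size is an assumption.

```python
BLOCK_SIZE = 512  # bytes per block (assumed; a common disk sector size)


class ToyDisk:
    """Stand-in for a block device: data are addressed only by block
    number (logical block address), with no notion of files."""

    def __init__(self, num_blocks: int):
        self.data = bytearray(num_blocks * BLOCK_SIZE)

    def _offset(self, lba: int) -> int:
        if not 0 <= lba < len(self.data) // BLOCK_SIZE:
            raise IndexError("block number out of range")
        return lba * BLOCK_SIZE

    def write_block(self, lba: int, payload: bytes) -> None:
        if len(payload) > BLOCK_SIZE:
            raise ValueError("payload larger than one block")
        start = self._offset(lba)
        self.data[start:start + BLOCK_SIZE] = payload.ljust(BLOCK_SIZE, b"\0")

    def read_block(self, lba: int) -> bytes:
        start = self._offset(lba)
        return bytes(self.data[start:start + BLOCK_SIZE])


class ToyFileServer:
    """File layer on top of the disk: every read or write goes through a
    name-to-blocks lookup before any block is touched."""

    def __init__(self, disk: ToyDisk):
        self.disk = disk
        self.table = {}     # file name -> (list of block numbers, byte length)
        self.next_free = 0

    def write_file(self, name: str, content: bytes) -> None:
        blocks = []
        for offset in range(0, len(content), BLOCK_SIZE):
            self.disk.write_block(self.next_free, content[offset:offset + BLOCK_SIZE])
            blocks.append(self.next_free)
            self.next_free += 1
        self.table[name] = (blocks, len(content))

    def read_file(self, name: str) -> bytes:
        blocks, length = self.table[name]
        return b"".join(self.disk.read_block(b) for b in blocks)[:length]


disk = ToyDisk(16)
files = ToyFileServer(disk)
report = b"CT CHEST " * 100          # 900 bytes, spans two blocks
files.write_file("report.txt", report)
print(files.read_file("report.txt") == report)  # True (file access)
print(disk.read_block(0)[:8])                    # b'CT CHEST' (block access)
```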

Unified storage

Today, many mid- to large-size PACS implementations use both NAS and SAN from a single storage platform. This is known as unified storage (sometimes referred to as network unified storage, or NUS). Unified storage makes it possible to run and manage files and applications from a single device. To this end, a unified storage system consolidates file- and block-based access in a single storage platform and supports Fibre Channel, IP-based SAN (iSCSI), and NAS.

A unified storage system simultaneously enables storage of file data and handles the block-based input/output (I/O) of enterprise applications. In actual practice, unified storage is often implemented in a NAS platform modified to add block-mode support. Numerous other products based on Microsoft's Windows Unified Data Storage Server (WUDSS) have been configured to support both block and file I/O.

One advantage of unified storage is reduced hardware requirements. Instead of separate storage platforms, such as NAS for file-based storage and a RAID (redundant array of independent disks) for block-based storage, unified storage combines both modes in a single device. Alternatively, a single device could be deployed for either file or block storage as required.

In addition to lower capital expenditures for the enterprise, unified storage systems can also be simpler to manage than separate products. However, the actual management overhead depends on the full complement of features and functionality provided in the platform. Furthermore, unified storage often limits the level of control over file-based versus block-based I/O, potentially leading to reduced or variable storage performance. For these reasons, mission-critical applications such as those in healthcare should continue to be deployed on block-based storage systems (i.e., SAN).

Unified storage systems generally cost the same and enjoy the same level of reliability as NAS and SAN alone. Users can also benefit from advanced features, such as replication, although heterogeneous support between different storage platforms should be considered closely. While more companies will no doubt adopt unified storage products, it is likely that dedicated block-based storage systems will remain a popular choice when consistent high performance and fine control granularity are important considerations.

The best archive for you

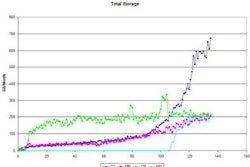

The choices are many in defining what type of archive is best for you, but remember to factor in prior studies (which can easily double your overall storage requirements), modality upgrades that increase study file sizes, and the length of time studies need to be saved. The simple act of moving from a 16- to a 64-slice CT can easily increase storage requirements tenfold. Pediatric studies have exceptionally long retention requirements, while digital mammography studies consume 40-50 MB per study and can tolerate only lossless compression.
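A rough sizing formula multiplies annual volume, average study size, retention period, and the growth from migrated priors. The figures in this sketch are hypothetical, chosen only to show how a tenfold jump in study size, such as the 16- to 64-slice CT upgrade described above, carries straight through to the total.

```python
def storage_needed_tb(studies_per_year: int,
                      avg_study_mb: float,
                      retention_years: float,
                      prior_multiplier: float = 1.0,
                      compression_ratio: float = 1.0) -> float:
    """Rough archive size in terabytes (decimal: 1 TB = 1,000,000 MB).

    prior_multiplier: growth from migrated priors (2.0 models priors
        doubling the requirement).
    compression_ratio: e.g. 2.0 for an assumed 2:1 lossless compression.
    """
    if compression_ratio <= 0:
        raise ValueError("compression_ratio must be positive")
    raw_mb = studies_per_year * avg_study_mb * retention_years * prior_multiplier
    return raw_mb / compression_ratio / 1_000_000


# Hypothetical CT service: 10,000 studies a year, 7-year retention, priors double it.
print(storage_needed_tb(10_000, 50, 7, prior_multiplier=2.0))   # 7.0 TB
print(storage_needed_tb(10_000, 500, 7, prior_multiplier=2.0))  # 70.0 TB after a 10x size jump
```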

There is no set rule or storage-size threshold for deciding whether to use DAS, NAS, or SAN; all the factors need to be combined to see what makes sense from a financial perspective. If the benefits a SAN offers outweigh the initial capital outlay, it should pay for itself in a reasonable period of time. The larger the enterprise, the larger the benefits will usually be.
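One simple way to test whether a SAN "pays for itself in a reasonable period of time" is an undiscounted payback calculation. The dollar figures here are placeholders for your own estimates, and the sketch ignores the time value of money.

```python
def payback_years(capital_outlay: float, annual_savings: float,
                  annual_extra_opex: float = 0.0) -> float:
    """Simple (undiscounted) payback period, in years, for a storage investment.

    annual_savings: yearly benefit versus the alternative, such as reduced
        administration or avoided downtime (your own estimate).
    annual_extra_opex: added yearly running cost of the new option.
    Returns float('inf') if the investment never pays for itself.
    """
    net = annual_savings - annual_extra_opex
    if net <= 0:
        return float("inf")
    return capital_outlay / net


# Placeholder figures: $300,000 outlay, $120,000/yr saved, $20,000/yr extra upkeep.
print(payback_years(300_000, 120_000, 20_000))  # 3.0
```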

If you're a small enterprise, have limited data storage requirements, and backup is not a problem, then you probably do not need a SAN. If backup is a challenge or you need to share disks because you are implementing server clustering, or it's a nightmare to manage the data on all your internal storage, then a SAN would make sense.
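The rules of thumb above can be written down as a small decision function. This is only an encoding of this column's heuristics, with the field names invented for illustration; anything that falls between the two cases goes back to the financial comparison.

```python
from dataclasses import dataclass


@dataclass
class StorageSituation:
    small_enterprise: bool
    limited_storage_needs: bool
    backup_is_a_problem: bool
    needs_shared_disks_for_clustering: bool
    internal_storage_hard_to_manage: bool


def san_recommendation(s: StorageSituation) -> str:
    """Apply the column's rules of thumb for whether a SAN makes sense."""
    if (s.backup_is_a_problem or s.needs_shared_disks_for_clustering
            or s.internal_storage_hard_to_manage):
        return "SAN likely makes sense"
    if s.small_enterprise and s.limited_storage_needs:
        return "SAN probably unnecessary"
    return "run the cost comparison"


print(san_recommendation(StorageSituation(True, True, False, False, False)))
# SAN probably unnecessary
```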

One other option to consider is the use of off-site archive services to provide online or near-line image access or as a disaster recovery (DR) option. Also known as SSPs (storage service providers), these companies store images in centralized off-site storage facilities and transmit images back to the client “on demand” via a high-speed Internet-based network. The off-site facilities also offer redundancies that eliminate the single points of failure that may be present in a standard archive implementation. In addition, this model may offer data migration advantages.

It would be remiss not to touch briefly on DR. In a nutshell, there are two types of DR: onsite and offsite. Each has its own advantages, benefits, and costs. The form and format really matter only when you are transferring the data from DR back to the archive (tape is the slowest, and replicated data from a DAS, NAS, or SAN is the fastest, but also the costliest).
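Restore time is essentially data volume divided by sustained throughput. In this sketch the archive size and throughput figures are hypothetical placeholders, not measured rates for tape or replicated disk; plug in what your vendors actually quote.

```python
def restore_hours(archive_tb: float, throughput_mb_per_s: float) -> float:
    """Hours to copy `archive_tb` terabytes back at a sustained throughput.

    Uses decimal units (1 TB = 1,000,000 MB) and assumes the rate holds
    for the whole transfer.
    """
    if throughput_mb_per_s <= 0:
        raise ValueError("throughput_mb_per_s must be positive")
    return archive_tb * 1_000_000 / throughput_mb_per_s / 3600


# Hypothetical 20 TB archive at two placeholder restore rates.
print(round(restore_hours(20, 100), 1))  # 55.6 hours
print(round(restore_hours(20, 400), 1))  # 13.9 hours
```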

Offsite archival is the safest, but it also has a somewhat higher cost, although providers typically factor in the cost to repopulate the archive when needed. This is worth its weight in gold in situations such as a natural disaster that destroys the data center. Make sure the database is backed up as well, although, if the data are stored properly, the database can be recreated from the DICOM header information.

Now that you are armed with information about data migration and archive options, do you need to include archive specifications in your RFP? In a word, no. A vendor may offer various archive options, but all you need to do is evaluate your requirements against the total cost of ownership and base your decision accordingly.

By Michael J. Cannavo

AuntMinnie.com contributing writer

October 22, 2007

Michael J. Cannavo is a leading PACS consultant and has authored nearly 300 articles on PACS technology in the past 15 years.

The comments and observations expressed herein do not necessarily reflect the opinions of AuntMinnie.com, nor should they be construed as an endorsement or admonishment of any particular vendor, analyst, industry consultant, or consulting group. Rather, they should be taken as the personal observations of a guy who has, by his own account, been in this industry way too long.


Copyright © 2007 AuntMinnie.com