Natural language processing (NLP), a form of AI, can uncover racial bias in documenting radiology-confirmed ob/gyn findings, a study published April 21 in Clinical Imaging reported.

Researchers led by Shoshana Haberman, MD, PhD, from Maimonides Medical Center in Brooklyn, NY, incorporated NLP-derived data into a platform for identifying ultrasound- or MRI-confirmed uterine fibroids during pregnancy. They found that Black patients were more likely to have the obstetrical diagnosis entered late into their charts or to have no documentation of the diagnosis at all.

“All providers should be committed to quality documentation of clinically relevant diagnoses and quality of care, without regard to race,” Haberman and co-authors wrote.

Racial bias can surface in medical records and can lead to flawed documentation. The researchers noted that determining whether racial disparities exist in documentation and in quality of care is an important step toward improving performance.

NLP allows free-text clinical documentation to be integrated in ways that facilitate data analysis and interpretation and that support individualized medical and obstetrical care.

For their study, Haberman and colleagues identified all births between 2019 and 2021 carrying a radiology-confirmed diagnosis of fibroid uterus in pregnancy with fibroids 5 cm or larger, detected by either pelvic ultrasound or MRI. The researchers queried the records with an NLP platform and compared the results with non-NLP-derived data based on ICD-10 codes for the same diagnosis. They then stratified the documentation gaps between the two datasets by race.
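The comparison step can be pictured as set arithmetic over patient identifiers. The sketch below is a minimal illustration, assuming each query returns a set of patient IDs and that race is available from a demographics lookup; the helper `stratify_nlp_only`, the `race_by_patient` mapping, and the toy IDs are all hypothetical and do not describe the study's actual platform.

```python
from collections import Counter


def stratify_nlp_only(nlp_ids, structured_ids, race_by_patient):
    """Return patients found only by the NLP query and their counts by race.

    Hypothetical helper: `structured_ids` stands in for the non-NLP cohort
    (ICD-10 codes plus structured problem lists).
    """
    # Patients the NLP query found but the structured (non-NLP) data missed
    nlp_only = nlp_ids - structured_ids
    # Tally those patients by race using the (assumed) demographics lookup
    counts = Counter(race_by_patient[pid] for pid in nlp_only)
    return nlp_only, counts


# Toy, made-up data for illustration only
nlp_ids = {"p1", "p2", "p3", "p4", "p5"}
structured_ids = {"p1", "p4"}
race_by_patient = {
    "p1": "Black", "p2": "Black", "p3": "White", "p4": "Asian", "p5": "Black",
}

nlp_only, counts = stratify_nlp_only(nlp_ids, structured_ids, race_by_patient)
print(sorted(nlp_only))                              # ['p2', 'p3', 'p5']
print(counts["Black"], counts["White"], counts["Asian"])  # 2 1 0
```

In the study, not every NLP-only patient counted as a documentation gap: about 5% lacked radiological confirmation, so a real pipeline would need a validation filter before stratifying by race.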

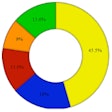

The study included data from 14,441 charts. The non-NLP-derived data captured 374 patients: 217 from ICD-10 codes and 157 from structured problem lists. In contrast, the NLP-derived data captured 1,000 patients with radiological confirmation of a large fibroid uterus in pregnancy, of whom 469 were Black, 355 were white, and 176 were Asian.

Of the 626 patients detected only by the NLP-based query, 595 (95%) had some form of documentation gap, ranging from late to absent documentation of the diagnosis. The remaining 5% were patients whose charts mentioned fibroids but did not include radiological confirmation.

Finally, the team reported the following racial breakdown of the 595 patients with documentation gaps and radiologic evidence of fibroids: 428 (72%) were Black, 83 (14%) were white, and 83 (14%) were Asian (p < 0.001).
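The reported counts can be cross-checked with simple arithmetic. The sketch below recomputes the subtotals and percentages from the figures quoted above; it assumes that all 374 structured-data patients are contained within the 1,000 NLP-identified patients (consistent with the reported 626 NLP-only count) and that percentages are rounded to whole numbers. The variable names and the `pct` helper are illustrative only.

```python
def pct(part, whole):
    """Percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


# Counts as reported in the article
icd10 = 217
problem_list = 157
nlp_total = 1000
nlp_by_race = {"Black": 469, "White": 355, "Asian": 176}
gaps_total = 595
gaps_by_race = {"Black": 428, "White": 83, "Asian": 83}

# Non-NLP cohort: ICD-10 codes plus structured problem lists
non_nlp_total = icd10 + problem_list

# Assumption: every non-NLP patient was also found by the NLP query
nlp_only = nlp_total - non_nlp_total

print(non_nlp_total, nlp_only)           # 374 626
print(sum(nlp_by_race.values()))         # 1000
print(pct(gaps_total, nlp_only))         # 95
print({race: pct(n, gaps_total) for race, n in gaps_by_race.items()})
# {'Black': 72, 'White': 14, 'Asian': 14}
```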

The investigators suggested that documentation gaps may partly explain care disparities noted in previous reports on patients with symptoms of fibroid uterus. Those reports, they noted, had described the need to address healthcare disparities in managing uterine fibroids.

The authors also highlighted that NLP could help improve quality of care when paired with appropriate algorithm definitions, cross-referencing, and thorough validation steps, and that it can also help overcome documentation bias.

“Our study demonstrates the potential of NLP to identify and address racial bias in medical records, which could improve the diagnosis and treatment of patients with fibroid uterus in pregnancy,” the group wrote.

They also called for future research to evaluate how documentation gaps detected by NLP correlate with clinical outcomes and whether higher rates of documentation gaps lead to worse outcomes.
