A quality control (QC) management system can yield important benefits for radiology departments. But long-term success requires ongoing training and monitoring of how the system is used, according to research from the University of Maryland Health System.

After five years of experience with an online radiology QC management system, the Maryland researchers discovered that social factors such as inadequate training and variable use had an increasing impact on the efficacy of the system over time.

"Technology tools require focused attention to make sure that they are being used properly over time," said Christopher Meenan, director of clinical informatics at the University of Maryland School of Medicine and the University of Maryland Medical Center. "It's not enough just to release a QC tool such as this to your department or enterprise and expect it's going to be used consistently without care and nurturing."

He presented the institution's experience with a long-term QC management system for radiology during a session at the recent Society for Imaging Informatics in Medicine (SIIM) meeting in Orlando, FL.

QC can be complicated

Before radiology's digital era, feedback on quality control between radiologists and technologists was relatively straightforward because they worked in close proximity on a day-to-day basis. Now, however, real-time QC can be complicated by the distributed nature of team members, Meenan said.

In 2005, the University of Maryland's department of radiology installed Radtracker, an open-source automated tool designed to track QC issues. The next year, researchers performed a study on the use of the tool to investigate the types and frequency of QC issues that were submitted.

In 2011, the institution realized that use of the tool and the types of QC and workflow issues that were submitted had dramatically changed, requiring a complete re-evaluation of the department's use of the system.

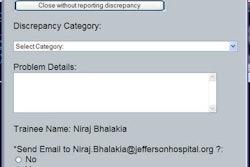

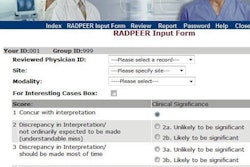

Integrated into the university's PACS client software and available by clicking on a "QC Tool" button, Radtracker pops up as a Web-based form and is prepopulated with exam and user information. Radiologists can select from a list of QC codes and also add comments.

Supervisors receive notification of QC issues and work with the team to resolve the problem, select the root cause if it wasn't selected by a radiologist, and enter updates. These updates are automatically communicated via email.
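The ticket lifecycle described above can be sketched in a few lines of Python. This is only an illustration: the article does not describe Radtracker's actual data model, so the `QCIssue` fields, the `supervisor_update` function, and the `notify` callback (a stand-in for the email step) are all hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class QCIssue:
    """Hypothetical QC ticket; field names are illustrative, not Radtracker's schema."""
    exam_id: str                       # prepopulated from the PACS client
    reported_by: str                   # prepopulated user information
    qc_code: str                       # chosen by the radiologist from a list
    comments: str = ""
    root_cause: Optional[str] = None   # may be left blank by the radiologist
    updates: List[str] = field(default_factory=list)
    closed: bool = False


def supervisor_update(
    issue: QCIssue,
    note: str,
    notify: Callable[[str], None],
    root_cause: Optional[str] = None,
    close: bool = False,
) -> None:
    """Record a supervisor update and pass it to a notification callback."""
    # The supervisor fills in the root cause only if none was selected earlier.
    if issue.root_cause is None and root_cause is not None:
        issue.root_cause = root_cause
    issue.updates.append(note)
    if close:
        issue.closed = True
    # Stand-in for the automatic email described in the article.
    notify(f"[{issue.exam_id}] {note}")


# Example: a list collects the messages that would otherwise be emailed.
sent_messages: List[str] = []
issue = QCIssue("EXAM-001", "radiologist_a", "Image quality: positioning")
supervisor_update(
    issue,
    "Repeat view requested",
    sent_messages.append,
    root_cause="Positioning error",
    close=True,
)
```

Passing the notifier in as a callback keeps the sketch testable without a mail server; a real deployment would presumably plug in its own messaging layer.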

To examine usage trends and identify opportunities for improvement, the researchers analyzed a three-month dataset from the system in 2011. Overall, 1,690 issues were reported during the period from April to July 2011.

Not completing the exam

The researchers found that the issue responsible for most QC problems was "Patient data: uncompleted in RIS," which was cited for more than half of issues. At their institution, a QC issue is automatically sent if the radiologist has finished the report before the technologist has completed the exam in the RIS, Meenan said.
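As a rough illustration of the automatic rule Meenan described, the check could look something like the following. The function name and the timestamp parameters are assumptions made for this sketch; the article does not say how the institution's RIS integration actually implements it.

```python
from datetime import datetime
from typing import Optional


def needs_auto_qc_issue(
    report_finished_at: datetime,
    exam_completed_in_ris_at: Optional[datetime],
) -> bool:
    """Return True if the report was finished before the exam was completed in the RIS.

    Hypothetical helper: a missing completion time is treated as "not yet completed".
    """
    if exam_completed_in_ris_at is None:
        return True
    return exam_completed_in_ris_at > report_finished_at


# Example timestamps (invented for illustration).
report_time = datetime(2011, 5, 2, 10, 30)
flag_never_completed = needs_auto_qc_issue(report_time, None)
flag_completed_late = needs_auto_qc_issue(report_time, datetime(2011, 5, 2, 11, 0))
flag_completed_first = needs_auto_qc_issue(report_time, datetime(2011, 5, 2, 10, 0))
```

Under these assumptions, the first two cases would trigger a QC issue and the third would not.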

Additional analysis showed the following top five causes of QC issues:

- Patient data: uncompleted in RIS -- 53.4%

- Communication: radiologist request -- 8.3%

- PACS: missing images -- 6.2%

- Image quality: positioning -- 4.8%

- Patient data: exam performed doesn't match billed exam -- 2.6%
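For a sense of scale, the percentages above can be converted into approximate issue counts using the 1,690 issues reported for the period. The counts below are estimates derived from the published percentages, not figures reported by the researchers.

```python
TOTAL_ISSUES = 1690  # issues reported during the 2011 study period

# Percentages as published for the top five causes.
top_causes = {
    "Patient data: uncompleted in RIS": 53.4,
    "Communication: radiologist request": 8.3,
    "PACS: missing images": 6.2,
    "Image quality: positioning": 4.8,
    "Patient data: exam performed doesn't match billed exam": 2.6,
}

# Estimated counts, rounded to the nearest whole issue.
approx_counts = {
    cause: round(TOTAL_ISSUES * pct / 100) for cause, pct in top_causes.items()
}

# Share of all issues covered by the top five, and the remainder.
top_five_share = round(sum(top_causes.values()), 1)
other_share = round(100 - top_five_share, 1)

for cause, count in approx_counts.items():
    print(f"{cause}: ~{count} issues")
print(f"Top five combined: {top_five_share}% (all other causes: {other_share}%)")
```

By this estimate, uncompleted exams in the RIS account for roughly 900 of the reported issues, and the top five causes together cover about three-quarters of the total.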

The failure to complete exams in the RIS is a big problem -- and an issue that the institution is still exploring, he said. Other issues, such as radiologists at the breast center using the system as a tool to communicate with technologists, weren't related to technologist behavior.

In addition, most of the missing-image issues in the PACS occurred during a migration of the institution's PACS archive. The researchers also found that some categories, such as scheduling, were used infrequently or not at all.

Tracking and training

Based on the analysis, the team developed a few recommendations.

"What we realized was that it's not enough to implement these information systems," he said. "You have to keep track of how the system is being used and make sure that people know what they're supposed to do."

There were also some training issues to tackle. Technologist supervisors at remote sites who were receiving QC issues were not aware of requirements such as closing tickets and assigning root causes, he said. The researchers also found that some technologist supervisors were worried about how those QC issues would be perceived and would often reassign them to a different QC category.

"What we realized was that, especially for distributed environments, it was really important to reach out to those technologist supervisors, especially to try to bridge that gap between radiologists and techs or even informaticists and techs who never really meet each other face to face," he said.

Also, some radiologists were not properly trained on the tool and how to use it correctly.

"The person who I trained in 2006 on how to use it had a pretty good idea still," he said. "But the new hire or the new attending who had come in, or one of our fellows or residents, had no idea how to use the tool, and they had other interesting ideas on how it should be used."

On the technical side, the group decided to clean up the Radtracker form and remove unneeded categories and fields.

"When QC tools such as [Radtracker] or other enterprise tools are used properly, they can have a huge benefit to the department," Meenan said. "But we learned some interesting things about the long-term use of our tool at the University of Maryland."