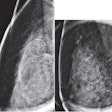

Screening mammography's benefits have been shown in clinical trials, but the full potential of the technology depends on the interpretive skills of the individual radiologists who read the exams, according to a new study published in the April issue of the American Journal of Roentgenology.

Measuring this skill requires established performance criteria, so that physicians whose performance falls short can be identified and encouraged to pursue more training, wrote lead author Diana Miglioretti, PhD, from the University of California, Davis, and colleagues.

"Outside the U.S., it's often specialists who do mammography, and they read 2,500 to 5,000 exams per year. Here, the minimum guidelines are 480 exams per year. If there are five cancers per 1,000 exams, some U.S. radiologists would only see two per year," Miglioretti told AuntMinnie.com. "With this study, we wanted to come up with criteria that could identify radiologists who aren't doing as well as they could be in interpreting mammograms, and might need additional training."
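The arithmetic behind the quote is simple: expected cancers per year equal annual reading volume times the cancer rate. Below is a minimal Python sketch. It uses the illustrative rate of five cancers per 1,000 exams from the quote, which is an assumption and not a measured rate for any particular practice. The function name is hypothetical.

```python
def expected_cancers_per_year(exams_per_year: int, cancers_per_1000: float = 5.0) -> float:
    """Expected number of cancers among a year's screening exams.

    Assumes a fixed, illustrative rate of cancers per 1,000 exams
    (5 per 1,000 by default, as in the quote).
    """
    return exams_per_year * cancers_per_1000 / 1000


# U.S. minimum volume vs. the range cited for specialists outside the U.S.
for volume in (480, 2_500, 5_000):
    print(f"{volume:>5} exams/year -> {expected_cancers_per_year(volume):.1f} cancers")
```

At the 480-exam minimum, this gives 2.4 cancers a year, the "two per year" in the quote. A reader at 2,500 to 5,000 exams would see roughly 12.5 to 25.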

Diana Miglioretti, PhD, from University of California, Davis.

The study updates previous work that Miglioretti's group published in 2010 in Radiology, which laid out criteria for acceptable interpretive performance of screening mammography, specifically sensitivity, specificity, cancer detection rate, recall rate, and positive predictive value of recall (May 2010, Vol. 255:2, pp. 354-361).

Why the need to update? According to the authors, the individual criteria should not be valued equally or judged in isolation. Combining them may give a better measure of a radiologist's performance, they wrote.

"After our first paper, we received some comments that indicated that these benchmarks can't be taken separately," Miglioretti said. "For example, maybe it's OK to have a high recall rate if you're finding a lot of cancers. So for this research, we considered the various factors in combination."

The group created two sets of criteria as the basis for calculating performance:

- Sensitivity and specificity for facilities with access to a complete list of breast cancers through links with cancer registries

- Cancer detection rate, recall rate, and positive predictive value of recall for facilities that cannot capture false-negative cancers but have reliable cancer follow-up data for positive mammograms only
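The two sets differ in which audit counts they require. The sketch below uses the standard screening-audit definitions:

- Sensitivity is the share of cancers that were recalled.
- Specificity is the share of cancer-free exams that were not recalled.
- Cancer detection rate is cancers found per 1,000 exams.
- Recall rate is the share of exams recalled.
- Positive predictive value of recall is the share of recalled exams that turned out to be cancer.

Cancer status is assumed to come from follow-up over a defined period after each exam, commonly one year. The `AuditCounts` structure, the function names, and the numbers are hypothetical illustrations, not study data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AuditCounts:
    """One radiologist's screening audit counts (hypothetical structure).

    "Recalled" means the screening exam was read as positive; cancer status
    comes from follow-up over a defined period after the exam.
    """
    total_exams: int
    recalled_with_cancer: int     # true positives
    recalled_without_cancer: int  # false positives
    missed_cancers: Optional[int] = None  # false negatives; None without registry linkage


def recall_based_metrics(c: AuditCounts) -> dict[str, float]:
    """Second set: needs only the exam total and follow-up of recalled exams."""
    recalls = c.recalled_with_cancer + c.recalled_without_cancer
    return {
        "cancer_detection_per_1000": 1000 * c.recalled_with_cancer / c.total_exams,
        "recall_rate_pct": 100 * recalls / c.total_exams,
        "ppv_of_recall_pct": 100 * c.recalled_with_cancer / recalls,
    }


def registry_metrics(c: AuditCounts) -> dict[str, float]:
    """First set: needs the false-negative count, i.e. a complete list of cancers."""
    if c.missed_cancers is None:
        raise ValueError("sensitivity/specificity need false negatives from a cancer registry")
    tp, fp, fn = c.recalled_with_cancer, c.recalled_without_cancer, c.missed_cancers
    tn = c.total_exams - tp - fp - fn  # not recalled and no cancer found
    return {
        "sensitivity_pct": 100 * tp / (tp + fn),
        "specificity_pct": 100 * tn / (tn + fp),
    }


# Hypothetical numbers for illustration only.
counts = AuditCounts(total_exams=2000, recalled_with_cancer=10,
                     recalled_without_cancer=190, missed_cancers=2)
print(recall_based_metrics(counts))
print(registry_metrics(counts))
```

Only `registry_metrics` needs `missed_cancers`. That is why the first set is limited to facilities that can link to cancer registries.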

Six breast imagers who had been involved in the 2010 research used a process based on the Angoff method, a technique test developers use to set a test's passing score. The breast imagers first proposed ranges of minimally acceptable performance for sensitivity and specificity. They then reviewed data on the actual performance of a sample of radiologists participating in the Breast Cancer Surveillance Consortium (BCSC) (AJR, April 2015, Vol. 204:4, pp. W486-491).
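In its classic test-setting form, the Angoff method has judges estimate, for each test item, the probability that a minimally competent candidate answers it correctly. The judges' estimates are averaged per item and summed into a passing score. The sketch below shows only that classic version, using made-up ratings. It is not the modified procedure the study's breast imagers used. They proposed ranges for audit measures and revisited them against BCSC performance data. The function name is hypothetical.

```python
from statistics import mean


def angoff_cut_score(ratings: list[list[float]]) -> float:
    """Classic Angoff cut score.

    ratings[j][i] is judge j's estimate of the probability that a minimally
    competent examinee answers item i correctly. The cut score is the sum,
    over items, of the judges' mean estimates.
    """
    n_items = len(ratings[0])
    if any(len(r) != n_items for r in ratings):
        raise ValueError("every judge must rate every item")
    return sum(mean(r[i] for r in ratings) for i in range(n_items))


# Hypothetical ratings: three judges, four test items.
ratings = [
    [0.6, 0.8, 0.5, 0.9],
    [0.7, 0.7, 0.4, 0.8],
    [0.5, 0.9, 0.6, 0.7],
]
print(f"Passing score: {angoff_cut_score(ratings):.2f} of {len(ratings[0])} items")
```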

Miglioretti and colleagues used these findings to update their criteria for acceptable mammography performance. They then compared how the BCSC radiologists fared under the original 2010 criteria and under each of the two new sets. Of the 486 BCSC radiologists included in the data, 13% read between 1,000 and 1,499 mammography exams, 22% read 1,500 to 2,499 exams, 27% read 2,500 to 4,999 exams, and 38% read 5,000 or more.

Radiologists meeting criteria for acceptable mammography performance

| Criteria | Sensitivity (%) | Specificity (%) | BCSC radiologists who met criteria (%) |
|---|---|---|---|
| Original 2010 | ≥ 75 | 88-95 | 51 |
| Updated criteria 1 | ≥ 80 | ≥ 85 | 62 |
| Updated criteria 2 | 75-79 | 88-97 | 7 |
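To see how the combined benchmarks differ from the 2010 ones, the table rows can be written as simple checks. This is a minimal sketch that assumes a radiologist meeting either updated row counts as acceptable. It also reads "75-79" as 75 ≤ sensitivity < 80 for non-integer values. The function names and example values are hypothetical.

```python
def meets_2010_criteria(sensitivity_pct: float, specificity_pct: float) -> bool:
    """Original 2010 benchmark: sensitivity >= 75% and specificity 88-95%."""
    return sensitivity_pct >= 75 and 88 <= specificity_pct <= 95


def meets_updated_criteria(sensitivity_pct: float, specificity_pct: float) -> bool:
    """Updated benchmark, assuming meeting either combination is acceptable.

    Combination 1: sensitivity >= 80% with specificity >= 85%.
    Combination 2: sensitivity 75-79% with specificity 88-97%; "75-79" is
    read here as 75 <= sensitivity < 80 (an assumption for non-integer values).
    """
    combo_1 = sensitivity_pct >= 80 and specificity_pct >= 85
    combo_2 = 75 <= sensitivity_pct < 80 and 88 <= specificity_pct <= 97
    return combo_1 or combo_2


# Hypothetical (sensitivity %, specificity %) pairs.
for sens, spec in [(78, 90), (85, 86), (78, 96), (77, 86)]:
    print(f"sens={sens}, spec={spec}: 2010={meets_2010_criteria(sens, spec)}, "
          f"updated={meets_updated_criteria(sens, spec)}")
```

The (85, 86) pair illustrates the change. It fails the 2010 benchmark on specificity alone, but the updated combinations accept it because sensitivity is high.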

For the second set of criteria, the group established three combinations of cancer detection rate, recall rate, and positive predictive value of recall, and applied them to data from the 486 BCSC radiologists.

Radiologists meeting revised criteria for acceptable mammography performance

| Criteria | Cancer detection rate (per 1,000 exams) | Recall rate (%) | Positive predictive value of recall (%) | BCSC radiologists who met criteria (%) |
|---|---|---|---|---|
| Original 2010 | ≥ 2.5 | 5-12 | 3-8 | 40 |
| Updated criteria 1 | ≥ 6 | 3-20 | No requirement | 13 |
| Updated criteria 2 | ≥ 4 to < 6 | 3-15 | ≥ 3 | 31 |
| Updated criteria 3 | 2.5 to < 4 | 5-12 | 3-8 | 18 |
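A practice could check its own audit numbers against these updated combinations and flag readers who meet none of them. Below is a minimal sketch of that check. It assumes that satisfying any one row counts as acceptable and that the ranges in the table are inclusive as written. The reader names and values are hypothetical, and the function names are illustrative.

```python
def meets_updated_recall_criteria(cdr_per_1000: float, recall_pct: float, ppv_pct: float) -> bool:
    """True if any updated combination is met (assumed OR logic across rows).

    The cancer detection rate bands do not overlap, so the band picks the row.
    """
    if cdr_per_1000 >= 6:
        return 3 <= recall_pct <= 20  # no PPV requirement in this row
    if 4 <= cdr_per_1000 < 6:
        return 3 <= recall_pct <= 15 and ppv_pct >= 3
    if 2.5 <= cdr_per_1000 < 4:
        return 5 <= recall_pct <= 12 and 3 <= ppv_pct <= 8
    return False  # below 2.5 per 1,000, no combination applies


def flag_for_review(roster: dict[str, tuple[float, float, float]]) -> list[str]:
    """Names whose (CDR per 1,000, recall %, PPV %) miss every combination."""
    return sorted(name for name, metrics in roster.items()
                  if not meets_updated_recall_criteria(*metrics))


roster = {  # hypothetical audit results: (CDR per 1,000, recall %, PPV of recall %)
    "Reader A": (6.5, 18.0, 3.5),
    "Reader B": (4.8, 16.0, 3.0),
    "Reader C": (3.0, 10.0, 3.0),
    "Reader D": (2.0, 7.0, 2.9),
}
print(flag_for_review(roster))
```

Reader B is flagged because a 16% recall rate exceeds the 15% ceiling for that detection band. Reader D is flagged because a detection rate below 2.5 per 1,000 fails every row. A flag is a starting point for review, not a verdict.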

Art, not science?

Evaluating performance measures is a task that requires some discernment, Miglioretti told AuntMinnie.com.

"In our 2010 research, we suggested that radiologists need to have a false-positive rate between 5% and 12%," she said. "So if you have a false-positive rate below that, it's possible that you're not finding enough cancers -- your sensitivity is too low. But on the other hand, maybe it's OK to have a false-positive rate of 4% if your sensitivity is high. So it's a challenge."

How can practices use these guidelines? One idea is to plug them into their yearly Mammography Quality Standards Act (MQSA) audit, according to Miglioretti.

"A practice could add these benchmarks and flag those radiologists who don't meet the criteria -- as a place to start a discussion," she said. "Just because a radiologist isn't meeting criteria doesn't mean anything is wrong necessarily, but it merits further investigation."

Once a radiologist has been identified as needing further training, there are a number of ways to provide this, including continuing education courses or practice testing offered by the American College of Radiology (ACR), Miglioretti said. But the best way for a radiologist to improve mammography interpretation performance may be getting a second opinion from colleagues -- or working up their own findings via biopsy.

"Reviewing cases with colleagues is a great way to get feedback," she said. "And we've found in other research we've done that when radiologists work up their own findings, they learn from them."

Despite a focus on new technologies such as digital breast tomosynthesis, mammography still has much to offer, she added.

"Digital mammography is a really good tool, and if we could train people to be better readers, it would make a big impact on women," she said. "Using these performance parameters is an easy way for practices to evaluate their radiologists' performance."