PACS administrators face no shortage of problems in their daily work, and the situation isn't likely to change any time soon. New technology offers promising tools to help address these challenges, but technology is rarely the solution on its own.

That's according to a presentation by Skip Kennedy from Kaiser Permanente Medical Group of Sacramento, CA, on the top 10 problems faced by PACS administrators. Kennedy spoke during a session at the Society for Imaging Informatics in Medicine (SIIM) meeting in Minneapolis earlier this month.

"Technology is simply a tool," Kennedy said. "It's our job to provide the solutions."

Because every system has scheduled and unscheduled downtime, system availability and uptime are important issues that need to be considered in advance. Alternate workflows need to be established, Kennedy said.

Kennedy recommended that institutions consider a business continuity system as an alternate mechanism for maintaining essential functions during downtime. This can be as simple as deploying a small public domain miniPACS, or as sophisticated as using a fully redundant primary PACS network, according to Kennedy.

It's important to distinguish between the mean time to failure (how many hours the system will run before it fails) and the mean time to repair (how quickly it can be brought back up and running), Kennedy said.

"Mean time of repair often gets not as much focus in terms of our analysis and evaluation of systems," he said. "Something that we realized in our [request for proposal (RFP)] is that really expensive systems sometimes can take a very long time to restore."
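The distinction can be made concrete with the standard steady-state availability formula, availability = MTTF / (MTTF + MTTR). The following is a minimal sketch, not something shown in the presentation; the function names and the two example systems are hypothetical, and the figures are invented purely for illustration.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours


def availability(mttf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: the fraction of time the system is up."""
    if mttf_hours <= 0 or mttr_hours < 0:
        raise ValueError("MTTF must be positive and MTTR non-negative")
    return mttf_hours / (mttf_hours + mttr_hours)


def annual_downtime_hours(mttf_hours: float, mttr_hours: float) -> float:
    """Expected hours of downtime per year implied by MTTF and MTTR."""
    return (1.0 - availability(mttf_hours, mttr_hours)) * HOURS_PER_YEAR


if __name__ == "__main__":
    # Hypothetical systems; the numbers are assumptions, not vendor data.
    systems = {
        "fails more often, quick restore": (2000.0, 1.0),
        "fails rarely, slow restore": (8000.0, 40.0),
    }
    for name, (mttf, mttr) in systems.items():
        print(
            f"{name}: availability={availability(mttf, mttr):.4%}, "
            f"downtime={annual_downtime_hours(mttf, mttr):.1f} h/yr"
        )
```

Under these assumed figures, the system that fails four times less often still accumulates roughly 10 times more downtime per year, because each restore takes 40 times longer.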

Communications

Communications with the user community and change management are other problem areas that can pop up. It's important not to just send out a "send-all" e-mail to department heads, which can cause problems if that information isn't shared with all of the staff in their departments, Kennedy said.

As a result, multiple methods of communication should be utilized, including phone calls and even posters. "You cannot overcommunicate," he said. Also, anticipate misinformation and manage around it, he added.

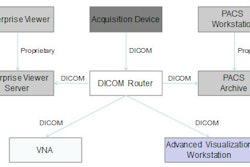

Image communication difficulties can also be a thorn in the side of a PACS administrator. Kennedy suggested diagnosing the problem from the bottom to the top: First rule out network issues before determining if it's a DICOM layer issue.

If it is a DICOM matter, it's important to note that most DICOM problems today are configuration problems, Kennedy said.

"It's not that we don't have compatibility or compliance issues, but they are much less frequent than they were 10 years ago," he said. "Most of our problems tend to be miscommunications and misconfigurations."
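The bottom-to-top approach can be sketched as a small triage routine that checks plain TCP reachability before asking any DICOM-level question. This is only an illustration under stated assumptions: `tcp_reachable` and `diagnose` are hypothetical names, and `dicom_check` stands in for whatever DICOM verification a site already uses (for example, a wrapper around a C-ECHO request); no particular DICOM toolkit is assumed.

```python
import socket
from typing import Callable


def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def diagnose(
    host: str,
    port: int,
    dicom_check: Callable[[str, int], bool],
    network_check: Callable[[str, int], bool] = tcp_reachable,
) -> str:
    """Classify a communication failure, lowest layer first.

    dicom_check is a caller-supplied (hypothetical) function that returns
    True when the remote node answers at the DICOM layer.
    """
    if not network_check(host, port):
        return "network"  # fix connectivity before looking at DICOM
    if not dicom_check(host, port):
        # Network is fine, so suspect configuration first
        # (AE title, port, or host entries that do not match).
        return "dicom"
    return "ok"
```

A result of "dicom" here would point the administrator toward the configuration settings on both ends, in line with Kennedy's observation that misconfiguration is the most common cause.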

PACS administrators frequently receive complaints that the system is too slow, which is often a subjective measure based on individual expectations.

"One of the CT applications people I worked with many years ago gave me a wonderful piece of advice, and that's to always carry a stopwatch," Kennedy said. "I do that still, because sometimes it's very hard to convince [a person who thinks it takes 35 seconds to load a CT scan]. But the stopwatch says five [seconds], and people will believe stopwatches."

Though resetting customer perceptions and expectations is sometimes part of the job, PACS administrators also need better performance metrics, Kennedy said. Real-time performance metrics and tools should be expected as native offerings built into PACS products, rather than requiring institutions to build their own, he said.
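Where such tools are not built in, the stopwatch idea can be approximated in software. The sketch below is a hedged illustration rather than a feature of any PACS product: `time_operation` and `summarize` are hypothetical helpers, and `load_study` in the usage note is a placeholder for whatever site-specific call retrieves a study.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_operation(operation: Callable[[], object], repeats: int = 5) -> List[float]:
    """Run operation `repeats` times and return each elapsed time in seconds."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()  # monotonic, high-resolution clock
        operation()
        timings.append(time.perf_counter() - start)
    return timings


def summarize(timings: List[float]) -> Dict[str, float]:
    """Reduce raw timings to figures that are easy to report to users."""
    return {
        "min": min(timings),
        "median": statistics.median(timings),
        "max": max(timings),
    }
```

A call such as `summarize(time_operation(lambda: load_study("..."), repeats=10))` would give a measured median to set against a user's impression of load time.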

The good news is that developments in scaling technology, including storage and processing virtualization, are of great help to PACS administrators seeking to improve performance.

"We can throw resources at problems that 10 years ago were simply not available," he said. "As a result, we can pose problems and solve them when we couldn't [before]. There are things that cost a million dollars not that many years ago that now can be done for 1/100th or 1/1000th of that price. Many of our PACS vendors haven't really leveraged that, but we're essentially on the tipping point in starting to see heavy use of virtualization."

Quality assurance

Quality assurance problems are part of every PACS administrator's daily activities, but there is also a shift under way from the "fix it in the back room" model to a "just get it right first" approach, Kennedy said.

Ultimate responsibility for data integrity should rest with the imaging modality itself, he said. This concept hasn't been successful in the past because QA tools haven't been available to technologists. The idea of point-of-care QA, however, is gaining ground as these tools continue to be developed.

Presentation of grouped procedures (PGP) also remains a challenge, despite the availability of tools such as the Integrating the Healthcare Enterprise's PGP integration profile, Kennedy said.

With nonradiology imaging content increasingly becoming part of PACS, administrators are finding that they're dealing more and more with other specialties such as cardiology, dermatology, and ophthalmology, Kennedy said.

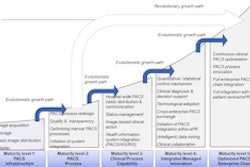

When it's time for a new PACS, even more issues come up, including PACS-to-PACS migration and the debate over whether to consider a PACS with a vendor-neutral archive.

PACS hardware and software are constantly being updated. Hardware replacement is moving away from a "big bang" model, in which all of the hardware is replaced at one time, to a model in which the hardware platform is viewed as separate from the application. This approach allows hardware to be refreshed more frequently, Kennedy said.

More tools are becoming available to provide performance metrics, including real-time dashboards. PACS analytics is a trend that will continue in the future, he said.

It's also important to manage user expectations and provide education, Kennedy said. If a system needs to be extended to provide new capabilities, he recommended avoiding software customization if possible.

"Most of us have discovered that custom code is expensive, not just in terms of what it costs to have it written but in terms of the maintenance and the lifecycle cost of custom code," he said.

The use of "bolt-ons," or plug-ins to an application, could also be considered. Institutions should also weigh changing the workflow rather than the system itself, Kennedy said.

"It's a very human tendency to say this is how we have always done it, and this is how we should always do it, and the system should do it the way we've always done it rather than reconsidering some of the options," he said. "Maybe you don't really need that piece of paper that we then scan in the system; maybe there's an option for including that in the system itself."

By Erik L. Ridley

AuntMinnie.com staff writer

June 28, 2010

Copyright © 2010 AuntMinnie.com