J Thorac Imaging 1999 Jan;14(1):37-50

Pulmonary disease in the immunocompromised child.

Pennington DJ, Lonergan GJ, Benya EC

Department of Radiology, Indiana University Medical Center, Indianapolis 46202-5200, USA.

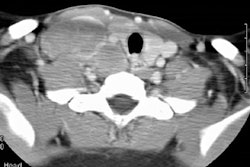

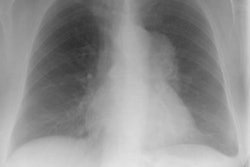

Immune deficiency states in children may be related to primary immunodeficiency syndromes or secondary disorders of the immune system. The secondary immunodeficiencies in children include human immunodeficiency virus-associated acquired immunodeficiency, as well as immunosuppression secondary to antineoplastic chemotherapeutic agents, bone marrow transplantation, and drugs given to prevent transplant rejection. This article discusses the common primary and secondary immunocompromised states of childhood, with emphasis on their attendant infectious, lymphoproliferative, and neoplastic complications.

Publication Types:

- Review

- Review, tutorial

PMID: 9894952, UI: 99110243
