ORLANDO, FL - Ask four people to describe an event they just witnessed and you are likely to get four similar, yet different, accounts. The nuances in their language give each recollection its own character. In radiology reporting, however, such variation in language limits the usefulness of report content, according to a presentation yesterday afternoon at the Society for Computer Applications in Radiology (SCAR) annual meeting.

Dr. Bruce Reiner and colleagues from the Veterans Affairs Maryland Health Care System in Baltimore recently conducted a study to determine whether the essential information content within traditional text-based radiology reports was consistent and reproducible between radiologists at the institution, regardless of experience and training.

They retrospectively selected 300 chest radiography reports from the PACS archive of the Baltimore Veterans Affairs Medical Center. According to the researchers, these included both portable and erect exams from admitted patients and outpatients.

The team evaluated several areas of information content in the reports: the presence or absence of pathology, the frequency and type of abnormal findings reported, the reporting of pertinent negatives, and word count. They then compared this information with each reporting radiologist's profile, which included age, experience, and training.

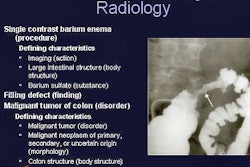

The group then mapped the descriptive terms the radiologists had used in their reports of abnormal findings to the current RadLex lexicon by consensus of three American Board of Radiology-certified radiologists, according to the researchers. (The lexicon was developed by the RSNA in collaboration with the Fleischner Society, the Society of Thoracic Radiology, and the American College of Radiology.)

"The number of reported pathologic findings was inversely proportional to the age and experience level of the radiologists," Reiner said.

In addition, the group reported that the number of findings, observations, and recommendations the radiologists made varied substantially, despite an overall consensus on diagnosis.

The research team found that the study's one subspecialty-trained thoracic radiologist reported the fewest pathologic findings per report and had the shortest word count of all the radiologists evaluated, compared with the general radiologists.

To describe similar findings, report length ranged from just over 68 words at the high end to slightly more than 50 words at the low end. Overall, 61% of the cases were positive and 39% were negative.

In 64% of the positive cases, the reports contained what Reiner described as qualifiers. The three most frequently used qualifiers, he said, were "appears to be," "suspected to be," and "suspicious of."

"Two out of three positive reports contained qualifiers," Reiner observed. "It's no wonder that clinicians are expressing dissatisfaction with our reports."

Qualifiers can also complicate data mining of text-based report content, he said. Natural language processing tools, which are currently used primarily to extract billing information, could be applied to clinical uses of text-based radiology reports such as data mining, research queries, patient management, detection of adverse events, quality assessment, and decision support, he explained.
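To illustrate why hedging language matters for automated mining, here is a minimal Python sketch that flags the three qualifiers named in the study, assuming reports are available as plain text. The function names `find_qualifiers` and `qualifier_rate` are hypothetical, and simple phrase matching stands in for a real natural language processing pipeline; it is not the method the researchers used.

```python
import re

# The three most frequent qualifiers reported in the study. A working
# lexicon would need many more hedging phrases; this list is illustrative.
QUALIFIERS = ["appears to be", "suspected to be", "suspicious of"]

# Build one case-insensitive pattern. \s+ between words tolerates line
# breaks or extra spaces; \b anchors matches on word boundaries.
_PATTERN = re.compile(
    r"\b(?:"
    + "|".join(r"\s+".join(map(re.escape, q.split())) for q in QUALIFIERS)
    + r")\b",
    re.IGNORECASE,
)


def find_qualifiers(report: str) -> list[str]:
    """Return the qualifier phrases found in one report (hypothetical helper),
    lowercased and with whitespace collapsed to single spaces."""
    return [" ".join(m.group(0).lower().split()) for m in _PATTERN.finditer(report)]


def qualifier_rate(reports: list[str]) -> float:
    """Fraction of reports containing at least one qualifier (hypothetical helper)."""
    if not reports:
        return 0.0
    return sum(1 for r in reports if find_qualifiers(r)) / len(reports)


if __name__ == "__main__":
    sample = [
        "Right lower lobe opacity appears to be pneumonia.",
        "No acute cardiopulmonary disease.",
        "Nodule SUSPICIOUS OF\nmalignancy.",
    ]
    for text in sample:
        print(find_qualifiers(text))
    print(qualifier_rate(sample))
```

A naive keyword search for "pneumonia" would count the first sample report as a positive finding even though the radiologist hedged; flagging qualifiers separately is one simple way to keep that uncertainty visible to downstream queries.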

Asked by an audience member what terms radiologists should use to express their degree of certainty in a diagnosis, Reiner recommended a numeric scale, with 1 being the least certain and 5 being the most certain.

Reiner acknowledged the study's limitations: it evaluated only four academic radiologists and used a small sample of a single exam type from a limited patient demographic group. The team plans further work in the near future to investigate report variability among different radiologist groups, institutions, and patient populations. Their goal is to aid in the development and optimization of new structured reporting applications, which have the potential to improve report content and clarity.

By Jonathan S. Batchelor

AuntMinnie.com staff writer

June 3, 2005


Copyright © 2005 AuntMinnie.com