Radiologists think that most audit statistics for mammography performance are useful, but presenting performance data graphically -- with clear benchmark data included -- makes the information easiest to grasp, according to a study to be published in the July issue of the American Journal of Roentgenology.

Erin Aiello Bowles and Berta Geller, researchers at the Group Health Center for Health Studies in Seattle and the University of Vermont in Burlington, respectively, performed a study that examined radiologists' understanding of audit measures and explored various formats for providing audit data (AJR, July 1, 2009, Vol. 193:1, pp. 157-164).

Between September 2007 and February 2008, they conducted focus groups and interviews with 25 radiologists who practice mammography. All the radiologists who participated in the study practiced at three of the five sites that make up the Breast Cancer Surveillance Consortium (BCSC): Group Health in western Washington, Vermont Breast Cancer Surveillance System, and New Hampshire Mammography Network.

Aiello Bowles and Geller also reviewed mammography outcome audit reports from all five current BCSC sites, as well as one former site and four members of the International Cancer Screening Network (U.K., Tasmania, Canada, and Israel), comparing each report with the American College of Radiology's (ACR) Basic Clinically Relevant Mammography Audit.

The ACR's audit performance measures include:

- Recall rate

- Abnormal interpretation rate

- Recommendation for biopsy or surgical consultation

- Known false negatives

- Sensitivity

- Specificity

- Positive predictive value 1: Percentage of all positive screening exams that result in cancer diagnosis within one year

- Positive predictive value 2: Percentage of all screening or diagnostic exams recommended for biopsy or surgical consultation that result in a cancer diagnosis within one year

- Positive predictive value 3: Percentage of all biopsies done after a positive screening or diagnostic examination that result in a cancer diagnosis within one year

- Cancer detection rate

- Minimal cancers

- Node-negative cancers
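
For readers who want to see how several of these measures relate to one another, the sketch below computes them from a two-by-two table of screening outcomes. It assumes the standard textbook definitions (for example, sensitivity as detected cancers divided by all cancers) and treats every positive screening exam as a recall; the article itself does not spell out these formulas. The names `ScreeningCounts` and `audit_measures` and the example counts are hypothetical and are not taken from the study.

```python
from dataclasses import dataclass


@dataclass
class ScreeningCounts:
    """Hypothetical outcome counts for one radiologist's screening exams."""
    true_pos: int   # positive exam, cancer diagnosed within one year
    false_pos: int  # positive exam, no cancer diagnosed within one year
    false_neg: int  # negative exam, cancer diagnosed within one year
    true_neg: int   # negative exam, no cancer diagnosed within one year


def audit_measures(c: ScreeningCounts) -> dict:
    """Compute a few audit measures; assumes every denominator is nonzero."""
    total = c.true_pos + c.false_pos + c.false_neg + c.true_neg
    positives = c.true_pos + c.false_pos   # exams read as positive
    cancers = c.true_pos + c.false_neg     # all cancers found within one year
    no_cancer = c.false_pos + c.true_neg   # exams with no cancer within one year
    return {
        "recall_rate": positives / total,
        "sensitivity": c.true_pos / cancers,
        "specificity": c.true_neg / no_cancer,
        "ppv1": c.true_pos / positives,
        "cancer_detection_rate_per_1000": 1000 * c.true_pos / total,
    }


# Illustrative counts only (1,000 screening exams); not data from the study.
example = ScreeningCounts(true_pos=4, false_pos=96, false_neg=1, true_neg=899)
for name, value in audit_measures(example).items():
    print(f"{name}: {value:.3f}")
```

The sketch covers only Positive predictive value 1. Values 2 and 3 would need separate counts of biopsy recommendations and of biopsies actually performed, which a simple two-by-two table does not capture.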

Almost all the radiologists who participated in the study thought these performance measures were important to their practice; they considered recall rate, sensitivity, and a list of known false-negative exams the most important.

All the radiologists said that comparison data were helpful in understanding how well they were doing and in identifying areas for improvement. This included comparisons with peers, comparisons with national guidelines, and comparisons of their own performance over time.

"[I like] comparing [my performance] to my colleagues rather than the state because I think we generally have higher recall rates than the rest of the state," one participant wrote, according to the study. "So I want to make sure I'm in line with my group."

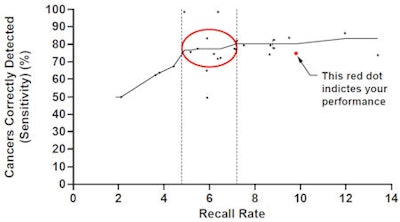

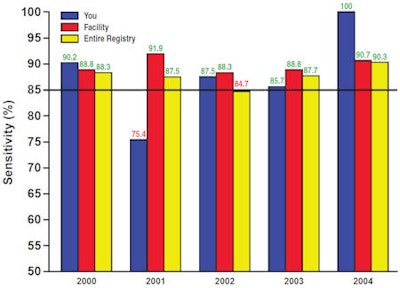

The group overwhelmingly preferred graphic displays of data to tables, particularly one that described the relationship between performance measures (recall by sensitivity scattergram), and another that compared trends in performance over time and between individuals and their peers (vertical bar graph).

Examples of audit reports presented during focus groups and interviews. Above, recall by sensitivity scattergram. Below, vertical bar graph with benchmark (85%) shows sensitivity among screening examinations. A fourth vertical bar representing sensitivity data for BCSC was added to this figure in subsequent focus groups. Images courtesy of the American Roentgen Ray Society.

"Radiologists consider themselves 'visual' individuals and find it easier to see trends in performance on a graph than in a table," Aiello Bowles wrote.

The participating radiologists also thought that providing national benchmarks within the audit report made it easier to see areas of mammography performance that need improvement, according to Aiello Bowles.

"[The radiologists] thought that benchmarks could provide a way for them to see whether they were 'veering off from typical practice standards,' " she wrote.

By Kate Madden Yee

AuntMinnie.com staff writer

June 19, 2009

Copyright © 2009 AuntMinnie.com