ChatGPT proved effective in 84% of radiology research studies, yet it is still too soon to confirm its complete proficiency for clinical applications, according to a team at the University of California, Los Angeles.

A group led by Pedram Keshavarz, MD, performed a systematic literature review and found that 37 of 44 (84.1%) radiology studies showed ChatGPT's effectiveness, yet none recommended its unsupervised use in clinical practice.

“Although ChatGPT seems to have the potential to revolutionize radiology, it is too soon to confirm its complete proficiency and accuracy,” the group wrote in a study published April 27 in Diagnostic and Interventional Imaging.

The use of ChatGPT has elicited a debate about the advantages and disadvantages of AI technologies in routine clinical practice, including concerns about potential biases in its training data that could constrain its use, according to the researchers.

To address this, the group conducted a systematic review of studies published up to January 1, 2024, that reported on the performance of ChatGPT. The researchers aimed to identify its potential limitations and explore future directions for its integration and optimization.

The review identified 44 studies that evaluated the performance of ChatGPT, 37 of which reported high performance. Overall, 24 of the 44 studies (54.5%) quantified ChatGPT's performance: 19 recorded a median accuracy of 70.5%, and five recorded a median agreement of 83.6% between ChatGPT's outputs and reference standards consisting of radiologists' decisions and/or guidelines.

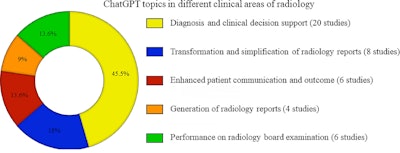

Summary of ChatGPT's topics in five different clinical areas of radiology (n = 44). Image courtesy of Diagnostic and Interventional Imaging.

In addition, studies found that ChatGPT significantly improved radiologists' decision-making processes (14/20; 70%), structured and simplified radiology reports (8/8; 100%), enhanced patient communication and outcomes (5/6; 83.3%), generated radiology reports (4/4; 100%), and performed well on radiology board examinations (6/6; 100%).

However, seven studies highlighted concerns with ChatGPT's performance, noting inaccuracies in providing information on diagnosis and clinical decision support in six (6/44; 13.6%) and in patient communication and content delivery in one (1/44; 2.3%).

Ultimately, none of the studies suggested ChatGPT’s unsupervised use in clinical practice, the researchers wrote.

Other risks and concerns observed in the studies included biased responses; limited originality; inaccurate information leading to misinformation, hallucinations, improper citations, and fake references; cybersecurity vulnerabilities; and patient privacy risks, the authors noted.

“It is too soon to confirm its complete proficiency and accuracy, and more extensive multicenter studies utilizing diverse datasets and pretraining techniques are required to verify ChatGPT's role in radiology,” the group concluded.