ChatGPT shows potential as a valuable diagnostic tool in radiology after earning a passing score on “Diagnosis Please” quizzes published from 1998 to 2023, according to a study published July 18 in Radiology.

A group at Osaka Metropolitan University in Osaka, Japan, put GPT-4 to the test on 313 case-based diagnostic imaging quizzes previously published in Radiology. They found that the large language model achieved an overall accuracy of 54%.

"Identifying differential diagnoses and settling on a final diagnosis can be challenging for radiologists. ChatGPT may lighten their workload by delivering immediate and dependable diagnostic outcomes," wrote first author Dr. Daiju Ueda, PhD, and colleagues.

"Diagnosis Please" is a diagnostic imaging CME activity published by RSNA and based on cases published in its Radiology journal. The platform offers real-world imaging cases, with users making assessments based on a list of possible diagnoses and then comparing their findings with expert interpretations.

Because the version of ChatGPT used could not process images, the researchers entered the patient history and their own written descriptions of the imaging findings from each of the 313 "Diagnosis Please" quizzes. They then asked ChatGPT to list differential diagnoses three times: first from the patient history alone, then from the imaging-finding descriptions alone, and finally from both combined. Last, the chatbot was asked to generate a final diagnosis.

Two experienced radiologists compared ChatGPT's generated diagnoses with the published diagnoses.

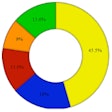

ChatGPT's accuracy in generating differential diagnoses was 22% (68/313) from patient histories alone, 57% (177/313) from imaging-finding descriptions alone, and 61% (191/313) from both combined. Its accuracy on final diagnoses was 54% (170/313), according to the findings. By subspecialty, ChatGPT performed best on cardiovascular radiology cases, at 79% (23/29), and worst on musculoskeletal radiology cases, at 42% (28/66).

"This study indicates the potential of ChatGPT as a decision support system in radiology," the authors wrote.

The authors noted limitations, namely that the "Diagnosis Please" platform is a controlled setting that may not reflect real-world complexities, and that the use of author-written findings may have contributed to ChatGPT's relatively high performance. Nonetheless, ChatGPT shows promise, they wrote.

"This tool could prove particularly beneficial in circumstances where there is a shortage of radiologists," the group suggested.

Lastly, the authors acknowledged that they used ChatGPT to generate a portion of the manuscript and that they verified its output.

The full article was published July 18 in Radiology.